AI MVP Development: How to Build, Validate, and Ship AI Products Fast

February 2, 2026 - 12 min read

AI brings very high hopes, but also a lot of uncertainty, into virtually every branch of business.

On one hand, the potential and hype are immense. Tools like OpenClaw can get hundreds of thousands of stars on GitHub within 30 days of release, and builders in the space see monumental opportunities.

On the other hand, researchers and business leaders remain sceptical because AI is still barely showing returns. Out of 4,456 CEOs surveyed by PwC, only 30% said their company had realised tangible results from AI adoption.

Despite these contrasting views, the global consensus is to keep experimenting. The largest companies have already set the direction for the future, and it is AI.

Speeches at the latest World Economic Forum suggest that Big Tech CEOs already view AI as a general commodity, similar to electricity. In the future economy, the winners will be those who can maximize token-to-dollar efficiency.

To achieve that, teams need viable use cases and the ability to test ideas fast. The days of massive AI budgets with no clear plan are slowly fading, and to find strong use cases, companies need to ideate and ship quickly to keep spend under control.

In this article, we are going to talk about how to build AI MVPs, what the prerequisites are, and how to ship them fast.

What is an AI MVP?

An AI MVP is the earliest version of a product that uses AI or large language models to solve a specific problem. The solution should include only the essential features needed to satisfy early adopters and provide a minimally usable interface.

Unlike traditional MVPs, which aim to validate the core concept of an app quickly, an AI MVP validates the AI-driven value of the solution, such as recommendations, automation, and personalization, with real users to gather feedback and assess potential profit and efficiency gains.

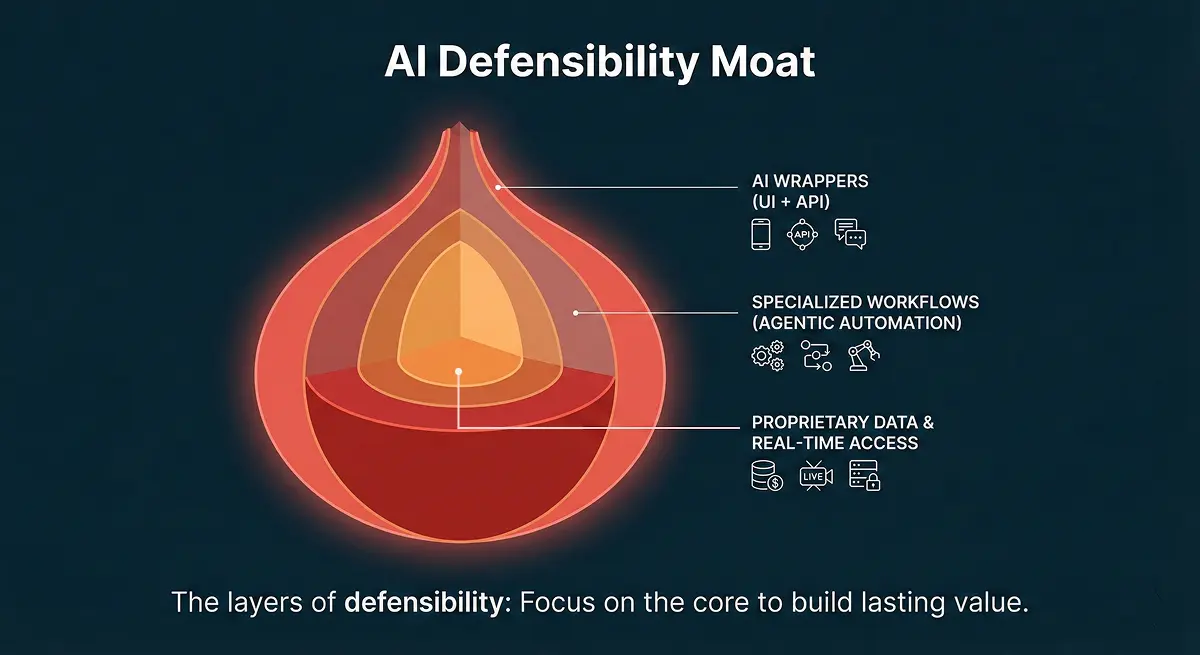

From wrappers to AI-native solutions

One reason many companies are not seeing returns from AI is that they treat it as a self-contained feature. That approach could work in 2024, when the hype was just starting and a simple layer between an LLM API and a user was enough to attract paying customers.

However, as adoption has grown, that approach no longer holds. First, the big AI companies are not leaving much room for others to profit from low-hanging opportunities. OpenAI is pushing some products out of the market faster than most people can track. A few examples:

- With the introduction of the GPT Store, many generic wrapper products became less viable because users could build and use equivalents inside ChatGPT, often on the lower-tier plans. Users no longer had to pay twice, once for ChatGPT and again for a separate “Writing assistant”, for example.

- With the recent release of AI agent builder, OpenAI also hit AI automation agencies that relied on third-party tools like n8n or Make to build workflows. Now similar capabilities are available inside OpenAI’s platform in a no-code environment, so users can build automations and agents themselves.

And these examples are not limited to OpenAI. Nearly every AI company is trying to consolidate its user base and offer as many services as possible, so users have fewer reasons to leave their platforms.

How to differentiate?

In this market, differentiation comes down to proprietary data and your ability to connect AI to it, process it, and turn it into useful insights or a product that people will actually pay for.

If you do not have proprietary data, the next best differentiator is access. That means having access to large volumes of valuable data that is hard to use because it is unstructured, scattered across multiple services, or difficult to export. In practice, this often looks like being able to collect or scrape data in near real time and feed it into AI for processing.

Examples:

- A logistics company can use its fleet telemetry and historical delivery data to predict delays and optimize routes in real time.

- If you don’t own the data, you can still win by aggregating it. For example, you could monitor product reviews across dozens of marketplaces where exports are limited, or pull regulatory updates from multiple government sites and turn them into a weekly executive brief.

Define the Problem and MVP Hypothesis

The reason this step matters is simple: most companies still aren’t seeing AI returns. PwC’s 2026 Global CEO Survey, cited earlier, shows that 56% of CEOs report no significant financial benefit from AI so far.

Gartner expects 40% of agentic AI projects to be canceled by the end of 2027, often due to unclear business value, poor data quality, inadequate risk controls, or rising costs. So if the problem isn’t clear and measurable, the MVP will struggle.

Treat an AI MVP as a pared-down product that solves one core problem and validates value before you scale.

Start with a high-friction business process and turn it into a testable hypothesis:

- What exact business problem are we solving?

- How is it solved today, and where are the bottlenecks?

- What measurable improvement will AI deliver compared to the current method?

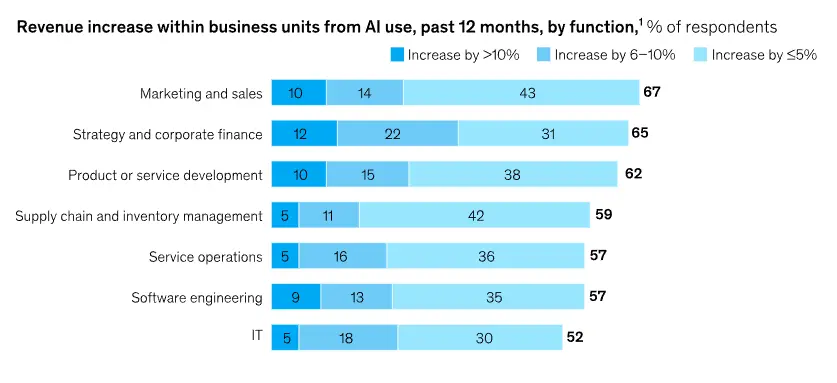

Use proven value zones as a filter. McKinsey’s global AI survey reports revenue gains most often in:

- marketing and sales

- product and service development

- supply-chain management

Cost savings are usually concentrated in manufacturing and supply chain. That doesn’t mean every use case should sit in those areas, but it’s a smart sanity check.

Next, test your data before you commit. If the data is not reliable, the hypothesis is not either.

A simple MVP hypothesis for your AI project could be:

“If we apply AI to [specific data] to automate or augment [specific task], we will improve [baseline KPI] by [target %] within [timeframe], without increasing [cost/risk].”
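
The template above can also be written down as a small data structure, which makes the pass/fail check explicit before any model work starts. This is a minimal sketch, not a prescribed format: the class name, field names, and the support-ticket numbers are all hypothetical, and it assumes the baseline KPI is non-zero.

```python
from dataclasses import dataclass


@dataclass
class MvpHypothesis:
    """One testable AI MVP hypothesis (all field names are illustrative)."""
    data_source: str        # [specific data]
    task: str               # [specific task]
    kpi_name: str           # [baseline KPI]
    baseline: float         # KPI value before the MVP; assumed non-zero
    target_pct: float       # [target %] relative improvement, e.g. 20.0
    higher_is_better: bool  # False for KPIs such as handling time or cost

    def improvement_pct(self, observed: float) -> float:
        """Relative change versus baseline, signed so that positive means better."""
        change = (observed - self.baseline) / self.baseline * 100.0
        return change if self.higher_is_better else -change

    def is_validated(self, observed: float) -> bool:
        """True when the observed KPI meets or beats the target improvement."""
        return self.improvement_pct(observed) >= self.target_pct


# Hypothetical example: AI-drafted first replies should cut handling time by 20%.
hypothesis = MvpHypothesis(
    data_source="support tickets",
    task="draft first replies",
    kpi_name="average handling time (minutes)",
    baseline=12.0,
    target_pct=20.0,
    higher_is_better=False,
)
# Handling time fell from 12 to 9 minutes, a 25% improvement.
print(hypothesis.is_validated(observed=9.0))
```

Writing the target down this way forces the team to agree on the baseline and the direction of improvement before the first iteration ships.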

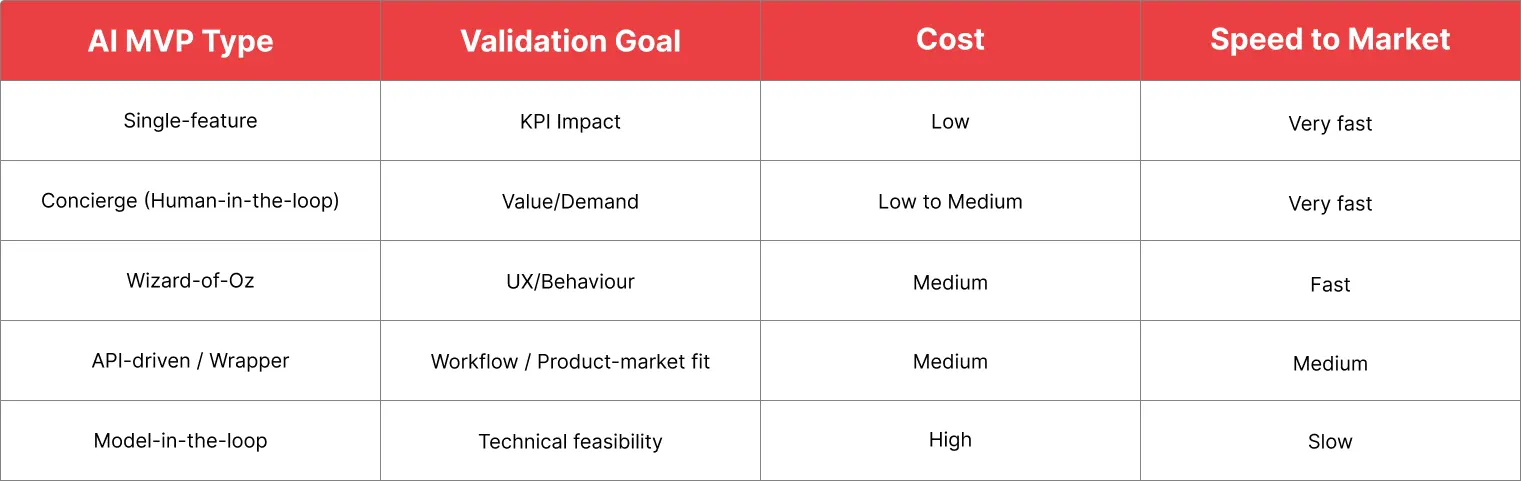

Choose the Right MVP Type and Scope

When developing an AI MVP, teams usually have a few practical options. Each one is a trade-off between speed, risk, cost, and credibility with users. The trick is to pick the lightest MVP that can still prove (or disprove) your core hypothesis.

A useful way to decide is to ask: What are you trying to validate first? Use the matrix below to guide your decision.

Below are the MVP types, with what they’re best for and how to scope them without accidentally building a full product.

MVP Type 1: Single-Feature (Product-First)

Use this when you already have data access and a clear path to automate one high-impact task. The goal is to validate that the AI-driven feature moves a real KPI, not to build a full platform.

What to scope tightly

- One user persona, one workflow, one “job to be done”

- One KPI (conversion, retention, cost-to-serve, time saved, etc.)

- Clear fallback behavior when the AI is unsure (don’t block the workflow)

Common failures

Teams ship a “single feature” that in the background requires 6 other features (admin tools, permissions, monitoring, analytics, integrations). If you need those, stub them.

MVP Type 2: Concierge (Human-in-the-Loop)

This type is used to validate demand before automating. Teams manually deliver the outcome while capturing data, expectations, and edge cases. It is slower to scale but faster to learn, and it de-risks model investment.

What to scope tightly

- Deliver the outcome manually, but standardize the intake (forms/templates)

- Collect structured feedback after every delivery (what was wrong/missing?)

- Track “time per job” and what makes jobs expensive; those become automation targets

Common failures

Concierge becomes a bespoke service business. Put guardrails around what you will/won’t support so you learn repeatable patterns instead of one-off requests.

MVP Type 3: Wizard-of-Oz (Automation Illusion)

This type is used when user experience matters most and you need realistic usage data. The front end looks automated, but humans or lightweight scripts handle the backend. It lets teams test willingness to pay before building costly infrastructure.

What to scope tightly

- Make the UI feel “real” (response times, states, error messages)

- Keep the backend manual but instrument everything (what users ask, when they churn)

- Define strict SLAs so the illusion doesn’t break (e.g., “response within 2 minutes”)

Common failures

Overpromising automation. If users feel tricked, they quickly lose trust in the product, so any claims about automation should be well thought out. You can position it as “assisted” or “early access” while still simulating the workflow.

MVP Type 4: API-Driven / Wrapper MVP (Leverage Existing Models)

This is used to quickly prove a use case without training a proprietary model. Teams rely on existing AI APIs and focus on the workflow, prompting, UI, and guardrails.

What to scope tightly

- Start with one or two “golden paths” (most common user flows)

- Add lightweight safety + reliability layers:

  - input/output constraints

  - citation/trace of sources (if applicable)

  - human fallback for uncertain cases

Common failures

Mistaking “it works in a demo” for “it works in production.” Wrappers often need retrieval, memory rules, evals, and monitoring earlier than teams expect, so scope those as minimal, not optional.

MVP Type 5: Model-in-the-Loop Prototype (Early ML in Production)

Use this when the model quality itself is the biggest unknown, and you need real data to improve. Teams ship a rudimentary model (or a baseline approach) into a controlled slice of production to gather labeled feedback and learn where it breaks.

What to scope tightly

- Restrict to low-risk decisions or “assistive mode”

- Add explicit user feedback hooks (thumbs up/down + reason codes)

- Build a small evaluation loop from day one (even if manual)
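
As an illustration of the feedback hooks listed above, the sketch below stores each thumbs up/down with an optional reason code and summarizes which failure reasons dominate. The `Feedback` record, the reason codes, and the `summarize` helper are hypothetical names chosen for this example, not part of any specific tool.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Feedback:
    prediction_id: str
    thumbs_up: bool
    reason_code: Optional[str] = None  # only expected on thumbs down


def summarize(feedback: list[Feedback]) -> dict:
    """Return the approval rate and the most common failure reasons."""
    total = len(feedback)
    positives = sum(1 for f in feedback if f.thumbs_up)
    reasons = Counter(
        f.reason_code or "unspecified" for f in feedback if not f.thumbs_up
    )
    return {
        "approval_rate": positives / total if total else 0.0,
        "top_reasons": reasons.most_common(3),
    }


# Made-up feedback from one week of assistive-mode usage.
week_one = [
    Feedback("p1", True),
    Feedback("p2", False, "wrong_entity"),
    Feedback("p3", False, "wrong_entity"),
    Feedback("p4", False, "outdated_info"),
    Feedback("p5", True),
]
summary = summarize(week_one)
print(summary["approval_rate"], summary["top_reasons"])
```

Even a manual weekly review of a summary like this gives the team labeled examples and a ranked list of where the model breaks.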

Common failures

Shipping a model with no learning loop. If you don’t collect labels/feedback systematically, you’ll stall after the first release.

Data and Model Foundations

If the data is weak, the MVP fails, even with a strong model. Gartner predicts 60% of AI projects unsupported by AI‑ready data will be abandoned through 2026. That is why data readiness is one of the top priorities when planning an AI MVP.

Start with data readiness:

- Define the minimum viable dataset: what exact fields, formats, and freshness do you need to deliver the outcome?

- Map data ownership and access rights. If you can’t legally or operationally access the data, the MVP cannot ship.

- Validate representativeness and edge cases early. AI fails on outliers, not averages.

- Put DataOps and observability in place for drift and quality monitoring.
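
A minimum viable dataset can be expressed as a small, checkable contract. The sketch below validates required fields, types, and freshness for a single record. The field names (borrowed loosely from the logistics example earlier), the seven-day freshness window, and the `check_record` helper are assumptions for illustration only.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical contract: required fields and their accepted Python types.
REQUIRED_FIELDS = {
    "order_id": str,
    "route_id": str,
    "delay_minutes": (int, float),
}
MAX_AGE = timedelta(days=7)  # assumed freshness requirement


def check_record(record: dict, now: datetime) -> list[str]:
    """Return a list of readiness problems; an empty list means the record is usable."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if record.get(field) is None:
            problems.append(f"missing {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"bad type for {field}")
    updated_at = record.get("updated_at")
    if updated_at is None:
        problems.append("missing updated_at")
    elif now - updated_at > MAX_AGE:
        problems.append("stale record")
    return problems


now = datetime(2026, 2, 2, tzinfo=timezone.utc)
record = {
    "order_id": "A-1001",
    "route_id": "R-7",
    "delay_minutes": "12",  # wrong type: string instead of number
    "updated_at": now - timedelta(days=30),
}
print(check_record(record, now))
```

Running a check like this over a sample of real records gives an early, concrete read on whether the data can support the hypothesis.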

If you want a deeper checklist, our guide on the six pillars of data readiness for AI breaks this down in a more detailed way.

Model strategy comes next

For an MVP, you rarely need the most complex model. You need the most reliable path to a measurable business outcome. Start with a single model and keep the evaluation loop tight, with a baseline, a small test set, clear failure modes, and a human override. The “must-have” AI functionality should validate value before you invest in full-scale automation.

For an AI MVP, human-in-the-loop should be mandatory. If a decision can create financial, compliance, or reputational risk, put a human checkpoint into the workflow. Define when AI can act autonomously, when it needs approval, and how exceptions are routed. This is also where ISO 27001-aligned controls, audit trails, and policy enforcement help protect the business.
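
The autonomy rules described above can be captured in a few lines so that they are explicit and reviewable. This is a sketch under stated assumptions: the two confidence thresholds and the binary `high_risk` flag are placeholder values that each team would set from its own risk policy.

```python
from enum import Enum


class Route(Enum):
    AUTO = "act autonomously"
    APPROVAL = "needs human approval"
    EXCEPTION = "route to exception queue"


# Assumed thresholds; tune them against your own evaluation data.
AUTO_THRESHOLD = 0.90
REVIEW_THRESHOLD = 0.60


def route(confidence: float, high_risk: bool) -> Route:
    """Decide how an AI output is handled based on confidence and business risk."""
    if confidence < REVIEW_THRESHOLD:
        return Route.EXCEPTION  # too uncertain: hand the case over entirely
    if high_risk or confidence < AUTO_THRESHOLD:
        return Route.APPROVAL   # a human checkpoint before anything is executed
    return Route.AUTO


print(route(0.95, high_risk=False).value)
print(route(0.95, high_risk=True).value)
print(route(0.40, high_risk=False).value)
```

Keeping the rule in one function also makes it easy to log every routing decision for the audit trail.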

AI MVP Build Workflow

The fastest AI MVPs are built with a tight loop:

Define → build → test → learn → decide.

You are not building a perfect system. You are building a proof of business value with the smallest surface area possible.

Step 1: Define the workflow before the model

Document the exact workflow where AI creates value: who triggers it, what data it needs, what output is required, and who approves it. This also tells you where to place guardrails and which systems you need to integrate first.

Step 2: Prototype the AI core

Start with a thin backend and a single model. Use a small test set and a small set of evaluation metrics tied to the business KPI. If you can’t measure improvement, the MVP can’t prove value.
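
A small evaluation loop for this step might look like the sketch below: a tiny labeled test set, a trivial baseline, and a candidate compared on the same metric, with the failures kept for review. The ticket texts, the labels, and the keyword-based `candidate` function (a stand-in for a real model call) are invented for illustration.

```python
from typing import Callable

# Tiny labeled test set; texts and labels are made up for illustration.
TEST_SET = [
    ("refund my order", "billing"),
    ("charged twice this month", "billing"),
    ("invoice is wrong", "billing"),
    ("app crashes on login", "bug"),
    ("how do I export data", "other"),
]


def baseline(text: str) -> str:
    """Trivial baseline: always answer 'other'."""
    return "other"


def candidate(text: str) -> str:
    """Stand-in for a model call; here just a keyword rule."""
    if "refund" in text or "charged" in text:
        return "billing"
    if "crash" in text:
        return "bug"
    return "other"


def evaluate(predict: Callable[[str], str], test_set: list[tuple[str, str]]):
    """Return accuracy plus the failing cases as (text, expected, predicted)."""
    failures = []
    for text, label in test_set:
        predicted = predict(text)
        if predicted != label:
            failures.append((text, label, predicted))
    accuracy = (len(test_set) - len(failures)) / len(test_set)
    return accuracy, failures


baseline_acc, _ = evaluate(baseline, TEST_SET)
candidate_acc, candidate_failures = evaluate(candidate, TEST_SET)
print(baseline_acc, candidate_acc, candidate_failures)
```

The failure list matters as much as the score: it is where clear failure modes come from, and it tells you which cases need a human override.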

Step 3: Build the minimum interface

Your UI should do only what’s needed to validate usage and willingness to pay. Avoid dashboards and advanced workflows until the core outcome is proven.

Step 4: Instrument everything

Log inputs, outputs, user actions, and overrides. Track model confidence, latency, and error types. This becomes your learning engine and protects the business from blind spots.
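
One lightweight way to instrument a model call is a wrapper that writes a structured log record for every request, including failures. In this sketch the `sink` list stands in for a real log store, and `fake_model`, which returns an output and a confidence score, is a hypothetical placeholder. Fields that your risk policy forbids logging should be dropped or redacted before the record is written.

```python
import json
import time
from typing import Callable, Optional


def logged_call(
    model: Callable[[str], tuple[str, float]],
    user_input: str,
    sink: list[str],
) -> Optional[str]:
    """Call the model and append one JSON log line to `sink`, even on failure."""
    start = time.perf_counter()
    output, confidence, error_type = None, None, None
    try:
        output, confidence = model(user_input)
    except Exception as exc:  # record the error type instead of crashing the workflow
        error_type = type(exc).__name__
    record = {
        "input": user_input,
        "output": output,
        "confidence": confidence,
        "latency_ms": round((time.perf_counter() - start) * 1000, 2),
        "error_type": error_type,
        "user_override": None,  # filled in later if a human edits or rejects the output
    }
    sink.append(json.dumps(record))
    return output


def fake_model(text: str) -> tuple[str, float]:
    """Stand-in for a real model call; returns (output, confidence)."""
    return text.upper(), 0.8


log_lines: list[str] = []
logged_call(fake_model, "summarize this ticket", log_lines)
print(log_lines[0])
```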

Step 5: Tight iteration cadence

Ship weekly, review outcomes weekly, and make decisions fast. If the AI doesn’t move the KPI after 2–3 iterations, pause and re-scope.

Common Pitfalls and Risk Controls

Most AI MVPs fail for predictable reasons. In July 2024, Gartner predicted that 30% of GenAI projects would be abandoned after proof of concept by the end of 2025, often due to unclear business value, poor data quality, inadequate risk controls, or rising costs. Use these as your risk checklist.

Pitfall 1: You validate tech, not value

If the KPI doesn’t move, the MVP is a demo, not a product. Tie success to cost reduction, revenue lift, cycle time, or compliance risk reduction.

Pitfall 2: Data access is treated as a backend task

AI depends on clean, timely, and legally usable data. Lock access and governance before you spend on model work.

Pitfall 3: Scope creep kills speed

Teams add features to “make it usable.” The result is a slow, expensive MVP that never reaches a decision point. Keep to one outcome.

Pitfall 4: No guardrails for failure modes

Hallucinations, bias, and privacy errors can destroy trust. Add human‑in‑the‑loop, confidence thresholds, and exception routing from day one.

Pitfall 5: Cost grows faster than value

Tokens, infra, and vendor fees can outpace the benefit. Track unit economics early and design for token-to-dollar efficiency.
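
Unit economics for an AI MVP can start as a single number: cost per successful outcome. The sketch below adds token spend to fixed costs and divides by the number of successful outcomes. The per-million-token prices, volumes, and fixed costs are invented figures, not any vendor's actual pricing.

```python
def cost_per_successful_outcome(
    input_tokens: int,
    output_tokens: int,
    price_in_per_million: float,
    price_out_per_million: float,
    fixed_costs: float,
    successes: int,
) -> float:
    """Total spend (tokens + fixed costs) divided by successful outcomes."""
    token_cost = (
        input_tokens / 1_000_000 * price_in_per_million
        + output_tokens / 1_000_000 * price_out_per_million
    )
    if successes == 0:
        return float("inf")  # spend with no successful outcomes
    return (token_cost + fixed_costs) / successes


# Hypothetical month: 2M input tokens, 0.5M output tokens, 96.00 in infra and vendor fees.
unit_cost = cost_per_successful_outcome(
    input_tokens=2_000_000,
    output_tokens=500_000,
    price_in_per_million=1.0,
    price_out_per_million=4.0,
    fixed_costs=96.0,
    successes=400,
)
print(unit_cost)  # 0.25 per successful outcome
```

Comparing this figure with the value of one successful outcome (time saved, revenue gained) shows whether cost is growing faster than benefit.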

Controls that de-risk the MVP

AI MVPs fail most often for boring reasons: nobody agrees on what “good” looks like, risks aren’t managed early, and teams keep shipping even when results are flat. You can avoid most of that with three lightweight rules.

Put a go/no-go checkpoint after every iteration

Treat each weekly (or biweekly) release like a mini-experiment: did we move the metric, did we learn something real, and is it safe to keep going? If not, you pause and adjust instead of drifting into “permanent pilot” mode.

Define a model risk policy

Nothing heavy, just the essentials that match your security and compliance reality:

- What data is allowed?

- What can’t be logged?

- When does a human need to review?

- Who owns the decision if something goes wrong?

Having this written down early keeps you from discovering “we can’t ship this” after you’ve already built it.

Set thresholds that trigger a rollback

For example: if hallucinations cross a certain rate, if customer complaints spike, if latency jumps, or if the cost per successful outcome goes above your ceiling, you revert to the fallback flow and fix it.
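
Rollback thresholds like these are easy to encode so that the decision is mechanical rather than debated after the fact. In the sketch below, the metric names and limits are placeholder assumptions; the point is only that each release is checked against ceilings agreed in advance.

```python
# Assumed ceilings; each team sets its own values and metric definitions.
ROLLBACK_THRESHOLDS = {
    "hallucination_rate": 0.05,
    "complaints_per_1k_sessions": 10.0,
    "p95_latency_ms": 4000.0,
    "cost_per_success_usd": 0.50,
}


def breached(metrics: dict[str, float]) -> list[str]:
    """Return the names of all metrics that exceed their ceiling."""
    return [
        name
        for name, limit in ROLLBACK_THRESHOLDS.items()
        if metrics.get(name, 0.0) > limit
    ]


def should_rollback(metrics: dict[str, float]) -> bool:
    """Revert to the fallback flow as soon as any ceiling is crossed."""
    return bool(breached(metrics))


this_week = {
    "hallucination_rate": 0.08,
    "complaints_per_1k_sessions": 3.0,
    "p95_latency_ms": 2100.0,
    "cost_per_success_usd": 0.31,
}
print(should_rollback(this_week), breached(this_week))
```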

The main goal here is to build trust. Your users should never feel like they’re beta testing something unsafe.

Case Studies and Real-World Patterns

These examples show what works when AI is tied to a clear business outcome and a tight scope.

OTTO (Retail Demand Forecasting)

OTTO used Google Cloud’s TiDE model and Vertex AI to improve forecasting accuracy by up to 30%, reducing inventory costs and improving availability (Google Cloud customer story, OTTO). This is a strong example of a single, measurable outcome tied directly to revenue and cost.

AdVon Commerce (Product Content AI MVP)

AdVon Commerce piloted a tightly scoped gen AI MVP to validate measurable impact before scaling. It partnered with Bespin Global to set up a gen AI MVP environment and proof of concept, using Gemini to automate product-description enhancements for a large retailer’s product listings.

The MVP proved value quickly. AdVon reports outcomes like +30% top search-rank placements, +67% average daily sales (a $17M revenue lift in 60 days), and ~41% higher conversions on enhanced product detail pages versus comparable items.

The AI-MVP pattern here is pretty straightforward: one high-friction workflow (PDP content quality), existing model leverage (Gemini/Vertex AI), and hard KPIs (rank, conversion, sales).

From idea to AI MVP, and what it takes to ship safely

AI MVP development only works when you can move fast and trust the foundations underneath. If you want to take the approach in this guide and turn it into a real, measurable MVP, Vodworks can help you do it end to end: we run use case discovery and ROI/feasibility checks, then build a rapid PoC to validate value before you scale.

From there, we assess and strengthen what usually breaks AI MVPs in production, including data quality and structure, infrastructure readiness, skills gaps, and security, compliance, and governance.

If you need hands-on delivery, we also implement the foundations like data architecture and pipelines, data cleansing, MLOps, and audit-ready controls.

Book a 30-minute discovery session with our AI solution architect where we discuss the current state of your data estate and AI use cases you plan to implement.
