12 Best Data Infrastructure Companies for 2026 with Pricing and Client Reviews

December 19, 2025 - 16 min read

As organisations race to move from AI experiments to production-grade products, the demand for a scalable, reliable data foundation has never been higher. It’s no longer enough to have data on a dashboard; teams must be able to quickly move, process, and secure data, and train models with it.

However, the foundation is only as strong as the standards it upholds. In this article, we’ll review top data infrastructure companies helping organizations establish the resilient backbone necessary to power modern AI use cases.

What are Data Infrastructure Companies?

Data infrastructure companies provide the foundational utilities, such as the hardware, managed services, and software, that allow data to be collected, stored, processed, and accessed across an entire organization.

These providers solve the problem of complexity. They abstract away the difficulties of managing global servers, networking, and massive database clusters, allowing engineering teams to focus on building rather than maintaining the pipeline.

Our Focus: The Foundation of the Ecosystem

Modern data infrastructure is a multi-layered ecosystem. In this article, we shifted our focus away from isolated tools that serve one purpose and decided to look at platforms and technologies that form the base of a data stack.

If you want to zoom in on the specific components of the data stack, explore our deep dives into data pipeline tools and the essential data quality tools.

By focusing on the foundational tech stack, we aim to help leaders identify strategic partners that provide the long-term stability and scalability required for AI implementation. To provide a clear overview of this landscape, we have divided the industry leaders into four categories:

- Cloud Platforms and Hyperscalers: The massive, all-in-one ecosystems providing the raw compute and storage power of the internet.

- Data Platforms: Unified environments designed to manage the data lifecycle, from ingestion and transformation to warehouse-scale analytics.

- AI Enablers: Specialized providers offering the high-performance hardware and unique datasets required to fuel artificial intelligence and machine learning.

- Data Center Providers: The physical backbone of the industry, offering the secure facilities and interconnections where the digital world resides.

Data Infrastructure Companies: Cloud and Hyperscale Providers

In this section, we’ll explore massive public cloud providers that supply the foundational compute, storage, and networking capabilities that power modern data stacks. Hyperscalers offer vast, integrated ecosystems of managed services that allow companies to scale data infrastructures with built-in security and elasticity.

Amazon Web Services (AWS)

Amazon Web Services (AWS) launched in 2006 and has grown into the world’s dominant public-cloud provider, operating a global network of 100+ data centres. Its core platform combines compute, storage, databases, and analytics so engineering teams can assemble scalable, secure, and cost-effective foundations for modern data platforms, a key reason AWS is consistently shortlisted among top data infrastructure companies.

At a building-block level, AWS spans:

- Elastic (EC2) and event-driven serverless (Lambda) compute

- Durable object storage (S3 with multiple classes)

- Managed databases (RDS, DynamoDB)

- Cloud data warehousing (Redshift).

For analytics and AI, AWS offers the following services:

- Athena (SQL on S3)

- Glue (serverless ETL and cataloging)

- QuickSight (BI)

- SageMaker (ML lifecycle)

Together, these services provide a coherent set of data infrastructure solutions for both analytics and machine learning.

For leaders evaluating data infrastructure software and operating models, AWS typically works best when paired with disciplined governance and FinOps guardrails—so the flexibility that accelerates delivery doesn’t become a cost and risk liability at scale.

Key strengths

- Unmatched scalability and global reach: AWS can scale infrastructure up or down on demand, with EC2 provisioning in minutes and Lambda scaling automatically for event workloads.

- Comprehensive ecosystem: Broad coverage across compute, storage, databases, analytics, ML and DevOps, with integration points that support end-to-end pipelines.

- Reliability and high availability: S3 is positioned as an eleven-9s durability layer, with multi-AZ architecture designed for high uptime.

- Security and compliance: Built-in encryption, DDoS protection, MFA, and compliance posture (ISO, SOC, GDPR), plus automated patching/backups in managed services like RDS.

- Cost optimisation tooling: Multiple pricing models plus Trusted Advisor, Cost Explorer, and S3 Intelligent-Tiering to help right-size and reduce storage spend over time.

Ideal use case

AWS is a strong fit for large enterprises and high-growth organisations building cloud-native applications, global digital products, and data-heavy analytics/AI initiatives, especially where elasticity, geographic reach, and service breadth matter more than standardisation on a single “all-in-one” suite.

Among big data infrastructure companies, AWS stands out when teams want to compose a tailored data platform (lake, warehouse, streaming, ML) from modular services, provided the organisation invests in operating discipline (security baselines, account governance, and cost controls) early.

Pricing model

AWS uses consumption-based pricing with on-demand, reserved, and spot options. Examples include EC2 on-demand starting around $0.01/hour for entry-level instances, and Amazon S3 Standard at $0.023/GB-month for the first 50 TB (with lower-cost tiers such as Standard-IA and Glacier options down to $0.00099/GB-month for Deep Archive).
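
To make the storage figures concrete, here is a minimal sketch of the arithmetic, using only the per-GB rates quoted above. The dataset size is a hypothetical example, and the calculation deliberately ignores request, retrieval, and data-transfer charges, which can matter a lot for archive tiers.

```python
# Rates quoted in this article (USD per GB-month). Real prices vary by
# region and change over time, so treat these as illustrative inputs.
S3_STANDARD_PER_GB = 0.023        # S3 Standard, first 50 TB
S3_DEEP_ARCHIVE_PER_GB = 0.00099  # Glacier Deep Archive


def monthly_storage_cost(gigabytes: float, rate_per_gb: float) -> float:
    """Return the storage-only monthly cost in USD.

    Requests, retrieval fees, and data transfer are not included.
    """
    return gigabytes * rate_per_gb


if __name__ == "__main__":
    size_gb = 10_000  # hypothetical 10 TB dataset (decimal terabytes)
    standard = monthly_storage_cost(size_gb, S3_STANDARD_PER_GB)
    archive = monthly_storage_cost(size_gb, S3_DEEP_ARCHIVE_PER_GB)
    print(f"S3 Standard:  ${standard:,.2f}/month")
    print(f"Deep Archive: ${archive:,.2f}/month")
```

For this hypothetical 10 TB dataset the gap is roughly $230 versus about $10 per month, which is why tiering rarely accessed data is usually one of the first cost levers teams look at.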

For a more detailed estimate that accounts for the services you plan to use, refer to the AWS Pricing Calculator.

Customer reviews and considerations

On G2, AWS is rated 4.4/5 (17,000+ reviews), with reviewers highlighting scalability, global reach, pricing flexibility, and security.

Concerns centre on a steep learning curve and pricing complexity. These factors can slow adoption and complicate cost predictability without experienced cloud architects and strong governance.

AWS remains a benchmark among data infrastructure companies because it enables fast, resilient, secure modernisation, while rewarding organisations that treat cloud adoption as an operating-model change, not just a technology purchase.

Microsoft Azure

Microsoft Azure is Microsoft’s cloud platform and one of the most comprehensive data infrastructure platforms for enterprises building cloud-scale, governed analytics and AI. Azure’s portfolio spans managed relational databases (Azure SQL Database, Azure SQL Managed Instance, SQL Server on Azure VMs), data integration (Azure Data Factory), unified analytics (Azure Synapse Analytics), Spark-based engineering and ML (Azure Databricks), and globally distributed NoSQL (Azure Cosmos DB).

Azure lets teams standardize on a secure data platform while selecting fit-for-purpose engines per workload (transactional, analytical, streaming, and AI) without fragmenting governance.

Azure’s global footprint (70+ regions and 400+ datacenters) and broad compliance portfolio are designed for multinational scale, regulated industries, and data-residency demands.

Key strengths

- Breadth of engines for modern architectures: Azure offers managed SQL, serverless integration, unified analytics (Spark/AI via Databricks), and globally distributed NoSQL for strong data infrastructure solutions.

- Elasticity with consumption economics: Serverless and autoscaling (e.g., Data Factory, Cosmos DB) allow scaling up for peaks and down for efficiency, a practical lever for cost governance.

- AI-ready building blocks: Cosmos DB's vector/hybrid search and Synapse/Databricks integration support generative-AI use cases, accelerating development without fragile point tools.

Ideal use case

Azure is best suited for enterprises and high-growth organizations that need a globally deployable data stack with strong compliance requirements, tight security controls, and integrated analytics/AI.

It is a particularly natural choice for organizations already standardized on Microsoft where shared identity, governance, and operational tooling can reduce integration friction and speed execution.

Pricing model

Azure pricing is primarily consumption-based, with levers for optimization:

- Pay-as-you-go and serverless: Data Factory and Cosmos DB support consumption patterns; Azure SQL offers provisioned and serverless options.

- Commitments and reservations: Reserved capacity and pre-purchase (e.g., Databricks commit units) can reduce unit costs for predictable workloads; Azure Hybrid Benefit can lower migration costs where existing licenses apply.

Azure provides a pricing calculator and limited free tiers/trials.

Ecosystem and integrations

Azure’s integration story is a major reason it competes strongly with other big data infrastructure companies:

- Connectivity and ingestion: Data Factory includes 90+ connectors across on-prem, multicloud, and SaaS sources (including major warehouses and business apps).

- Analytics and BI workflow: Synapse integrates with Power BI and ML services; Databricks integrates broadly across Azure services and governance via Unity Catalog.

- Governance and security: Azure Databricks’ Unity Catalog emphasizes centralized governance, auditing, and lineage, critical for regulated analytics and cross-domain AI adoption.

Customer reviews and considerations

On G2, Azure Cloud Services holds an overall rating of 4.3 out of 5 stars based on 73 reviews.

Reviewers value Azure’s breadth and its integration with Microsoft tools, plus strong perceived security and scalability.

However, users also point out several areas for improvement:

- Some users report inconsistent customer service and occasional data loss or downtime, noting that it took weeks to restore a missing file. Others mention that services can be unstable on free plans.

- Reviewers mention that Azure’s pricing can be complex and that navigating its vast array of services is overwhelming for newcomers. Small‑business users also note that integration is complex and the platform can be expensive.

Google Cloud Platform (GCP)

Google Cloud Platform (GCP) is Alphabet’s hyperscale public cloud and one of the most consequential data infrastructure companies for enterprises standardising on serverless analytics. GCP provides a fully managed foundation for building pipelines and analytics.

At the core is BigQuery, a serverless data warehouse that separates storage from compute, so teams can scale independently and avoid cluster management. Data is stored in a columnar format, replicated across locations for high availability, and supports both batch and streaming ingestion.

For many organisations comparing data infrastructure companies, GCP stands out as a cohesive data platform:

- Cloud Storage for durable object storage

- Dataflow for unified batch/stream processing (built on Apache Beam)

- Pub/Sub for event ingestion

This suite of tools is designed to autoscale with demand and minimise operational overhead.

Key strengths

- Serverless by default. BigQuery and Dataflow automatically handle infrastructure, scaling, and maintenance, reducing time spent on provisioning and patching and accelerating delivery.

- AI-ready ecosystem for the next operating model. BigQuery includes built-in ML and integrates with Gemini/Vertex AI, enabling organisations to easily add analytics and AI on top of the same governed data foundation.

- Hybrid and multicloud flexibility. BigQuery can query external sources (including Cloud Storage and Google Sheets) via external tables and federated queries, which is useful for multi-region and multi-cloud strategies.

- Security and governance controls. IAM offers fine-grained access control across services, and Cloud Storage supports encryption at rest with customer-managed keys.

Ideal use case

GCP is best for organisations that want elastic, global-scale analytics with real-time pipelines, ML workflows, and microservices, without managing infrastructure. It is particularly attractive where AI-driven decision-making, data residency, or multi-cloud execution are strategic priorities.

This makes it a pragmatic choice among data infrastructure companies for teams who need agility during turbulent loads (scale up for peak demand, scale down when workloads soften).

Pricing model

GCP largely follows pay-as-you-go pricing across services. BigQuery offers on-demand (bytes processed) and capacity-based options, plus a free tier and sandbox for low-risk evaluation. Cloud Storage, Pub/Sub, Dataflow, and Cloud Run also include free allowances, with usage-based pricing thereafter, helpful for cost-to-value alignment.

For an initial guesstimate, you can refer to Google Cloud’s pricing calculator.

Ecosystem and integrations

GCP’s core data infrastructure solutions span ingestion (Pub/Sub), processing (Dataflow), storage (Cloud Storage), analytics (BigQuery), and serverless runtime (Cloud Run). Pub/Sub integrates natively with Dataflow for exactly-once stream processing, and Cloud Run scales to zero to eliminate idle costs—useful patterns for modern, event-driven data infrastructure software architectures.

Customer reviews and considerations

G2 reviews reflect strong market confidence in GCP’s serverless approach: BigQuery is rated 4.5/5 (~1,200 reviews), with praise for pay-as-you-go pricing and ML/AI integrations, alongside concerns about cost management for heavy real-time or ad-hoc usage and a learning curve for advanced features.

Cloud Storage is rated 4.6/5 (~590 reviews) for reliability and integrations, with some feedback that pricing options and regional variance can be confusing.

Cloud Run is rated 4.6/5 (223 reviews) for ease of deployment and cost optimisation, with noted challenges around VPC networking, cold starts for heavy images, and observability gaps.

Overall, among data infrastructure companies and even the largest big data infrastructure companies, GCP’s differentiator is a tightly integrated, serverless operating model that helps enterprises modernise faster, provided they invest early in cost controls, governance, and platform skills.

IBM Cloud

IBM Cloud is a hybrid cloud platform built for regulated enterprises running mission-critical workloads, designed to operate consistently across public cloud, private environments, and on-prem infrastructure. It combines core compute, storage, and networking with higher-level services (databases, messaging, AI/ML via watsonx, and DevOps tooling), with a clear emphasis on security, compliance, reliability, and disaster recovery.

For teams evaluating data infrastructure companies, IBM Cloud’s differentiator is “hybrid by design”: it supports workloads across x86, IBM Power, and zSeries, and uses Red Hat OpenShift and Kubernetes to manage containers across on-prem and IBM data centers. It spans 60+ data centers across multizone regions to support high availability and resilience.

Data-infrastructure products

IBM Cloud’s data infrastructure solutions cover both foundational infrastructure and managed services:

- Compute: Bare Metal Servers (single-tenant, high-control configurations, including optional NVIDIA GPUs and SAP/VMware certification) and Virtual Servers (VPC) for elastic compute.

- Storage: Object Storage with multi-region replication, encryption, and “14 nines” durability, plus immutable retention options for ransomware protection and high-speed transfer via Aspera.

- Databases: Fully managed DBaaS including PostgreSQL, MySQL, MongoDB, Redis, Db2, Cloudant, and Elasticsearch, with built-in HA, backups, and point-in-time recovery; encryption uses IBM Key Protect or Hyper Protect Crypto Services.

- Streaming: Event Streams (managed Apache Kafka) with 99.99% availability across multi-zone regions and enterprise features like schema registry and Kafka Connect.

Key strengths

- Security and compliance for regulated industries: Encryption, role-based access, and certifications (HIPAA, PCI-DSS, SOC 2, ISO 27001).

- Hybrid deployment flexibility at enterprise scale: Multi-architecture support and consistent container ops with OpenShift/Kubernetes to reduce lock-in.

- AI-ready data foundation: Durable object storage, managed databases, Kafka for analytics/lakehouse, and watsonx for AI/ML readiness.

- Broad ecosystem integrations: Integrations with Terraform, OpenShift, VMware, SAP, and DevOps tools for operational consistency.

Ideal use case

IBM Cloud fits enterprises in financial services, healthcare, and government that need compliant infrastructure for mission-critical systems (including SAP) and modern analytics/AI workloads, especially when hybrid operations are non-negotiable.

Pricing model

IBM Cloud offers flexible consumption models, including a free tier, plus pay-as-you-go billing and reserved capacity options (1- or 3-year terms) for more predictable workloads.

It also promotes enterprise commitments (Enterprise Savings Plan) and targeted discounts, including object storage promotions (as low as USD 10/TB per month under a One-Rate plan) and discounts on selected bare-metal/GPU servers.

For an initial estimate, you can use the IBM Cloud cost calculator.

Ecosystem and integrations

Beyond infrastructure services, IBM Cloud emphasizes a full stack approach: container orchestration, managed databases, streaming, security services, and multi-cloud management, with integrations across common enterprise tooling (Terraform, OpenShift, VMware, SAP).

For buyers assessing various data infrastructure companies, this breadth can simplify vendor choice, if your organization values fewer strategic platforms over a patchwork of point tools.

Customer reviews and considerations

IBM Cloud holds a 4.5/5 rating on Capterra from 29 verified reviews. G2 ratings vary by product: IBM Cloud Virtual Servers score 3.8/5 (55 reviews) and Bare Metal Servers score 4.0/5 (32 reviews).

Reviewers cite strong security, encryption/MFA, and broad options/integrations; some also note always-on service operation and available tutorials.

In terms of watch-outs, buyers frequently flag catalog/pricing complexity, occasional downtime, limited regional availability, and a UI/documentation experience that can slow troubleshooting (including logs accessed via web console).

IBM Cloud is a strong contender among data infrastructure companies when regulated operations, hybrid control, and enterprise-grade resilience are the top buying criteria.

Data Infrastructure Companies: Data Platforms

This category features specialized environments that abstract away underlying infrastructure to focus on the data life cycle, from ingestion to governance. These platforms enable organizations to store, transform, and act on massive datasets using unified architectures like the data lakehouse or real-time event streams.

Snowflake

Snowflake’s AI Data Cloud is one of the most influential platforms among modern data infrastructure companies, evolving from a cloud-native data warehouse into a unified environment that combines secure storage, elastic compute, AI services, and a global data-sharing layer.

A primary differentiator is its hybrid architecture: storage and compute are decoupled, and compute is billed per second, enabling teams to scale compute up and down dynamically to optimize costs.

Key strengths

- Fully managed SaaS foundation eliminates hardware/software management, focusing effort on insights, reliability, and predictable governance.

- Decoupled storage and compute clusters allow right-sizing by workload, improving cost control.

- Cortex provides LLM-based summarization and Q&A over unstructured content; Snowflake ML/Snowpark supports native Python/Java/Scala model development.

- Horizon ensures security/access/compliance; Snowgrid enables risk-free cross-cloud sharing and replication without data copying.

- Broad connectivity (JDBC/ODBC, Spark), open table format support (Iceberg), and partner marketplace for enterprise scale.

Ideal use case

Snowflake is a strong fit for enterprises seeking a scalable, cloud-agnostic data platform that consolidates large volumes of structured and semi-structured data while supporting analytics, AI/ML, and application workloads in one environment.

It’s particularly relevant where business value depends on cross-region collaboration, faster time-to-insight, and reducing fragmentation across teams.

Pricing model

Snowflake uses a consumption model across compute, storage, and cloud services.

- Compute is billed per second (minimum 60 seconds) and only accrues while warehouses run

- Storage is charged per TB per month

- Governance/optimization “cloud services” are included up to a threshold before additional billing applies.

Cross-region/cross-cloud data transfer can also add to running costs.

Compute consumption is measured in credits, and the price per credit depends on the selected edition. A single credit costs about $2 on the Standard edition, $3 on Enterprise, and $4 on Business Critical, with list prices varying by cloud and region.
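
The billing rules above (per-second metering, a 60-second minimum, and a per-credit price) can be sketched as a small estimator. This is a rough illustration, not Snowflake's billing logic: the `credits_per_hour` value is a hypothetical input that depends on warehouse size, and storage, cloud services, and data transfer are left out.

```python
def warehouse_cost(run_seconds: float, credits_per_hour: float,
                   price_per_credit: float) -> float:
    """Estimate compute cost in USD for a single warehouse run.

    Assumes per-second billing with a 60-second minimum each time the
    warehouse runs, as described in the article. `credits_per_hour` is
    a hypothetical rate that depends on the warehouse size.
    """
    if run_seconds <= 0:
        return 0.0  # a warehouse that never ran accrues no compute charge
    billed_seconds = max(run_seconds, 60)  # apply the 60-second minimum
    credits = credits_per_hour * billed_seconds / 3600
    return credits * price_per_credit


if __name__ == "__main__":
    enterprise_rate = 3.0  # USD per credit, the Enterprise figure quoted above
    # A 20-second query is still billed as a full minute.
    print(f"${warehouse_cost(20, 1, enterprise_rate):.2f}")
    # A 90-minute job on a hypothetical 4-credits-per-hour warehouse.
    print(f"${warehouse_cost(90 * 60, 4, enterprise_rate):.2f}")
```

The minimum is what makes many short, frequently resumed runs more expensive than they look, which ties back to the reviewer concerns about warehouses that are not sized or suspended effectively.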

Ecosystem and integrations

Snowflake integrates with common drivers and connectors (including Python and Spark) and with third-party ETL/BI tools, while supporting multiple languages (SQL, Python, Java, Scala). This breadth reduces adoption friction and helps standardize the data platforms portfolio without forcing teams into a single tooling path.

Customer reviews and considerations

On G2, Snowflake is rated 4.6/5, with the bulk of sentiment clustering in four- and five-star reviews. Users frequently cite performance/scalability benefits tied to the separation of storage and compute, and highlight ease of integration with BI tools plus secure sharing with partners.

Common concerns center on cost management (especially when warehouses aren’t sized/suspended effectively), performance challenges with many small files, and the reality that newer capabilities, such as Cortex AI, are still maturing.

Databricks

Databricks is a unified data-and-AI platform built on a “lakehouse” architecture that blends the low-cost, open-format flexibility of data lakes with the performance and governance typically associated with data warehouses.

The company positions this approach as a way to reduce costs and accelerate delivery of analytics and AI initiatives. That’s one of the major reasons it’s frequently shortlisted among data infrastructure companies modernizing enterprise foundations.

At the product level, Databricks centers on a few tightly integrated pillars:

- Lakeflow for end-to-end ingestion, transformation, orchestration, and observability of pipelines (including real-time streaming);

- Lakehouse Storage for managed tables and open table formats (e.g., Delta Lake and Iceberg);

- Databricks SQL for serverless warehousing on the Photon engine with broad BI connectivity;

- Unity Catalog for unified governance, lineage, and policy enforcement across data and AI assets.

Key strengths

- Lakehouse standardization with open technologies: Databricks' model uses open-source (Spark, Delta Lake, MLflow) and open formats to reduce lock-in risk.

- Pipeline execution + operational control in one place: Lakeflow offers ingestion, incremental processing, medallion ETL, streaming, orchestration, and observability.

- High-performance warehousing with BI and AI hooks: Databricks SQL emphasizes serverless elasticity, Photon price/performance, BI tool low-latency connections, and embedded AI.

- Governance designed for scale and collaboration: Unity Catalog provides consistent discovery, access controls, lineage, compliance, and quality monitoring across clouds.

Ideal use case

Databricks fits organizations consolidating fragmented data infrastructure solutions into a single operating model for batch + streaming engineering, warehousing, and ML/AI. It’s also a strong match for enterprises that want to use GenAI agents and applications without stitching together multiple data platforms and policy layers.

Pricing model

Databricks uses pay-as-you-go, per-second consumption with optional committed-use discounts.

Pricing is based on Databricks Units (DBUs) that vary by product and capability. The platform has different multipliers for serverless features and warehouse-size-based DBU rates for SQL serverless, plus separate DBU ranges for GPU model serving.

Databricks costs can be highly elastic, but avoiding surprises at scale requires research and financial discipline. Use the Databricks pricing calculator to get an initial estimate.

Ecosystem and integrations

Databricks’ strategy is “open by design”: data remains in open table formats, with interoperability across technologies and clouds. The platform supports Python and SQL workflows across notebooks/IDEs and batch/streaming execution, connects to external catalogs (including AWS Glue and Snowflake via “foreign tables”), and integrates with mainstream BI tools such as Power BI and Tableau for analyst consumption.

Customer reviews and considerations

G2 reviewers rate Databricks highly (4.6/5 based on 627 reviews), frequently citing scalability, fast cluster provisioning, and reduced operational burden.

Reported trade-offs include UI sluggishness during heavy notebook usage, a learning curve for cluster configuration, and concerns about pricing clarity and documentation for advanced capabilities.

Confluent

Confluent is one of the most visible data infrastructure companies in real-time streaming, founded by the original creators of Apache Kafka and positioned as an enterprise data-streaming platform. It aims to replace fragile point-to-point and batch/streaming pipelines with a single, governed system that “cleans data at the source” and delivers real-time signals to AI/ML systems, with multi-cloud deployment across 60+ regions.

At its core, Confluent Cloud provides a fully managed, serverless Kafka service across AWS, Azure, and GCP, while Confluent Platform supports self-managed deployments for on-prem/private cloud needs, providing teams with a consistent real-time foundation as they modernize their data infrastructure solutions.

Beyond core streaming, Confluent’s portfolio emphasizes interoperability and governance: 120+ pre-built connectors (including CDC options for Oracle, DynamoDB, and Salesforce), Stream Governance for schema registry and data contracts, and managed Apache Flink for stateful stream processing using SQL/Python/Java.

Key strengths

- Serverless Kafka with elastic autoscaling, usage-based pricing, and multi-cloud coverage (60+ regions), cutting operational drag.

- 120+ managed connectors, including CDC for major operational systems, reduce time-to-value for legacy integration.

- Stream Governance provides schema registry, data contracts, validation, encryption, plus catalog, portal, and lineage.

- Tableflow converts topics to Iceberg/Delta tables (no ETL), claiming 30–50% lower cost for faster analytics and AI.

- Managed Flink is integrated with Kafka; an AI portfolio delivers live context to LLMs and orchestrates streaming agents.

Ideal use case

Confluent is best suited to organizations building real-time, event-driven architectures, especially those integrating many sources, migrating from legacy systems, or developing AI/ML pipelines. It’s a strong fit for microservices and high-throughput streaming environments, and for teams that need governed, trustworthy context feeding gen-AI workflows (not just batch snapshots).

Pricing model

Confluent Cloud is usage-based, measured in elastic Confluent Kafka Units (eCKUs) plus data in/out and storage.

Pricing tiers include Basic (starting at $0/month), Standard (~$385/month starting), Enterprise (~$895/month starting), and a Freight specialty tier (~$2,300/month starting) for high-scale logging/observability patterns.

Managed connectors, Stream Governance, and Flink processing are billed separately based on throughput/usage.

Confluent’s price estimator allows you to roughly assess cost savings compared to native Apache Kafka.

Ecosystem and integrations

Confluent’s integration approach centers on managed connectors (databases, warehouses, lakes, SaaS) configured via UI or API, with CDC support for common enterprise systems. The library lists over 120 connectors.

For analytics consumption, Tableflow supports open table formats (Iceberg/Delta) and notes partnerships with platforms such as Databricks and Snowflake.

Customer reviews and considerations

G2 reviewers rate Confluent 4.4/5 (112 reviews), highlighting simplified Kafka/Flink operations, a broad connector set, and productivity wins for real-time pipelines and microservices.

Primary concerns are cost scaling, with some features only available on the Enterprise plan, plus a learning curve and documentation gaps.

MongoDB

MongoDB is a developer data platform that grew out of its open-source document database and has matured into a multi-cloud foundation used by more than 50,000 customers, including over 75% of the Fortune 100.

MongoDB’s JSON-like BSON document model supports rapid product development without the friction of rigid schemas or frequent migrations.

MongoDB Atlas is the flagship managed service, running across AWS, Azure, and Google Cloud with operational controls such as backup, monitoring, security, and autoscaling.

A differentiator is how the platform increasingly adds “extra” components into the core data platform, including integrated full-text search, vector search, and stream processing, reducing integration overhead and operational risk.

Notably, MongoDB announced in September 2025 that search and vector search capabilities are available beyond Atlas in Community Edition and Enterprise Server, expanding these features for self-managed adopters.

Prepare your data infrastructure with a scalable, dbt-driven transformation layer and CI guardrails. Book a data readiness consultation with Vodworks engineers.

Key strengths

- Schema flexibility: BSON docs and a powerful query/aggregation framework accelerate model development.

- Built-in AI retrieval primitives: Native full-text and vector search support semantic/hybrid retrieval for RAG and AI agents, eliminating data movement.

- Unified platform for data access: Atlas handles operational workloads, streaming (via Kafka integration), and federated querying (across databases/object storage).

- Strong ecosystem leverage: Integrations with tooling like Airflow, Kubernetes, and AI partners (LangChain, LlamaIndex) accelerate time-to-value.
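To make the "built-in AI retrieval primitives" point concrete, here is a minimal sketch of an aggregation pipeline that uses the `$vectorSearch` stage. The index name, the embedding field, the `title` field, and the candidate multiplier are hypothetical placeholders, not values from MongoDB's documentation for any particular dataset.

```python
from typing import Any


def build_vector_search_pipeline(
    query_vector: list[float],
    index_name: str = "vector_index",  # hypothetical index name
    path: str = "embedding",           # hypothetical field that stores embeddings
    limit: int = 5,
    candidates_per_result: int = 20,   # assumption: oversample candidates for recall
) -> list[dict[str, Any]]:
    """Build an aggregation pipeline that starts with a $vectorSearch stage."""
    return [
        {
            "$vectorSearch": {
                "index": index_name,
                "path": path,
                "queryVector": query_vector,
                "numCandidates": limit * candidates_per_result,
                "limit": limit,
            }
        },
        {
            # Return a (hypothetical) title field plus the similarity score.
            "$project": {
                "_id": 0,
                "title": 1,
                "score": {"$meta": "vectorSearchScore"},
            }
        },
    ]


pipeline = build_vector_search_pipeline([0.12, -0.03, 0.88], limit=3)
print(pipeline[0]["$vectorSearch"]["numCandidates"])
```

With PyMongo, a pipeline like this would typically be passed to `collection.aggregate(pipeline)` on a collection that already has a vector search index. The query runs next to the operational data, which is the "no data movement" benefit described above.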

Ideal use case

MongoDB fits organizations building data-intensive applications that handle semi-structured or unstructured data and need rapid iteration, especially AI-powered services, real-time analytics, and event-driven microservices that benefit from built-in search, vector search, and stream processing.

Pricing model

MongoDB Atlas uses pay-as-you-go pricing tied to cluster tier, storage, and compute. The free M0 tier provides a shared cluster with 512 MB storage; shared M2 (2 GB) and M5 (5 GB) tiers are listed at about $9/month and $25/month.

Flex clusters start at roughly $0.011/hour for development/testing, while dedicated clusters start around $0.08/hour for an M10 and scale upward through larger tiers.
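Hourly rates are easier to compare with the flat monthly tiers once converted. The sketch below assumes an always-on cluster and a 730-hour month (8,760 hours per year divided by 12). It ignores storage, data transfer, and backup charges.

```python
HOURS_PER_MONTH = 730  # assumption: 8,760 hours per year / 12 months


def monthly_cost(hourly_rate_usd: float, hours: float = HOURS_PER_MONTH) -> float:
    """Convert an hourly cluster rate into an approximate monthly cost in USD."""
    return round(hourly_rate_usd * hours, 2)


flex = monthly_cost(0.011)  # starting Flex rate quoted above
m10 = monthly_cost(0.08)    # starting M10 rate quoted above
print(f"Flex: ~${flex:.2f}/month, M10: ~${m10:.2f}/month")
```

Under these assumptions, the starting Flex rate works out to roughly $8 per month and an M10 to roughly $58 per month before add-ons.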

Community Edition remains free, and Enterprise Advanced (on-prem) is custom-priced.

For a more detailed estimate, you can use MongoDB’s pricing calculator.

Ecosystem and integrations

Beyond Atlas, MongoDB provides operational tooling such as Compass (GUI), third-party integrations, and a Relational Migrator for moving from relational systems.

For self-managed environments, Enterprise Advanced includes an Ops Manager and a Kubernetes operator.

This breadth makes MongoDB relevant among big data infrastructure companies that must support both developer velocity and enterprise control.

Customer reviews and considerations

MongoDB holds a 4.5/5 overall rating on G2 based on 500+ reviews.

Reviewers frequently praise the ease of spinning up clusters and the flexibility of storing unstructured JSON-style data.

Reported concerns include a learning curve, potential performance issues if indexing is not managed well, and added complexity in the aggregation framework versus traditional SQL patterns.

Turn fragmented data pipelines into an engine that fuels your AI use cases. Speak with a Vodworks solution architect.

Data Infrastructure Companies: AI Enablers

AI Enablers is a special category that provides raw materials, such as ethically sourced datasets and high-density GPU compute, necessary to power AI use cases. These companies address the specific bottlenecks of AI development, including massive data acquisition and the extreme hardware requirements of model training.

Bright Data

Bright Data is a web-data and proxy provider that positions itself as an all-in-one platform for web data, proxy, and AI infrastructure, helping teams collect structured real-time or historical data from public websites at petabyte scale, without being derailed by bot detection.

It delivers data in formats such as JSON, HTML, or Markdown and states it’s trusted by 20,000+ customers, from AI start-ups to Fortune 500 enterprises.

Bright Data stands out because it bundles “access” and “acquisition” into a managed layer: Web Unlocker (anti-bot, CAPTCHA handling, proxy rotation), Crawl and SERP APIs, and hosted browser automation for JavaScript-heavy sites.

These features are complemented with pre-built scrapers, a dataset marketplace, and a web archive offering large-scale historical access.

Bright Data functions as a shortcut to modern data infrastructure solutions. Instead of building and maintaining custom scrapers and proxy operations, users can programmatically render JavaScript, rotate proxies, bypass CAPTCHAs, crawl targets, and receive structured output ready for downstream ETL/ML pipelines.

Key strengths

- End-to-end data acquisition stack: Combines Web Access APIs, scrapers, dataset marketplace, and managed services for self-serve or fully managed execution.

- Production workload-ready: Web Unlocker, Browser API, and proxy networks bypass bot defenses with high throughput (HTTP/3/QUIC, unlimited concurrent sessions).

- Global proxy reach: 150M+ ethically sourced residential IPs across 195 countries with geo/ASN targeting, so collected data reflects what local users actually see.

- Breadth of data options: Access 120+ curated datasets and a web archive with >1PB new data weekly, enabling "now" and "then" analysis.

Ideal use case

Bright Data is best suited to organizations that need reliable, large-scale web data without building their own scraping and proxy infrastructure. The platform is especially relevant to AI teams, e-commerce, market research, financial services, and brand-protection functions feeding analytics pipelines and models.

In a “build vs. buy” comparison, this is a pragmatic pick when speed, continuity, and geographic coverage outweigh the desire to own every component of the stack.

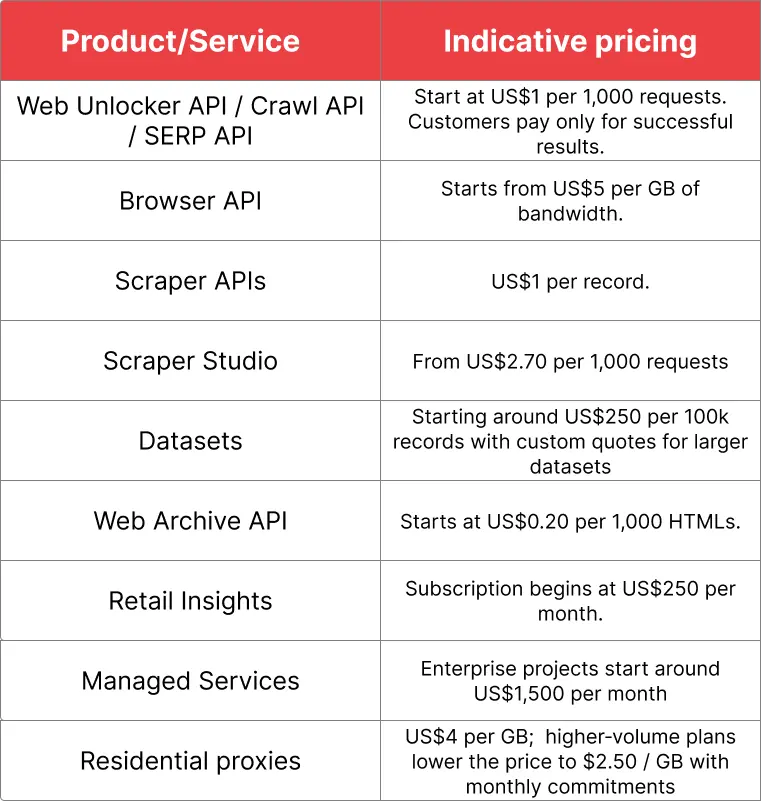

Pricing model

Bright Data’s pricing is modular and usage-based by product. The starting prices are $1 per 1,000 requests for Web Unlocker/Crawl/SERP APIs (pay-only-for-success), $5/GB for Browser API bandwidth, and additional per-record/per-request pricing for scraping and datasets (with enterprise rates available for high-volume proxy usage).
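Because each product is metered differently, a rough budget is the sum of the per-product charges. The sketch below uses the starting prices quoted above as defaults. The workload figures (two million successful requests, 50 GB of Browser API traffic) are hypothetical, and actual rates depend on plan and volume.

```python
def estimate_monthly_cost(
    successful_requests: int,
    browser_gb: float,
    price_per_1k_requests: float = 1.0,  # starting price quoted above (USD)
    price_per_gb: float = 5.0,           # starting Browser API price quoted above (USD)
) -> float:
    """Estimate a monthly bill from request volume and browser bandwidth."""
    request_cost = successful_requests / 1000 * price_per_1k_requests
    bandwidth_cost = browser_gb * price_per_gb
    return round(request_cost + bandwidth_cost, 2)


# Hypothetical workload: 2M successful requests and 50 GB of browser traffic.
print(estimate_monthly_cost(2_000_000, 50))
```

Only successful requests are counted here, in line with the pay-only-for-success model. Per-record scraper and dataset charges would be added on top.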

Ecosystem and integrations

Bright Data exposes capabilities via APIs that return JSON/HTML/Markdown and integrates with common developer tooling (Python/Node.js and popular browser automation stacks like Puppeteer and Playwright).

It also notes integration with LangChain and LlamaIndex for agentic workflows.

Customer reviews and considerations

On G2, Bright Data holds an overall rating of 4.6 / 5 based on ~277 reviews, with roughly 80% five‑star ratings.

Reviewers highlight dataset variety, strong support, and the value of pre-configured scrapers plus integrated proxy services that reduce setup effort.

Reported concerns include difficulties with downloading large datasets, gaps in pre-built coverage, performance delays during filtering at scale, and occasional friction resolving account issues.

Strategically, Bright Data is a powerful data platform that can enable and accelerate downstream AI operations. However, the breadth introduces some complexity, so buyers should be clear whether they need the full stack or only select services.

CoreWeave

CoreWeave is a U.S.-based cloud provider positioning itself as “the Essential Cloud for AI,” built around bare-metal GPU compute, AI-optimised storage, and low-latency networking for training and high-throughput inference.

For leaders comparing data infrastructure companies, CoreWeave stands out less as a general-purpose cloud and more as a specialised performance layer for GPU-heavy workloads, where speed to capacity, predictable performance, and cost efficiency can translate directly into faster model cycles and lower time-to-insight.

At the infrastructure layer, CoreWeave offers access to the latest NVIDIA GPUs (including Blackwell-class options) paired with high-core CPUs and InfiniBand networking, plus managed orchestration via its Kubernetes offering and operational tooling (Mission Control) aimed at improving GPU utilisation and training “good-put.”

Key strengths

- GPU performance and fast allocation: Direct hardware access via bare-metal GPU nodes, offering rapid on-demand access to thousands of GPUs.

- AI-native storage: AI Object Storage uses LOTA caching for up to 7 GB/s per GPU, with encryption and automated snapshots.

- High-speed networking for large clusters: NVIDIA Quantum InfiniBand enables sub-microsecond latency and high bandwidth for large training "megaclusters."

- Managed orchestration and operational control: CoreWeave Kubernetes Service (CKS) provides preconfigured bare-metal K8s with drivers and Slurm integration.

- Cost transparency: Simple per-instance/per-hour pricing bundles infrastructure and offers no-charge internal data transfer, simplifying cost forecasting.

Ideal use case

CoreWeave is ideal for organizations with GPU-intensive AI workloads (LLM training/serving, generative AI, scientific simulation, VFX rendering) where performance and access to frontier GPUs are critical. Among data infrastructure companies, it suits those with platform engineers skilled in Kubernetes, Slurm, and bare-metal operations who seek a specialized foundation rather than a general-purpose cloud.

Pricing model

CoreWeave publishes example on-demand rates across compute, storage, and networking, and notes that discounts and negotiated terms commonly apply.

Reserved capacity and multi-year leases are cited with discounts up to 60% versus on-demand rates.
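A reservation discount only pays off if the hardware stays busy. The sketch below works through the break-even point, assuming reserved capacity is billed for every hour whether or not it is used, while on-demand capacity is billed only for hours consumed. The $10/hour on-demand rate is a hypothetical placeholder, not a CoreWeave price.

```python
def reserved_hourly_rate(on_demand_rate: float, discount: float) -> float:
    """Effective hourly rate after applying a reservation discount (0 to 1)."""
    return on_demand_rate * (1 - discount)


def break_even_utilization(discount: float) -> float:
    """Fraction of hours that must be used before reserving beats on-demand.

    Assumes reserved capacity is paid for around the clock and on-demand
    capacity is paid for only while in use.
    """
    return 1 - discount


on_demand = 10.0  # hypothetical on-demand rate in USD per hour
discount = 0.60   # maximum discount cited above

print(f"Reserved rate: ${reserved_hourly_rate(on_demand, discount):.2f}/hour")
print(f"Break-even utilization: {break_even_utilization(discount):.0%}")
```

Under these assumptions, a 60% discount means a reservation is cheaper than on-demand once the capacity is in use more than about 40% of the time. Smaller discounts push that threshold higher.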

Customer reviews and considerations

Public reviews are limited (G2 had only a small number of reviews as of late 2025, though those available were highly positive), so buyer insight from rating sites is statistically weak.

One field report highlights potential cost savings versus hyperscalers and emphasises CoreWeave’s access to the latest GPUs and bare-metal performance, but also flags trade-offs: a thinner managed-service ecosystem, a sales-led onboarding motion, and the need for strong in-house infrastructure skills.

The strategic question is whether your organisation wants a specialist performance cloud as a pillar of the data platform, or a single hyperscaler-style environment with broader managed services.

Fuel your AI models with the right data. Book a call to discuss your data estate with our solution architect.

Honorable Mention: Data Center Operators

This final section examines the physical backbone of the internet: the operators who provide the secure facilities and high-speed interconnections where the cloud actually lives. These providers enable hybrid-cloud strategies by placing enterprise data in close physical proximity to major cloud on-ramps and global carriers.

Digital Realty

Digital Realty is a key data-centre REIT, operating 300+ data centers across 50+ metro areas, serving 5,000+ customers as a scaled digital transformation partner.

Using PlatformDIGITAL, it offers secure, carrier-neutral colocation and interconnection to reduce "data gravity" by placing data closer to clouds and users. Its offerings include AI-ready colocation, a comprehensive connectivity stack, and ServiceFabric for managing multi-cloud workflows via one interface.

Key strengths

- Global scale with high availability (99.999% uptime) in 300+ facilities for multi-region resilience and consistent enterprise capacity planning.

- AI-ready colocation flexibility, from single racks to high-density suites, for faster expansion of compute-intensive AI/HPC workloads.

- Interconnection depth via ServiceFabric® for low-latency, scalable hybrid/multi-cloud connections, improving "speed to data."

Ideal use case

Digital Realty serves enterprises and service providers needing global growth, multi-cloud strategies, or distributed data-exchange hubs, especially for high-density AI. It may be too large or costly for smaller or single-site deployments.

Pricing model

Digital Realty does not publish fixed pricing. Colocation and connectivity are custom-quoted based on location, power density, bandwidth, and contract terms. Analyst commentary characterises pricing as medium-to-high versus regional providers due to global footprint and enterprise-grade service levels.

Customer reviews and considerations

Reviews praise the global scale, reliability, and carrier-neutral interconnection, especially for hybrid and multi-cloud models, along with sustainability and energy efficiency. However, customers warn the offering can be excessive for small deployments, citing higher-than-regional pricing, complex fees, high initial setup costs, slower service, and the risk of vendor lock-in with large datasets and interconnects.

Equinix

Equinix (NASDAQ: EQIX) is the "world’s digital infrastructure company," operating 240+ International Business Exchange (IBX) data centers across 70+ metro areas, serving over 10,000 customers, including many Fortune 500 companies. It provides a global foundation for hybrid-multicloud performance, ecosystem adjacency, and resilience. Core offerings include secure colocation with 24/7 Smart Hands/Smart Build services.

A key differentiator is Equinix Fabric, a software-defined interconnection platform for secure, lower-latency private connectivity on demand, bypassing the public internet to support hybrid-multicloud and AI workloads.

Equinix also offers digital edge services for distributed operations, such as Network Edge for virtual network functions, Precision Time for highly accurate synchronization (up to 50 µs), and Smart View for real-time monitoring.

Key strengths

- Global scale, 99.9999% uptime for mission-critical expansion.

- Interconnection via Fabric, multicloud routing with Cloud Router.

- Network Edge for faster, reduced-hardware market entry.
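The uptime figures quoted for Digital Realty (99.999%) and Equinix (99.9999%) look almost identical, but the extra nine changes the permitted downtime by a factor of ten. A small sketch of the conversion, assuming a 365-day year and treating the percentage as an annual availability target:

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def max_downtime_minutes_per_year(availability_percent: float) -> float:
    """Maximum downtime per year (in minutes) implied by an availability target."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


for label, pct in [("99.999%", 99.999), ("99.9999%", 99.9999)]:
    minutes = max_downtime_minutes_per_year(pct)
    print(f"{label}: {minutes:.2f} min/year ({minutes * 60:.0f} s)")
```

Five nines allows a little over five minutes of downtime per year, while six nines allows roughly half a minute. How each provider measures and credits availability is defined in its own SLA, so these numbers are an upper bound on paper rather than a guarantee.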

Ideal use case

Equinix is best suited for enterprises building hybrid-multicloud architectures where predictable latency, private connectivity, and security materially impact revenue, customer experience, and compliance outcomes.

Pricing model

Pricing is largely defined by location, bandwidth, and service tier.

Interconnection pricing is typically based on port size and optional packages (including “Unlimited” style packages for fixed monthly virtual circuit use), and Fabric Cloud Router is billed monthly by tier with 12/24/36-month terms.

For internet transit, Equinix Internet Access supports fixed recurring, usage-based, or burst models. As an example, a 1 Gbps commitment on a 10 Gbps port would cost US$1,000/month.

Customer reviews and considerations

Public sources show strong satisfaction with reliability, connectivity, and security. Datamation cites consistent feedback around “strong customer focus and high-quality services,” and G2 reports a 4.4/5 average rating across ~20 reviews, with 70% giving five stars.

Key considerations: forum discussions noted that cross-connect installs can sometimes be slow and that pricing may be higher than some alternatives, so buyers should clearly define bandwidth needs, implementation timelines, and term commitments during procurement.

Build a Resilient Data Foundation with Vodworks’ AI Readiness Package

Understanding the landscape of data infrastructure companies is the first step; the second is architecting these powerful platforms into a cohesive, reliable foundation for your organization. At Vodworks, we help businesses navigate this complexity through our structured AI Readiness Package, designed to turn fragmented infrastructure into a scalable engine for growth.

We begin with an infrastructure and use case audit, where our architects pressure-test your current data estate against your AI ambitions. We evaluate computing capacity, cloud environment suitability, and integration capabilities across major providers like AWS, Azure, and GCP to ensure your foundation is built for high-performance workloads.

From there, we design a modern, scalable data architecture and long-term strategy that aligns your technical stack with tangible business value.

Then, our engineers take the lead on the heavy lifting. We build robust data pipelines, implement automated MLOps/DevOps workflows, and establish the governance frameworks needed to ensure your infrastructure is secure, compliant, and observable in production.

Book a 30-minute discovery call with a Vodworks solution architect to discuss your use case and review the data estate.
