Why You Need a Data Quality Audit and How to Run One

December 4, 2025 - 16 min read

Data quality audits are the primary defense against bad data and the flawed decisions it causes. While modern data stacks make it easy to ingest and process massive amounts of information, they don't automatically guarantee that information is correct.

For data engineers and analytics experts, a data quality audit is a strategic reset. It’s a mechanism for detecting invisible pipeline failures, stale data, and broken transformations. By validating the health of your datasets and data infrastructure, teams catch mistakes early, before the cost of an error becomes too high.

If you find yourself questioning the accuracy of a dashboard too often, it’s time for a data quality audit.

Key Takeaways:

- A data quality audit is a systematic review to ensure data is accurate, complete, consistent, and reliable for decision-making.

- A successful audit requires a clear plan and careful preparation. You must define objectives, scope, stakeholders, and the right metrics across six core dimensions of data quality.

- Automated data quality solutions offer significant advantages over manual audits in speed, scalability, and proactivity, providing continuous monitoring instead of periodic checks.

What is a data quality audit?

A data quality audit is the process of reviewing an organisation's datasets and data infrastructure for issues. Its ultimate objective is to verify data quality dimensions such as accuracy, relevance, completeness, and others.

What is the purpose of a data audit? It has two specific goals:

- Verify the quality of data at selected departments, programs, or projects.

- Assess the ability of data infrastructure to collect, manage, and provide quality data.

If the reviewed data is inaccurate or missing crucial points, the audit helps identify weak links in datasets and pipelines so they can be resolved quickly.

Internal vs. external data quality audit

There are two ways an organisation can conduct data quality audits:

- Internal data audits are often conducted as a self-assessment. They can be done frequently and modularly, allowing teams to investigate specific pipelines or datasets without performing a comprehensive analysis of the entire data estate.

- External data audits are usually a broader review of the company’s entire data estate. They are performed by external auditors, either in regulated industries to verify that the organisation adheres to data policies, or before integrating new systems or technologies, such as AI, to ensure that the current data infrastructure can support them.

To conduct internal data audits, teams need the right data quality tools and established processes that allow them to analyse large volumes of data and review current data pipelines.

External data quality audits are more comprehensive. For example, the Vodworks AI Readiness Assessment analyses all components of your data stack before AI integration, including datasets, infrastructure, people, and internal processes. This audit not only identifies data inconsistencies but also gives companies a broader picture of whether they are technically and operationally ready for AI adoption, with clear deliverables and an improvement plan.

Why data quality audits matter

According to a recent survey of 750 business leaders, 58% of them say their company’s key decisions are based on inaccurate or inconsistent data most of the time.

Regular data quality audits can significantly improve the quality of an organisation’s datasets and the reliability of its data pipelines. Ultimately, this makes decisions faster and more reliable.

However, decision-making is only the tip of the iceberg. The real changes brought by data quality audits happen earlier in the process.

Clearer data ownership

Data quality audits often force questions like: Who owns this table? Who reviews alerts? In many cases, the answer will be: No one.

Having data owners across key business units not only facilitates data audits but also ensures that a specific person manages access to each unit’s data, oversees its lifecycle, and resolves issues and discrepancies.

Eventually, this leads to shorter time to resolution for data issues, better domain knowledge within data teams, and more overall trust in data.

Less “shadow data” across the organisation

When people repeatedly see that the numbers in a dashboard don’t look right, they start pulling data from sources into spreadsheets, notebooks, and other point solutions where the data, and the insights generated from it, are invisible to others. This leads to knowledge loss, inconsistent metric definitions, security risks, and the other problems that data pipelines are designed to prevent.

Regular audits help increase trust in data across the organisation and promote usage of centralised dashboards.

Decreasing alert fatigue

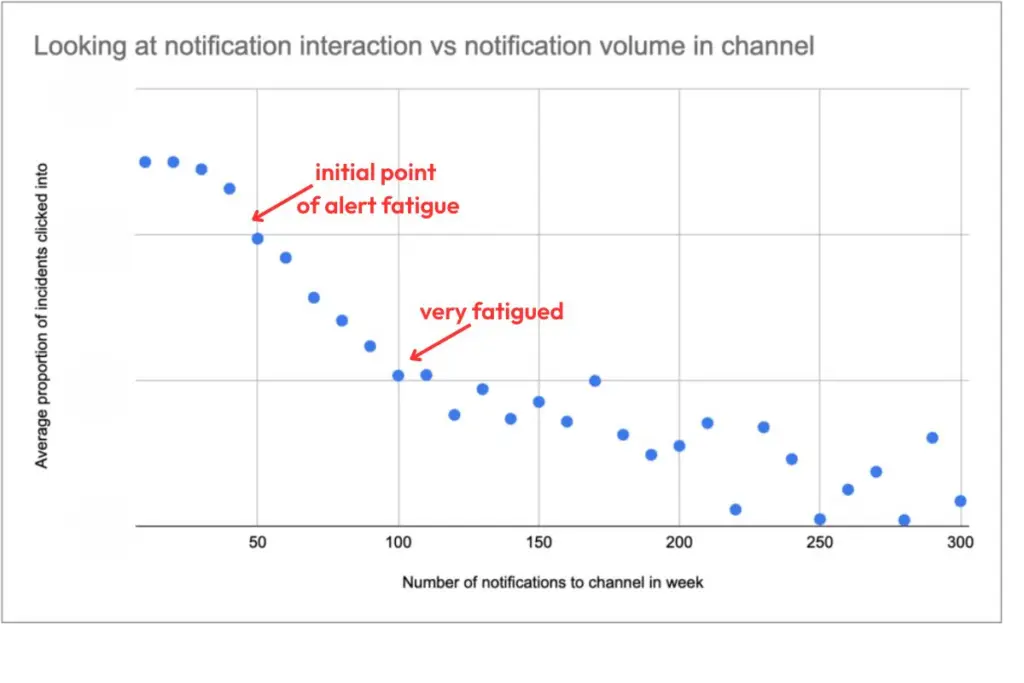

If the data engineering team stops responding to alerts from the data pipeline, data quickly becomes unreliable. Alert fatigue is a real problem: Monte Carlo’s research shows that when the person or team responsible for maintaining data pipelines receives more than 100 incident alerts per week, their incident response rate drops by about 35%.

Research shows that high notification volumes lead to alert fatigue, significantly dropping the incident response rate for data teams.

Many of those alerts may be low severity or triggered by a poorly configured alerting system, but they still have a dangerous side effect: when something truly critical breaks, the person responsible is much more likely to miss it.

Regular data quality audits will help identify and fix broken pipeline workflows as well as adjust the alerting system to minimise alert fatigue.

Cost optimisation in the data platform

While reviewing pipelines and tables, audits often find unused datasets, redundant transformations, or overly expensive jobs.

A recent report shows that up to 50% of enterprise data sits unused, silently draining cloud storage budgets. Deleting just 100 TB of unused data on AWS can save around $27,600 per year in storage alone. And once you factor in all the data jobs running on that data, the true cost multiplies quickly.
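
The storage figure is consistent with simple arithmetic. A back-of-the-envelope sketch, assuming a flat rate of $0.023 per GB-month (roughly the S3 Standard list price in us-east-1) and decimal terabytes (1 TB = 1,000 GB); actual AWS pricing is tiered by volume and varies by region and storage class:

```python
from decimal import Decimal

# Assumption: a flat S3 Standard-style rate in USD per GB-month (real pricing is tiered).
PRICE_PER_GB_MONTH = Decimal("0.023")
GB_PER_TB = 1_000  # decimal terabytes, as cloud storage is billed


def annual_storage_cost(terabytes: int, price_per_gb_month: Decimal = PRICE_PER_GB_MONTH) -> Decimal:
    """Yearly storage cost for a given volume at a flat per-GB monthly rate."""
    gigabytes = terabytes * GB_PER_TB
    return gigabytes * price_per_gb_month * 12


print(annual_storage_cost(100))  # 27600.000
```

Plugging in your own rate gives a first estimate of what unused data found by an audit is costing; compute spent on jobs that touch that data comes on top.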

Regular data quality audits identify unused tables, redundant jobs, and dashboards that haven’t been used in months, helping cut waste by reducing storage and compute costs while also decreasing the surface area for failures.

When and how often to perform data quality audits?

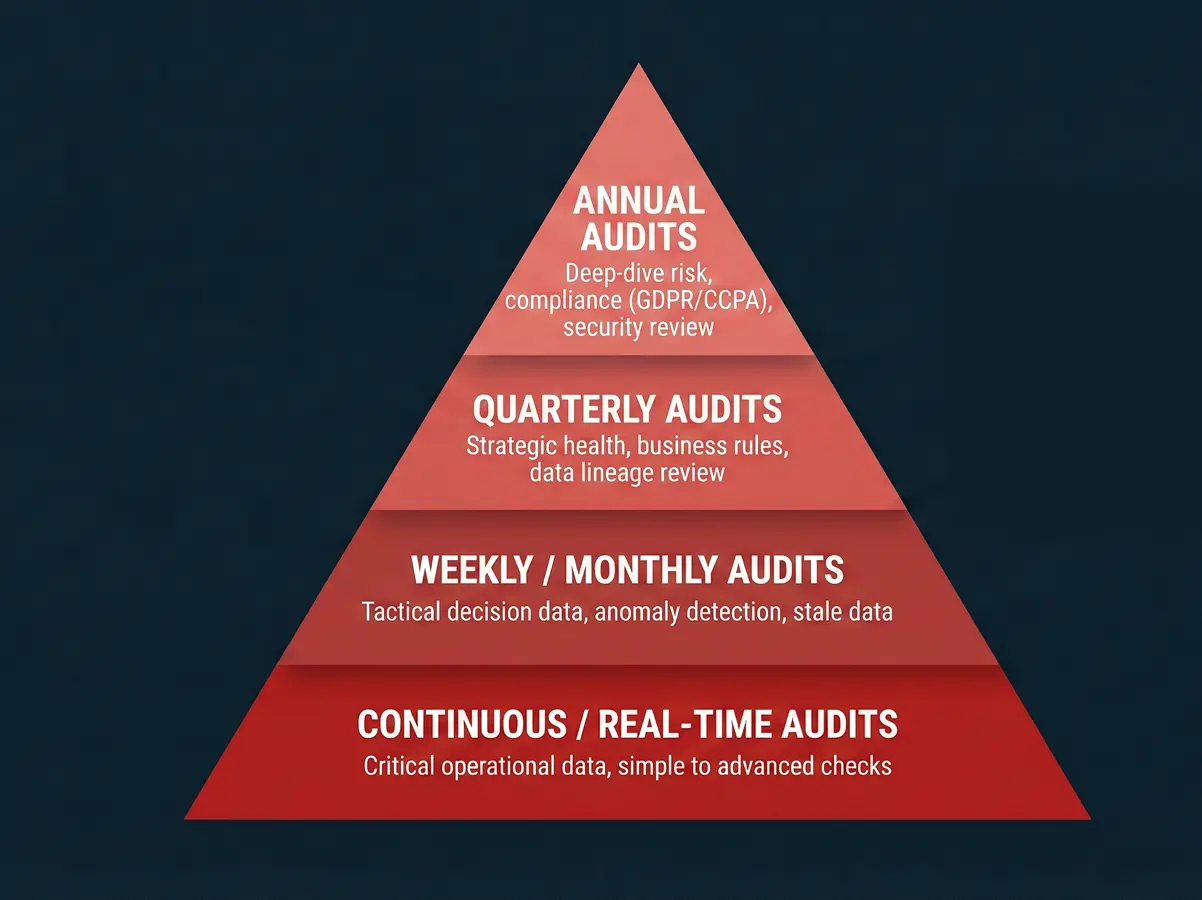

A tiered approach to data quality audits, ranging from real-time operational checks to annual strategic compliance reviews.

Data quality audits should be performed regularly. The exact frequency depends on the importance of the audited data and on the checks themselves. It makes sense to split audits into five tiers: four scheduled tiers and one event-driven (“ad-hoc”) tier.

Continuous / real-time audits

Real-time audits should verify the quality of critical operational data, where errors can break production systems. These audits are not comprehensive; instead, they serve as data quality checks throughout the data pipeline.

A helpful way to frame these is the three levels of checks:

- simple checks (non-empty tables, not-null constraints on key columns, and basic format/enum validations like valid dates or state codes),

- intermediate checks (aggregation-based checks such as uniqueness via GROUP BY),

- advanced checks (week-over-week row counts and anomaly detection on metrics like p95/p5 values for the most trusted, “gold-tier” datasets).

Here's a video describing all checks you might need.

In practice, continuous audits start with simple checks everywhere, layer in intermediate checks on key entities, and reserve the more complex, noisier advanced checks for the highest-impact pipelines.
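
The three levels can be expressed as ordinary SQL. The sketch below runs them with Python's built-in sqlite3 module against a hypothetical `orders` table; the table, its columns, the allowed state codes, and the 20% week-over-week tolerance are illustrative assumptions. The advanced level is simplified here to a row-count comparison rather than full anomaly detection on p95/p5 values.

```python
import sqlite3

# Hypothetical orders table with a few deliberately bad rows.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (order_id INTEGER, order_date TEXT, state_code TEXT, amount REAL);
INSERT INTO orders VALUES
  (1, '2025-12-01', 'CA', 120.0),
  (2, '2025-12-01', 'NY', 80.0),
  (2, '2025-12-02', 'XX', 95.0),
  (3, NULL, 'TX', 60.0);
""")


def scalar(sql: str) -> int:
    """Run a query that returns a single number."""
    return conn.execute(sql).fetchone()[0]


# Simple checks: non-empty table, not-null key columns, values from an allowed list.
row_count = scalar("SELECT COUNT(*) FROM orders")
null_dates = scalar("SELECT COUNT(*) FROM orders WHERE order_date IS NULL")
bad_states = scalar("SELECT COUNT(*) FROM orders WHERE state_code NOT IN ('CA', 'NY', 'TX')")

# Intermediate check: uniqueness of the key via GROUP BY.
duplicate_ids = scalar(
    "SELECT COUNT(*) FROM (SELECT order_id FROM orders GROUP BY order_id HAVING COUNT(*) > 1)"
)


# Advanced check (simplified): week-over-week row count change beyond a tolerance.
def week_over_week_alert(this_week: int, last_week: int, tolerance: float = 0.2) -> bool:
    """Flag a table whose row count moved more than `tolerance` (20% by default) week over week."""
    return abs(this_week - last_week) / last_week > tolerance


print(row_count, null_dates, bad_states, duplicate_ids)  # 4 1 1 1
print(week_over_week_alert(this_week=7_000, last_week=10_000))  # True
```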

Weekly / monthly audits

This tier of audits aims to keep reporting data used for tactical decisions in check: things like marketing dashboards, sales reports, and similar analytics outputs.

They focus on spotting anomalies in data distributions (for example, a sudden drop in average order value), validating allowed value lists, and identifying stale data that needs refreshing. In short, this level of auditing looks after the overall health of operational reporting data.
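
A minimal sketch of these three checks in plain Python, assuming the reporting data has already been loaded as records. The channel list, the 25% drop threshold, and the two-day staleness limit are hypothetical values that each team would set for its own reports.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical allowed-value list agreed with the marketing team.
ALLOWED_CHANNELS = {"email", "paid_search", "social", "organic"}


def aov_dropped(current_aov: float, baseline_aov: float, max_drop: float = 0.25) -> bool:
    """Distribution check: flag a drop in average order value larger than max_drop (25%)."""
    return (baseline_aov - current_aov) / baseline_aov > max_drop


def invalid_values(rows: list[dict], column: str, allowed: set[str]) -> list[dict]:
    """Allowed-value check: return the rows whose value is not on the agreed list."""
    return [row for row in rows if row.get(column) not in allowed]


def is_stale(last_updated: datetime, max_age: timedelta = timedelta(days=2),
             now: Optional[datetime] = None) -> bool:
    """Staleness check: data older than max_age (2 days by default) needs a refresh."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated > max_age


rows = [{"order_id": 1, "channel": "email"}, {"order_id": 2, "channel": "tv"}]
print(aov_dropped(current_aov=60.0, baseline_aov=100.0))  # True
print(invalid_values(rows, "channel", ALLOWED_CHANNELS))  # [{'order_id': 2, 'channel': 'tv'}]
print(is_stale(datetime(2025, 11, 30, tzinfo=timezone.utc),
               now=datetime(2025, 12, 4, tzinfo=timezone.utc)))  # True
```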

Quarterly audits

This tier of audits happens roughly quarterly and focuses on strategic and process-level data health. It’s the moment to step back and ask whether data still reflects how the business actually operates.

Quarterly audits typically involve reviewing business rules and metric definitions (are they still valid?), verifying data lineage for critical reports and KPIs end to end, and retiring unused tables, dashboards, and pipelines that create noise or risk. They usually align with financial reporting cycles, executive KPI reviews, and compliance preparations, ensuring that the numbers leaders rely on are both trustworthy and grounded in up-to-date logic.

Annual audits

This tier of audits runs annually and focuses on deep-dive risk, compliance, and long-term data hygiene. Yearly audits zoom out to look at how securely and responsibly data is managed across the organisation.

They typically include a full security and access review, formal compliance checks and certifications (GDPR, CCPA, HIPAA, or industry-specific standards), and a thorough refresh of master data management (MDM) practices. This is also when teams should review archival data strategies, audit PII handling end to end, and revisit data governance policies to ensure they still match regulatory requirements and the company’s risk appetite.

“Ad-Hoc” Audits

The last tier of data quality audits doesn’t follow a schedule. Instead, specific events trigger an immediate audit. Do not wait for the next yearly review if any of the following occur:

- System Migrations: Immediately after moving data from a legacy system to a new warehouse (e.g., moving from on-prem SQL to Snowflake).

- Mergers & Acquisitions: When integrating a new company's data, as their definitions of "customer" or "active user" likely differ from yours.

- New Regulatory Requirements: If a new privacy law (like a state-level Privacy Act) passes, audit your data retention and PII classification immediately.

- Implementation of new tech: Whenever you’re planning to roll out new, data-dependent technology across the organisation, a data audit should be your first priority before implementation.

Establishing key metrics for a data quality audit

Before starting an audit, teams must define what they are measuring and what their acceptance thresholds are. Without pre-defined metrics, an audit can find errors without showing whether they matter.

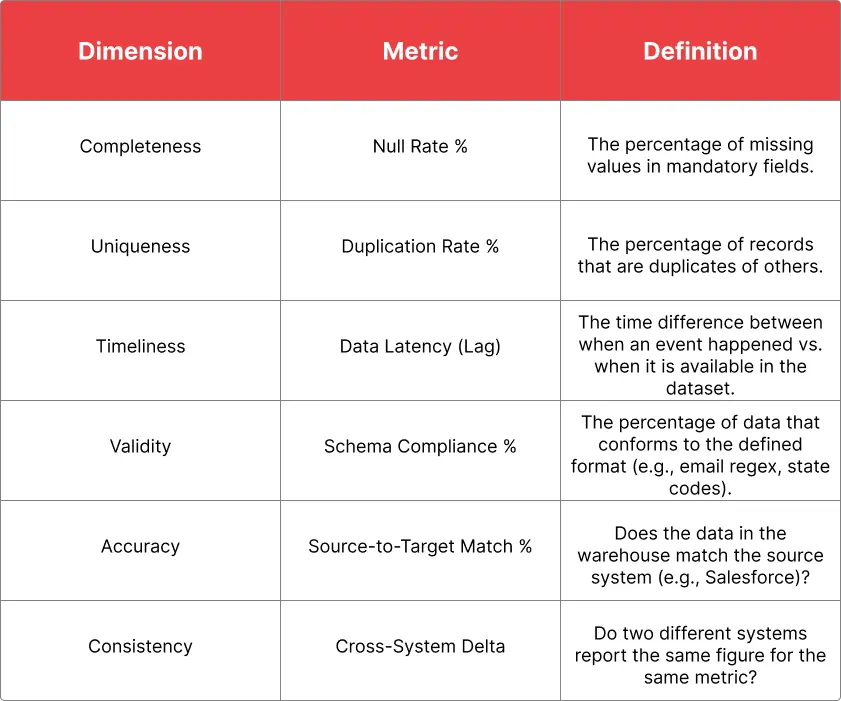

The “Big 6” Core Quality Dimensions

These are the industry-standard dimensions, aligned with the DAMA framework, that tell you whether the data is trustworthy.

We won’t re-explain each data quality dimension here, as that’s been covered many times and isn’t the focus of this article. Instead, the table below lists practical metrics and definitions you can use to measure each dimension during your data quality audits.

The six core dimensions of data quality with practical metrics to measure them during an audit.
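
As an illustration of how such metrics are usually computed, the sketch below expresses each dimension as the percentage of records that pass a rule. It assumes the six DAMA-style dimensions are completeness, uniqueness, validity, timeliness, accuracy, and consistency; that accuracy is checked against a trusted reference source; and that consistency compares the same attribute in a second system. Field names, the email pattern, and the sample records are hypothetical.

```python
import re
from datetime import datetime, timedelta


def pct(passed: int, total: int) -> float:
    """Share of records that pass a rule, as a percentage (100% for an empty set)."""
    return 100.0 if total == 0 else 100.0 * passed / total


def completeness(rows, column):
    return pct(sum(r.get(column) is not None for r in rows), len(rows))


def uniqueness(rows, key):
    keys = [r[key] for r in rows]
    return pct(len(set(keys)), len(keys))


def validity(rows, column, pattern):
    return pct(sum(re.fullmatch(pattern, str(r.get(column) or "")) is not None for r in rows), len(rows))


def timeliness(rows, column, now, max_age):
    return pct(sum(now - r[column] <= max_age for r in rows), len(rows))


def accuracy(rows, key, column, reference):
    """Agreement with a trusted reference source (e.g. a verified master record)."""
    return pct(sum(reference.get(r[key]) == r.get(column) for r in rows), len(rows))


def consistency(rows, key, column, other_system):
    """Agreement of the same attribute across two systems, over keys present in both."""
    shared = [r for r in rows if r[key] in other_system]
    return pct(sum(other_system[r[key]] == r.get(column) for r in shared), len(shared))


rows = [
    {"id": 1, "email": "a@example.com", "updated": datetime(2025, 12, 3)},
    {"id": 2, "email": None, "updated": datetime(2025, 11, 1)},
    {"id": 2, "email": "not-an-email", "updated": datetime(2025, 12, 4)},
    {"id": 4, "email": "d@example.com", "updated": datetime(2025, 12, 4)},
]
EMAIL = r"[^@\s]+@[^@\s]+\.[a-z]+"  # deliberately loose, illustrative pattern
print(completeness(rows, "email"), uniqueness(rows, "id"), validity(rows, "email", EMAIL))  # 75.0 75.0 50.0
print(timeliness(rows, "updated", now=datetime(2025, 12, 4), max_age=timedelta(days=7)))  # 75.0
```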

Pipeline health metrics

These metrics measure the stability of the infrastructure. You should establish baselines for these before the audit so you can spot anomalies.

Volume drift

This measures the standard deviation of row counts over time. For example, if the pipeline normally ingests 10k rows/day and today it ingested 2k, the overall data quality is compromised even if the 2k rows are perfect.
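
One common way to turn the standard deviation into an alert is a z-score: how many standard deviations today's row count sits from the recent mean. A minimal sketch, assuming a week of daily row counts as history and a 3-sigma alert threshold (both illustrative choices):

```python
import statistics


def volume_drift(history: list[int], today: int) -> float:
    """How many standard deviations today's row count sits from the historical mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs at least two points
    if stdev == 0:
        return 0.0 if today == mean else float("inf")
    return (today - mean) / stdev


# Roughly 10k rows/day, as in the example above, followed by a 2k-row day.
daily_rows = [10_050, 9_900, 10_120, 9_980, 10_000, 10_070, 9_880]
z = volume_drift(daily_rows, today=2_000)
print(f"{z:.1f} standard deviations from the mean")
if abs(z) > 3:  # assumption: a 3-sigma alert threshold
    print("Volume drift alert")
```

A fixed threshold is the simplest option; pipelines with strong weekly seasonality usually compare against the same weekday instead of the trailing week.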

Schema changes

Measures the frequency of unannounced changes to table structure, such as new columns or changed column types.
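
Schema changes can be detected by diffing snapshots of a table's columns and types taken on each run; counting the differences per week gives the frequency metric. A minimal sketch, assuming the snapshots are plain `{column: type}` dictionaries (in practice they might be read from a warehouse's `information_schema.columns` view or SQLite's `PRAGMA table_info`). Telling announced from unannounced changes would additionally require a change log, which this sketch does not model.

```python
def schema_changes(previous: dict[str, str], current: dict[str, str]) -> list[str]:
    """Compare two {column: type} snapshots and describe every structural change."""
    changes = []
    for column in sorted(current.keys() - previous.keys()):
        changes.append(f"added {column} ({current[column]})")
    for column in sorted(previous.keys() - current.keys()):
        changes.append(f"dropped {column}")
    for column in sorted(previous.keys() & current.keys()):
        if previous[column] != current[column]:
            changes.append(f"type of {column}: {previous[column]} -> {current[column]}")
    return changes


# Hypothetical snapshots of the same table on two consecutive days.
yesterday = {"order_id": "INTEGER", "amount": "DECIMAL(10,2)", "created_at": "TIMESTAMP"}
today = {"order_id": "INTEGER", "amount": "FLOAT", "created_at": "TIMESTAMP", "coupon": "TEXT"}
print(schema_changes(yesterday, today))
# ['added coupon (TEXT)', 'type of amount: DECIMAL(10,2) -> FLOAT']
```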

Failed job rate

The percentage of pipeline runs per week that fail or require manual intervention.

Business impact metrics

Establishing these metrics is crucial to quantify the cost of bad data and secure the resources for data quality tools and future improvements.

Cost of poor data quality

Estimate the revenue lost or operational costs associated with errors (e.g., return-to-sender costs for bad addresses or hours spent fixing reports).

Data usability / trust score

A survey metric (1-10) that asks key stakeholders: “How confident are you that this dashboard is correct?”

Preparing for a data quality audit

Preparation is arguably more important than the audit itself. If you start auditing without a plan, you will likely get overwhelmed by the sheer volume of "imperfect" data and struggle to prioritise what matters.

Here is an action plan for a successful data quality audit, moving from strategy to technical setup.

Defining the audit scope

The biggest mistake is trying to audit all data at once. Teams need to be specific about a business domain or a process they want to start the audit with.

- Select High-Impact Data: Focus on the "Critical Data Elements". These are the fields that, if wrong, cost money or break laws.

- Define the Breadth: Are you looking at just the data warehouse, or the entire pipeline from entry to dashboard?

- Set a Time Window: Are you auditing the last 30 days of data, or the entire historical archive? Start with recent data, as it's usually more actionable.

Gather the documentation

It’s hard to audit data without knowing what “correct” looks like. A standard to compare against is vital for a data quality audit.

- Find (or create) data dictionaries: Data documentation should clearly state how the data should be formatted and structured. For example, a revenue column should be a decimal with 2 places. If data dictionaries don’t exist, the auditor needs to create a temporary standard before the audit.

- Map data lineage: If there’s an error in the warehouse, the auditor needs to know which upstream system (Salesforce, Shopify, SAP) created it.

- Review business rules: Verify that specific logic, such as “A customer is only ‘Active’ if they have logged in within 30 days,” is in place and working.
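
Once the documentation exists, both kinds of standards can be checked mechanically. A minimal sketch of the two examples above, assuming revenue values arrive as Python `Decimal`s, that customer records carry `status` and `last_login` fields (hypothetical names), and that “within 30 days” includes day 30:

```python
from datetime import date, timedelta
from decimal import Decimal


def violates_revenue_format(value) -> bool:
    """Data dictionary rule (from the example): revenue is a decimal with exactly 2 places."""
    return not (isinstance(value, Decimal) and value.as_tuple().exponent == -2)


def expected_status(last_login: date, as_of: date) -> str:
    """Business rule: 'Active' only if the customer logged in within the last 30 days."""
    # 'Inactive' is a hypothetical label for every other customer.
    return "Active" if as_of - last_login <= timedelta(days=30) else "Inactive"


def rule_violations(customers: list[dict], as_of: date) -> list[int]:
    """IDs of customers whose stored status disagrees with the documented rule."""
    return [c["id"] for c in customers if c["status"] != expected_status(c["last_login"], as_of)]


print(violates_revenue_format(Decimal("19.99")), violates_revenue_format(Decimal("19.9")))  # False True
customers = [
    {"id": 1, "status": "Active", "last_login": date(2025, 11, 20)},
    {"id": 2, "status": "Active", "last_login": date(2025, 9, 1)},
]
print(rule_violations(customers, as_of=date(2025, 12, 4)))  # [2]
```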

Stakeholder survey

Surveying the people who use the data on a daily basis is a good idea, because they often know the details.

Asking the following questions might help further down the line:

- “Which report do you trust the least?”

- “Is there a dashboard you stopped using because the numbers don’t add up?”

- “Do you have any separate tables with data because you don’t trust the dashboards?”

Asking engineers which pipelines or APIs break most often can also provide insight into the overall health of the data infrastructure.

Technical environment setup

Finally, the team needs to ensure that everyone involved has the required access and tools, so the audit doesn't stall on day one.

- Read-Only Access: Ensure the audit team has read-only permissions on the production tables. Auditing with write permissions is a bad idea because of the risk of accidental deletions.

- Sandboxing: Set up a temporary schema or "sandbox" to hold audit results and error tables without polluting the production database.

- Tool Selection: Gather the toolkit for a successful audit:

  - SQL scripts: Good for one-off deep dives.

  - Python/Pandas: Good for complex statistical analysis.

  - DQ Tools (Great Expectations, Soda): Good for automated rule checking.

A step-by-step data quality audit process

Having the metrics, documentation, and technical details sorted out means we can now move into the core execution phase.

Step 1. Execute defined rules and metrics

Run the automated checks for the six dimensions discussed earlier against the data selected in your scope.

- Running the checks: Here we execute SQL queries, scripts, or use data quality tools on the scoped data.

- Recording raw counts: Then, it’s crucial to record the raw number of errors for each metric.

- Measuring timeliness: Another key thing is to document the latency of audited data pipelines against the target SLAs.
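
A minimal sketch of this step, assuming each scoped rule is a SQL query that returns its number of failing rows, and that source and load timestamps are available for each pipeline run. The rule names, the `customers` table, and the two-hour SLA are hypothetical:

```python
import sqlite3
from datetime import datetime, timedelta, timezone

# Hypothetical rules for the scoped data: each query returns a count of failing rows.
RULES = {
    "customers.email not null": "SELECT COUNT(*) FROM customers WHERE email IS NULL",
    "customers.id unique": "SELECT COUNT(*) - COUNT(DISTINCT id) FROM customers",
}


def run_rules(conn: sqlite3.Connection, rules: dict[str, str]) -> dict[str, int]:
    """Execute every rule and record its raw error count (no scoring at this stage)."""
    return {name: conn.execute(sql).fetchone()[0] for name, sql in rules.items()}


def latency_vs_sla(source_event_at: datetime, loaded_at: datetime,
                   sla: timedelta) -> tuple[timedelta, bool]:
    """Pipeline latency for one run and whether it met the target SLA."""
    latency = loaded_at - source_event_at
    return latency, latency <= sla


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER, email TEXT);
INSERT INTO customers VALUES (1, 'a@example.com'), (1, NULL), (2, 'b@example.com');
""")
print(run_rules(conn, RULES))  # {'customers.email not null': 1, 'customers.id unique': 1}

latency, met_sla = latency_vs_sla(
    source_event_at=datetime(2025, 12, 4, 6, 0, tzinfo=timezone.utc),
    loaded_at=datetime(2025, 12, 4, 9, 30, tzinfo=timezone.utc),
    sla=timedelta(hours=2),
)
print(latency, met_sla)  # 3:30:00 False
```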

Step 2. Calculate the data quality scorecard

The next step is to convert raw error counts into a quantitative score that can be easily understood and tracked over time.

For each table and metric, we need to calculate the compliance percentage and compare it with acceptance thresholds.

For example, if the target for completeness is 98% and we have a 2.5% null rate, the final score is 97.5%, which is a fail.

The final scorecard should summarise the compliance percentage for every critical data element.
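
The calculation is simple enough to sketch directly. The example below reproduces the completeness case from the text (2.5% nulls against a 98% target) alongside a hypothetical uniqueness metric; metric names and record counts are illustrative:

```python
def compliance(error_count: int, total: int) -> float:
    """Percentage of records that pass a metric."""
    return 100.0 * (total - error_count) / total


def scorecard(results: dict[str, tuple[int, int]],
              thresholds: dict[str, float]) -> list[tuple[str, float, float, str]]:
    """Rows of (metric, compliance %, target %, PASS/FAIL) from raw (errors, total) counts."""
    rows = []
    for metric, (errors, total) in results.items():
        score = compliance(errors, total)
        target = thresholds[metric]
        rows.append((metric, round(score, 2), target, "PASS" if score >= target else "FAIL"))
    return rows


results = {"orders.customer_id completeness": (250, 10_000), "orders.order_id uniqueness": (0, 10_000)}
thresholds = {"orders.customer_id completeness": 98.0, "orders.order_id uniqueness": 100.0}
for row in scorecard(results, thresholds):
    print(row)
# ('orders.customer_id completeness', 97.5, 98.0, 'FAIL')
# ('orders.order_id uniqueness', 100.0, 100.0, 'PASS')
```

Keeping the raw counts next to the percentages makes it easy to re-run the same scorecard after remediation and compare results over time.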

Step 3. Validate errors with business context

Not every error is critical. To determine severity, errors need to be validated with the business users who were interviewed during the preparation phase.

- Sample inspection: Manually inspect a sample of the raw error records. Does the error make the entire record unusable, or is it a minor cosmetic issue?

- Prioritisation: Using the feedback from domain experts, assign a severity level to each failing metric. The next steps should focus on eliminating high-severity failures.
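
Both activities benefit from a little tooling. A minimal sketch, assuming failing records and metrics are available as Python dictionaries and that domain experts have agreed on a three-level severity scale (a hypothetical choice); the fixed random seed keeps the inspection sample reproducible, so every reviewer looks at the same records:

```python
import random

# Hypothetical severity scale agreed with domain experts.
SEVERITY_ORDER = {"high": 0, "medium": 1, "low": 2}


def inspection_sample(error_records: list[dict], k: int = 50, seed: int = 7) -> list[dict]:
    """Reproducible random sample of failing records for manual review."""
    rng = random.Random(seed)
    return rng.sample(error_records, min(k, len(error_records)))


def prioritise(failures: list[dict]) -> list[dict]:
    """Order failing metrics by agreed severity, then by error count (largest first)."""
    return sorted(failures, key=lambda f: (SEVERITY_ORDER[f["severity"]], -f["errors"]))


failures = [
    {"metric": "lead email format", "severity": "low", "errors": 900},
    {"metric": "lead duplicates", "severity": "high", "errors": 1_200},
    {"metric": "order amount nulls", "severity": "high", "errors": 40},
]
print([f["metric"] for f in prioritise(failures)])
# ['lead duplicates', 'order amount nulls', 'lead email format']
```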

Step 4. Conduct root cause analysis

For all high-severity failures, trace the error back to its origin using the data lineage documentation gathered previously.

Here, the main goal is to identify the failure point:

- Is it a source system error (e.g. bad data entry)?

- Is it a pipeline error (e.g. a script is dropping columns or applying incorrect logic)?

- Is it a business process error (e.g. users aren’t following the data entry guidelines)?

The final deliverable for the end of this stage should be a log that maps each major data quality defect to its root cause.

Step 5. Develop the remediation plan

At this stage, the auditor works closely with the data engineering and data governance teams to create a plan to address failures.

Failures and fixes aren’t equal; some will take more time and effort than others.

- Data cleansing: Plan immediate, one-time fixes for the existing dirty data. For example, you can populate known missing values with a script, fix date formats, etc.

- Pipeline fixes: Identify the required code changes, source system improvements, or user training to prevent the failure from happening again.

- Ownership: Assign an owner and a deadline to each fix.

Step 6. Final audit report

Present the findings and the remediation plan to stakeholders and executive sponsors. The report should be structured around the impact that the fixes will bring, not just errors.

For example, 10% of records in the “Leads” table are duplicates, which leads to an estimated $50,000 per month in wasted ad spend.

As part of the report, schedule a re-audit to confirm that the fixes addressed the initial issues and improved the Data Quality Scorecard.

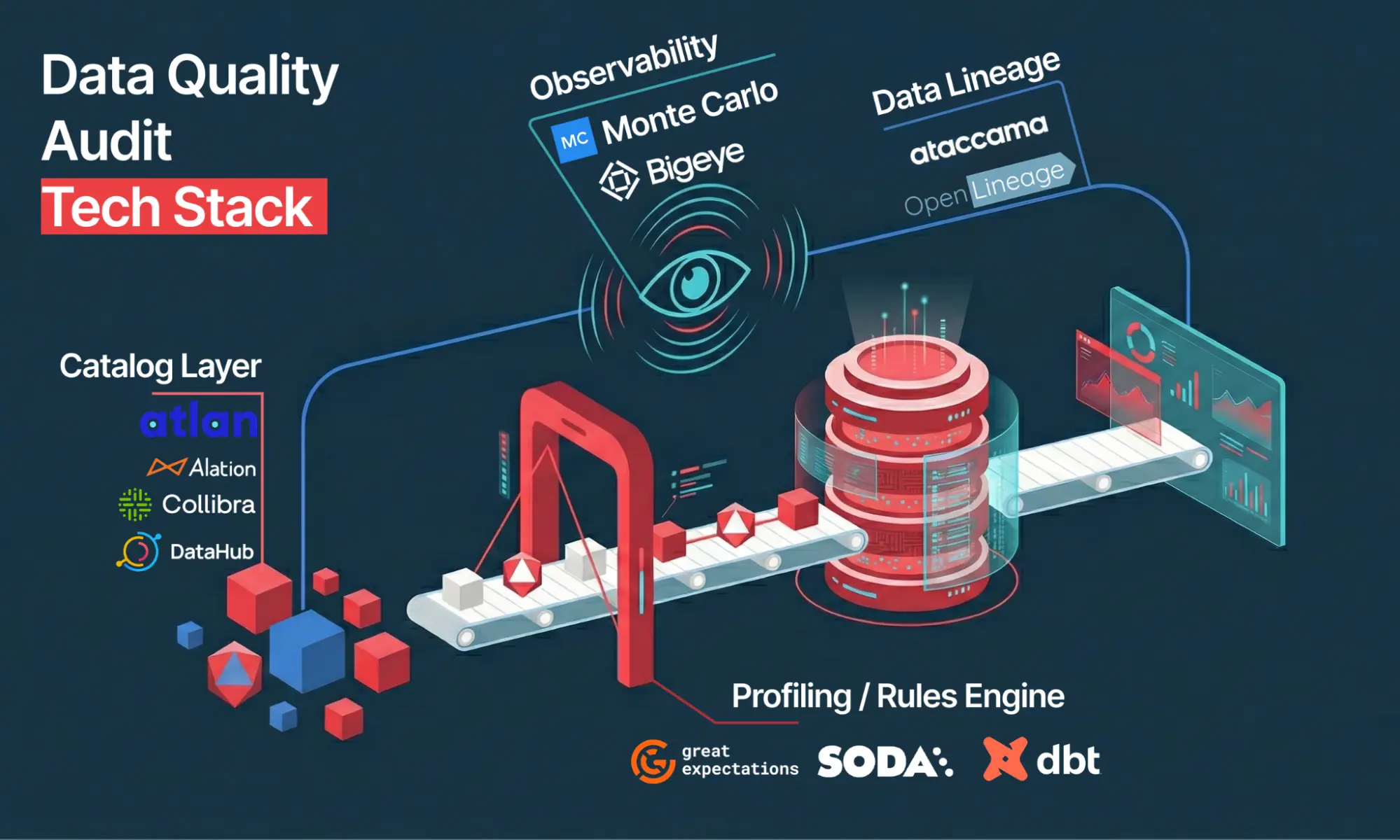

Technology Stack for Data Quality Audits

A comprehensive data quality audit requires more than just SQL queries. Often, it demands a stack that can shed light on invisible issues. Below, we’ll cover the core technologies that define a modern data quality audit toolkit.

The modern technology stack for data quality audits, integrating cataloging, profiling, observability, and lineage tools to detect and fix invisible data issues.

Data catalog and metadata management

A data catalog is your "map" for the audit. It tells you where data lives, who owns it, and what it should look like.

Tools:

- Atlan

- Alation

- Collibra

- DataHub (Open Source)

Catalogs provide a centralised business glossary and automatically tag sensitive data, making it easier to identify regulatory non-compliance and verify adherence to business rules.

For instance, Tide, a UK-based digital bank, used Atlan to automate the identification and tagging of GDPR-relevant data. This turned a process that previously took 50 days of manual work into just hours of automated, rule-based tagging, significantly accelerating their compliance audit cycles.

Profiling and data quality rules engines

These are the workhorses that test the data against your expectations. They scan tables to generate statistics and validate specific rules.

Tools:

- Great Expectations (Open Source)

- Soda

- dbt (built-in testing)

These tools automate the “sample inspection” part of the audit. Instead of writing 100 manual SQL queries, you can define and verify a batch of expectations.

Engineering teams often use Great Expectations to create "Data Docs", automatically generated reports that serve as an audit trail. A common pattern is to run these checks before data enters the warehouse (the "landing zone") so that bad data is flagged immediately, preventing pollution of downstream analytics.

Data lineage

Lineage tools visualise the flow of data. If an audit reveals an error in a dashboard, lineage tells you exactly which upstream system and table caused it.

Tools:

- Ataccama

- OpenLineage

- Select Star

These tools let you trace errors back through dozens of layers of SQL transformations to the raw source. Automated lineage tools also instantly highlight the “impact area”, showing that a single broken table actually affects 12 different reports and dashboards.
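
Under the hood, both questions are graph traversals: walking edges backwards finds root-cause candidates, and walking them forwards finds the impact area. A minimal sketch over a hypothetical lineage graph (the asset names are illustrative; lineage tools build and store this graph for you):

```python
from collections import deque

# Hypothetical lineage graph: each edge points from an upstream asset to a downstream one.
EDGES = [
    ("salesforce.leads", "raw.leads"),
    ("raw.leads", "staging.leads_clean"),
    ("staging.leads_clean", "marts.pipeline"),
    ("marts.pipeline", "dashboard.sales_funnel"),
    ("marts.pipeline", "dashboard.exec_kpis"),
    ("shopify.orders", "raw.orders"),
    ("raw.orders", "marts.pipeline"),
]


def walk(start: str, edges: list[tuple[str, str]], upstream: bool = False) -> set[str]:
    """Breadth-first traversal returning every asset reachable from `start`."""
    graph: dict[str, list[str]] = {}
    for src, dst in edges:
        a, b = (dst, src) if upstream else (src, dst)
        graph.setdefault(a, []).append(b)
    seen: set[str] = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:  # the `seen` set also guards against cycles
                seen.add(nxt)
                queue.append(nxt)
    return seen


# Root-cause candidates for a bad value in marts.pipeline:
print(sorted(walk("marts.pipeline", EDGES, upstream=True)))
# ['raw.leads', 'raw.orders', 'salesforce.leads', 'shopify.orders', 'staging.leads_clean']

# Impact area if raw.leads breaks:
print(sorted(walk("raw.leads", EDGES)))
# ['dashboard.exec_kpis', 'dashboard.sales_funnel', 'marts.pipeline', 'staging.leads_clean']
```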

Real-time monitoring and observability

Observability tools enable continuous auditing. They can detect volume anomalies or schema drift in real time and send alerts, reducing the time spent on future audits.

Tools:

- Monte Carlo

- Bigeye

- Metaplane

If your audit reveals that data is generally accurate but often late, an observability tool can track “freshness” SLAs automatically. For example, Calendly used Monte Carlo to catch and fix silent data failures in their pipelines. The team managed to reduce data-related bug tickets by 40%.

Read our guide where we reviewed and compared the top data quality tools on the market.

In-House Team vs. External Partners: Choosing the Right Audit Approach

For organisations that do not yet have established data quality processes, launching a comprehensive audit can feel like trying to repair a plane while flying it. The decision often comes down to a trade-off between building internal capabilities and expertise and hiring immediate external assistance.

The most effective approach depends on the scale of the audit, its strategic goal, and internal data engineering and data quality expertise.

The case for in-house: routine maintenance

Certain audits must live inside the organisation. Real-time monitoring and weekly operational checks can’t be outsourced effectively in the long run. In-house data engineers and analysts are closest to the business logic and they are the ones who need to spot that a marketing feed is down or that sales figures look unusual on a Tuesday morning.

However, if you are starting from zero, expecting your current team to suddenly build a robust auditing framework on top of their daily workload is a recipe for burnout and overlooked errors.

The case for partners: Strategic transformations and "Day One" setup

For high-stakes initiatives, such as AI implementation, cloud migration, or regulatory compliance audits, bringing in an experienced partner is usually the safer and more cost-effective choice.

External partners bring a proven roadmap and a fresh set of eyes. They have conducted assessments for other companies and know exactly where the skeletons in the closet usually hide, whether that’s biased training data for AI or non-compliant PII handling.

The cost and speed advantage

On the surface, hiring a consultancy seems more expensive than using internal staff. However, when building from the ground up, the hidden costs of an in-house approach add up quickly:

- Tooling costs: You have to evaluate, purchase, and configure the entire technology stack (catalogs, DQ tools) from scratch.

- Learning curve: Your team will spend weeks or months learning how to audit, rather than actually auditing.

External partners come equipped with their own technology stack and proprietary scripts. They can deploy their toolkit immediately, bypassing the months of setup required to build an internal framework.

The Hybrid Model: Audit as a training ground

The best engagement model for a company with no existing processes is to use the external partner as a catalyst for internal growth.

- The "Heavy Lift": The partner conducts the deep-dive strategic audit (e.g., AI Readiness) to identify risks and clean the data for the immediate project.

- The Setup: Instead of just handing over a PDF report, the partner sets up the infrastructure for continuous, real-time audits within your environment.

- The Handoff: The partner trains your internal team on how to interpret these alerts and conduct the smaller, weekly and quarterly data audits.

By the time the engagement ends, you will have audit results as well as a trained team that knows how to run future audits independently.

Planning to adopt AI? Start with a data quality audit.

Implementing AI is the ultimate stress test for your data infrastructure. While standard audits keep your daily reporting reliable, preparing for AI requires a significantly deeper level of preparation. Launching an AI initiative without a comprehensive, specialised audit is often the primary reason projects fail, resulting in models that hallucinate, drift, or simply cannot scale in production.

Vodworks bridges this gap with our specialised AI Readiness Assessment. We analyse your complete data maturity across technology, people, and processes. Our experts conduct a deep-dive review of your current architecture to ensure it can handle the computational and quality demands of modern AI. We identify specific bottlenecks, from "shadow data" silos to governance gaps, that standard internal audits might overlook, ensuring your infrastructure is robust enough to support advanced machine learning workloads.

Don’t let hidden data issues derail your innovation roadmap. Partner with Vodworks to get a clear, actionable improvement plan before you write a single line of model code.

Get in touch to discuss your AI use case and a practical path forward.
