AI Compliance: Navigating the Global Regulatory Landscape and Mitigating Fines

August 28, 2025 - 15 min read

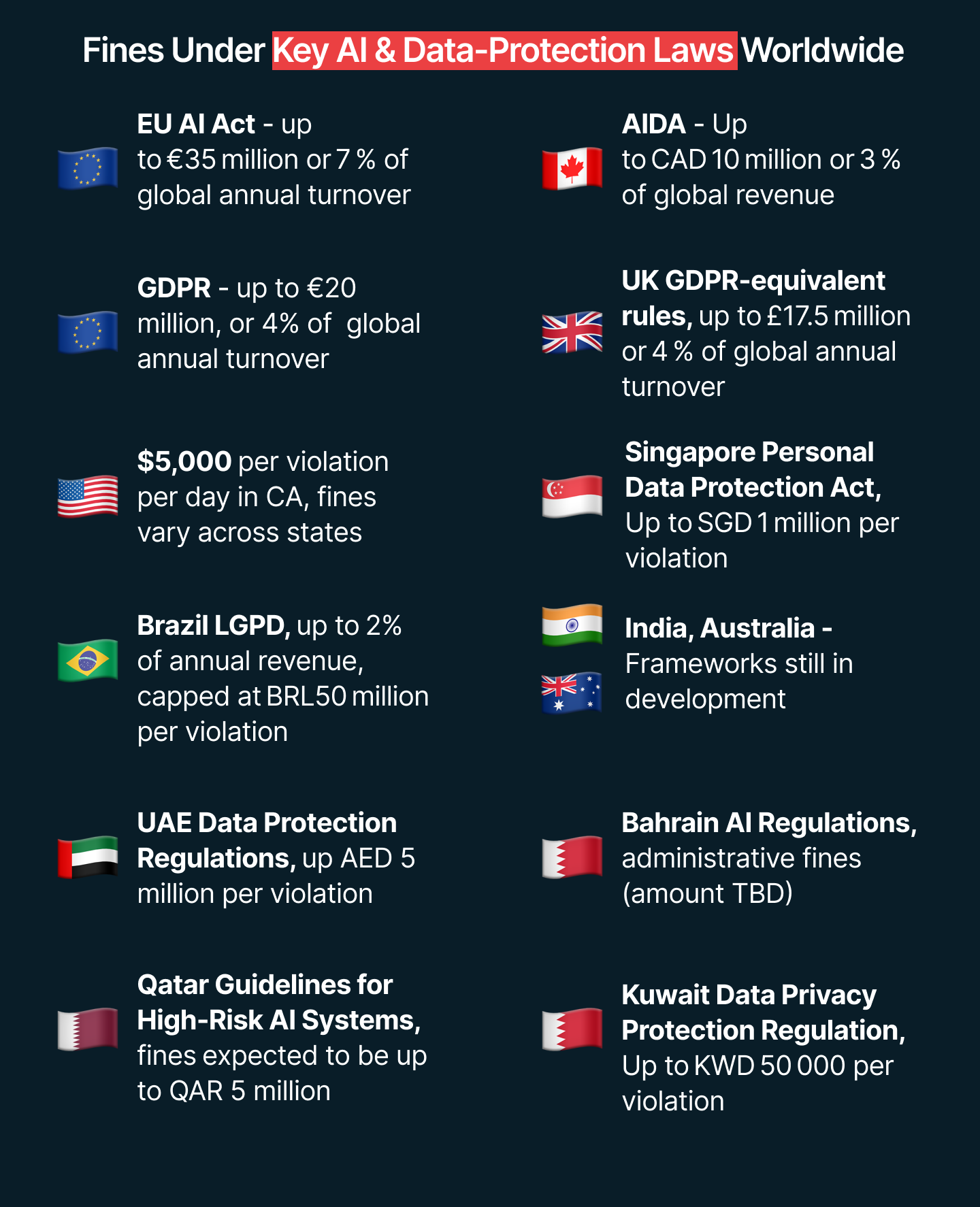

In an era where a flawed AI model or a breach of any one of dozens of regulations can cost €35 million and wipe out shareholder value overnight, mastering AI compliance is the surest path to sustainable growth.

In this article, we’ll map the regulatory terrain in key jurisdictions, highlight where AI rules are already in force and where they’re still taking shape, and outline practical steps companies can take to stay ahead in a rapidly evolving compliance landscape.

The information in this article is provided for general informational purposes only and does not constitute legal advice. Regulatory requirements vary by jurisdiction and evolve rapidly; you should consult qualified legal counsel to obtain advice tailored to your specific circumstances before making any decisions or taking any actions related to AI compliance.

What Is AI Compliance?

Artificial-intelligence compliance is the strategic discipline of ensuring that every model, dataset, and workflow you deploy aligns with legal mandates, ethical norms, and industry standards.

It is the organisational muscle that transforms AI risk into reliable, scalable innovation. At its core, AI compliance confirms that systems respect data-privacy rules such as GDPR, avoid discriminatory outcomes, remain transparent to regulators, and are robust against cyberattacks.

Because global statutes define obligations by role, four terms matter for every C-suite leader:

- Provider – the entity that develops or commercially releases an AI system.

- Deployer – the organisation that uses an AI system under its own authority.

- Importer/distributor – parties that bring systems to market or make them available in the supply chain.

- AI system – any machine-based tool that learns from input data and influences real-world environments.

Together, these definitions frame how responsibilities such as risk management, transparency, and market surveillance are allocated across the lifecycle.

Why AI Compliance Demands Board-Level Attention

Legal and Financial Exposure

The EU Artificial Intelligence Act levies fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher, for prohibited practices such as social scoring or untargeted facial-image scraping. Comparable enforcement powers are now surfacing in the United States, China, and the Middle East, turning non-compliance into an existential threat rather than a regulatory irritation.

Ethical Imperatives and Bias Risk

High-impact use cases—credit scoring, hiring, medical triage—can amplify historical bias if training data are skewed. Mitigation is now a fiduciary duty: the AI Act mandates documented bias testing and continuous monitoring for high-risk AI systems such as biometric identification or critical-infrastructure management.

Privacy and Cyber-Security

AI apps have become a major source of data leakage. According to the Zscaler 2025 Data@Risk report, AI apps such as ChatGPT, Copilot, and Claude have become significant data-loss sinks, with 4.2 million data loss violations recorded across all AI tools.

On the one hand, limited AI literacy poses risks: many people feed sensitive data to LLMs without considering the consequences.

On the other hand, AI widens the attack surface with new vectors, such as prompt injection, model exfiltration, and data poisoning. This opens new opportunities for cybercriminals.

Considering that the average cost of a data breach for a company is $4.88 million, security should be a non-negotiable board priority.
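
One way to reduce accidental leakage is to screen prompts before they leave the corporate boundary. The sketch below is a minimal illustration, not a complete data-loss-prevention control: the `redact_prompt` helper and its two regular-expression patterns (email addresses and card-like digit runs) are assumptions introduced here, and real rules would need far broader coverage.

```python
import re

# Hypothetical patterns for illustration only; real DLP rule sets are far broader.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}

def redact_prompt(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace matches with placeholders and count what was redacted."""
    counts = {}
    for label, pattern in PATTERNS.items():
        prompt, n = pattern.subn(f"[{label}_REDACTED]", prompt)
        counts[label] = n
    return prompt, counts

clean, counts = redact_prompt(
    "Email jane.doe@example.com about card 4111 1111 1111 1111"
)
```

The counts returned alongside the cleaned prompt can feed the same monitoring dashboards that track other data-loss events.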

Transparency and Accountability

Regulators are moving away from soft-law principles. In the EU Act alone, three hard requirements now define the compliance baseline for high-risk systems:

- Tamper-proof event logs

- Plain-language instructions explaining the model’s purpose, limits, and risks

- Human in the loop

However, these principles aren’t new. Back in 2019, France’s data protection authority, the Commission on Informatics and Liberty (CNIL), imposed a €50 million fine on Google for failing to give users a clear explanation of how its ad-personalisation algorithms process data. Regulators take transparency seriously, and opaque disclosures can ultimately lead to fines.

Consumers are paying attention as well: 86% of users prefer brands that publish transparent AI policies. Yet almost 40% of organisations flag AI explainability as the main risk, and only 17% say they’re actively mitigating it. If this gap persists, neither regulators nor consumers will overlook it.
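
The tamper-proof event logs listed above can be approximated with a hash chain, in which every entry's hash covers the hash of the entry before it. The following is a minimal sketch under stated assumptions: the function names and event fields are hypothetical, and the result is strictly tamper-evident rather than tamper-proof, since a production system would also need to anchor the latest hash in write-once storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry

def append_event(log: list[dict], event: dict) -> dict:
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    payload = json.dumps(event, sort_keys=True)
    digest = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
    entry = {"event": event, "prev_hash": prev_hash, "hash": digest}
    log.append(entry)
    return entry

def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        payload = json.dumps(entry["event"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True

# Hypothetical events for illustration.
audit_log: list[dict] = []
append_event(audit_log, {"actor": "model-v1", "action": "score", "decision": "approve"})
append_event(audit_log, {"actor": "reviewer-7", "action": "override", "decision": "reject"})
```

Calling `verify_chain(audit_log)` after any retrospective edit to an earlier event returns `False`, which is the property an auditor would check.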

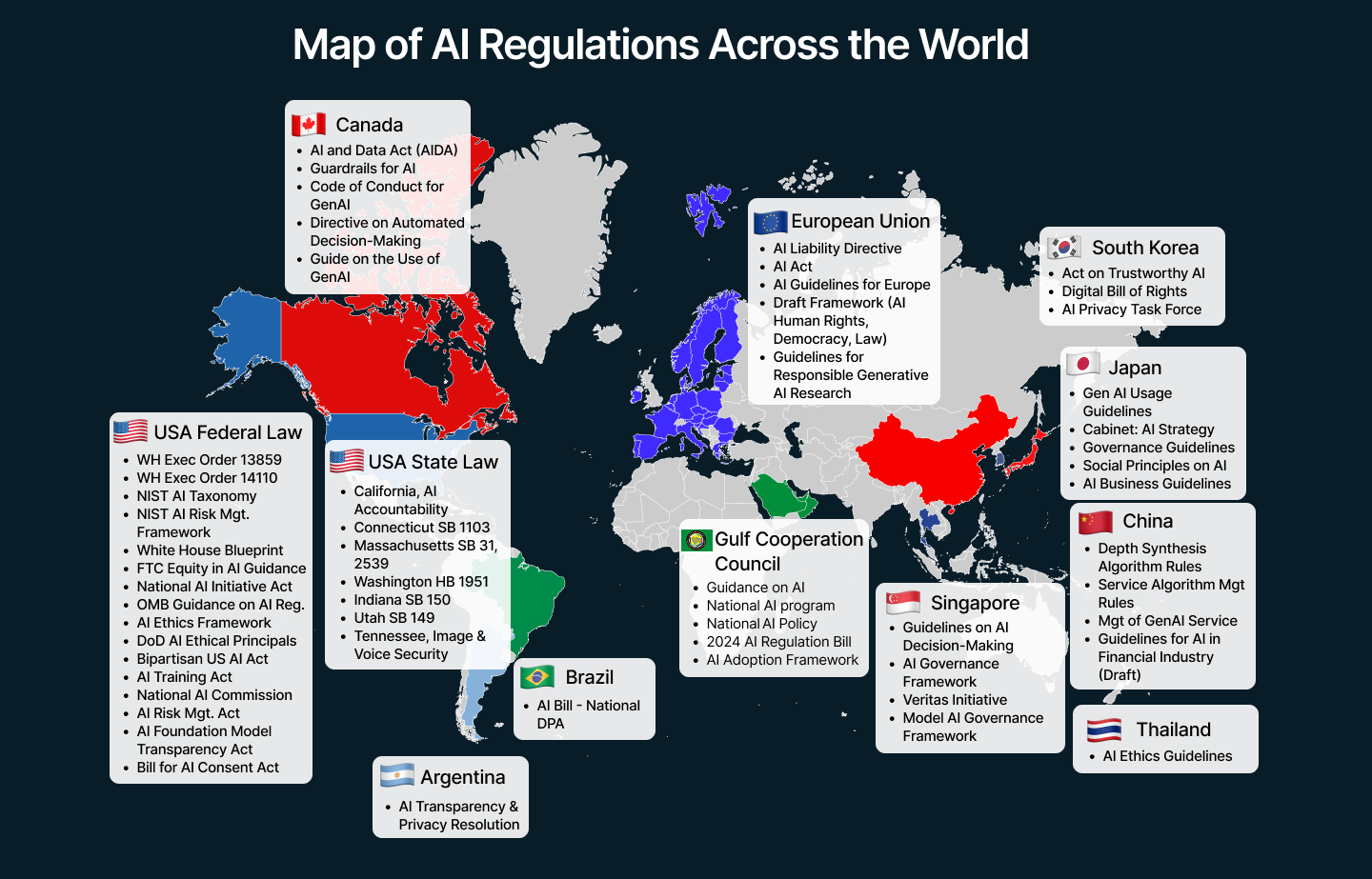

The Global Regulatory Picture

The EU Artificial Intelligence Act (✅ Comprehensive AI regulation)

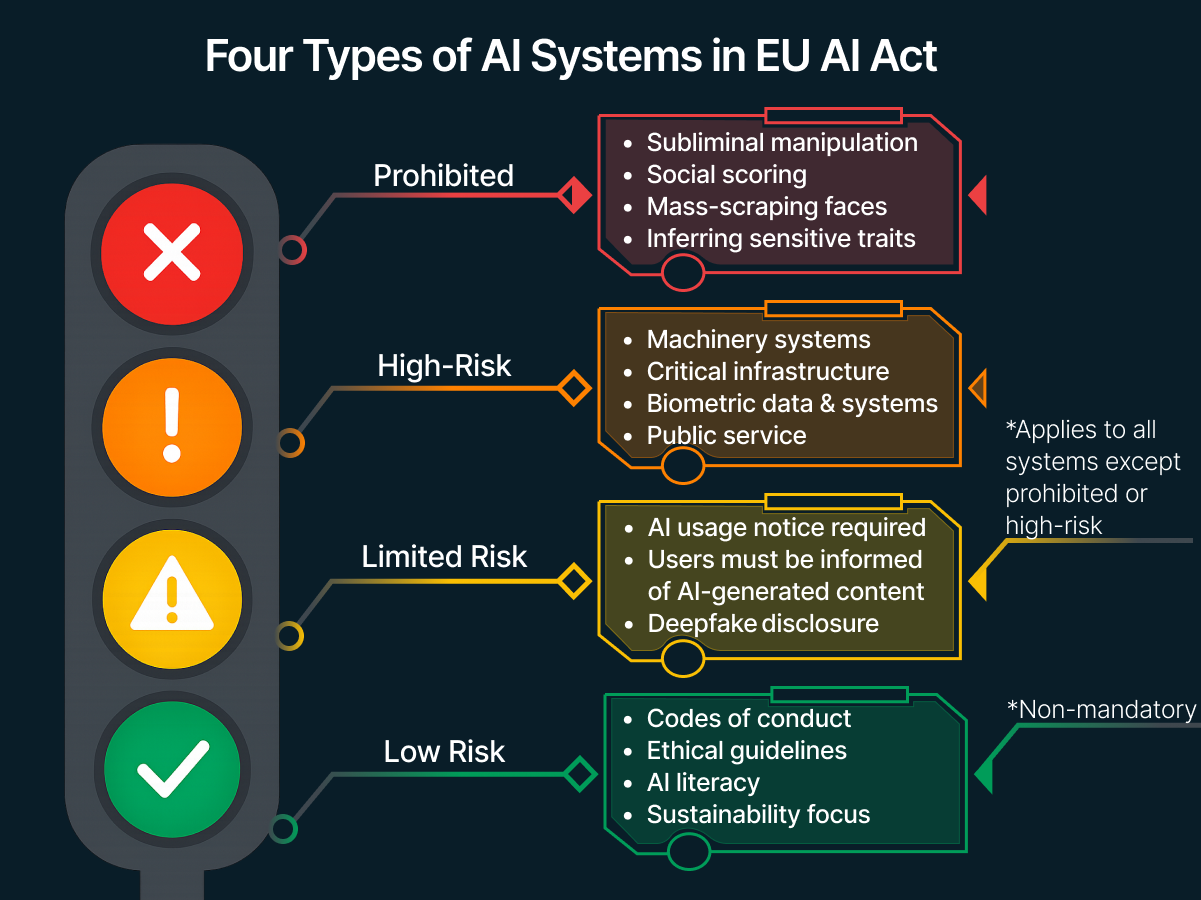

The world’s first comprehensive AI statute was published in the EU’s Official Journal on 12 July 2024, entered into force on 1 August 2024, and will apply in most respects from 2 August 2026. It classifies systems by risk tier:

Prohibited

- AI systems deploying subliminal or purposefully manipulative techniques.

- AI systems exploiting vulnerabilities to materially distort behaviour and cause harm.

- AI systems for social scoring of natural persons based on social behaviour or personal characteristics.

- Risk assessment of natural persons to predict the likelihood of criminal offences based solely on profiling or personality traits.

- AI systems that create and populate facial recognition databases through untargeted scraping of facial images from the internet or CCTV footage.

- AI systems that are intended to infer the emotions of natural persons in the workplace and educational institutions, unless for medical or safety reasons.

- Biometric systems categorising individuals based on biometric data to deduce or infer sensitive attributes, such as race, political opinions, religious beliefs, sexual orientation, etc.

High-Risk Systems

High-risk AI systems are those with a significant harmful impact on the health, safety, and fundamental rights of people. An AI system is classified as high-risk if it is:

- Intended to be used as a safety component of a product, or itself a product covered by specific Union harmonisation legislation listed in Annex I, and is required to undergo a third-party conformity assessment. Examples include machinery, medical devices, toys, lifts, and automotive equipment.

- Standalone AI systems used in specific predefined areas where they pose a high risk of harm to health, safety, or fundamental rights, considering the severity and probability of harm, such as biometric systems, critical infrastructure, education, law enforcement, public service, etc.

Limited-Risk Systems

AI systems classified under a "limited risk" profile are not subject to the extensive, mandatory requirements of high-risk systems; however, they must adhere to transparency rules to ensure users are aware of their interaction with AI and the nature of the content generated or inferred.

Some of these rules include:

- Providers must ensure that natural persons interacting directly with an AI system are informed that they are doing so.

- Providers of AI systems that generate synthetic audio, image, video, or text content must ensure that the output is marked in a machine-readable format and detectable as artificially generated.

- Deployers of AI systems that generate or manipulate text for the purpose of informing the public on matters of public interest must disclose that the text has been artificially generated.

- Deployers of AI systems that generate or manipulate image, audio, or video content to create a "deepfake" must clearly and distinguishably disclose that the content has been artificially created or manipulated.
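
The machine-readable marking duty above can be pictured as a metadata envelope that travels with each generated artefact. This is only a sketch: the field names `ai_generated` and `generator` are hypothetical, the Act does not prescribe this schema, and real deployments may rely on watermarking or provenance standards instead.

```python
import json
from datetime import datetime, timezone

def label_output(content: str, generator: str) -> str:
    """Wrap generated content in a JSON envelope carrying an AI-generated flag."""
    envelope = {
        "content": content,
        "ai_generated": True,      # hypothetical field name
        "generator": generator,    # hypothetical field name
        "created_at": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(envelope)

def is_ai_generated(serialized: str) -> bool:
    """Let a downstream system detect the flag without interpreting the content."""
    return json.loads(serialized).get("ai_generated", False) is True

record = label_output("Quarterly summary drafted by the assistant.", "internal-llm-v2")
```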

Minimal-Risk Systems

- Providers of AI systems that are not high-risk are encouraged to create codes of conduct.

- Providers and, where appropriate, deployers of all AI systems (high-risk or not) and AI models are encouraged to voluntarily apply additional requirements, such as ethical guidelines for trustworthy AI, considerations for environmental sustainability, measures promoting AI literacy, etc.

- For minimal-risk systems, Regulation (EU) 2023/988 on general product safety applies as a "safety net" to ensure that such products are safe when placed on the market or put into service.
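
The four tiers above lend themselves to a first-pass triage of an AI inventory. The sketch below assumes a hypothetical `triage` function and small keyword sets drawn from the examples in this section; it is an illustration of the ordering logic (most severe tier first), not a legal classification, which depends on the Act's annexes and case-by-case analysis.

```python
from enum import Enum

class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited-risk"
    MINIMAL = "minimal-risk"

# Illustrative labels taken from the examples above; not an exhaustive legal mapping.
PROHIBITED_USES = {"social scoring", "untargeted facial scraping", "workplace emotion inference"}
HIGH_RISK_AREAS = {"biometrics", "critical infrastructure", "education", "law enforcement", "medical device"}
TRANSPARENCY_USES = {"chatbot", "synthetic media", "deepfake"}

def triage(use_case: str) -> RiskTier:
    """Return a first-pass tier; checks run from most to least severe."""
    if use_case in PROHIBITED_USES:
        return RiskTier.PROHIBITED
    if use_case in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if use_case in TRANSPARENCY_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL

# Hypothetical inventory entries.
inventory = ["social scoring", "education", "chatbot", "spam filter"]
tiers = {name: triage(name) for name in inventory}
```

Anything that falls through to the minimal tier in a triage like this still deserves a manual check, because the keyword sets are deliberately narrow.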

Providers of General-Purpose AI Models (GPAIMs) also have to comply with the Act and operate with the transparency and control expected of any other vendor. Here are some of the baseline duties:

- Maintain a living technical dossier: capture architecture, parameter count, training-data provenance, energy use, evaluation metrics, acceptable-use limits, and release history. The full file should be supplied to the EU AI Office or national authorities on request.

- Embed copyright safeguards: enforce a Directive 2019/790-compliant training policy; publish a template-based public summary of training content so right-holders can verify use.

- Demonstrate and document compliance: follow harmonised standards or recognised codes of practice; co-operate with regulators’ information requests; open-source models may skip the full dossier and integrator pack but must still meet copyright policy and training summary duties unless classed as systemic risk.

- Extra obligations for systemic-risk models (≥ 10²⁵ FLOPs or other Annex XIII triggers): conduct state-of-the-art evaluations and red-team tests before and after release; run continuous Union-level risk assessment and mitigation; report serious incidents to the AI Office without delay; harden the model and infrastructure against adversarial attacks, data poisoning and theft.
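
To get a feel for the 10²⁵ FLOPs threshold mentioned above, training compute can be roughly estimated from model size and dataset size. The sketch below assumes the common rule of thumb of about 6 FLOPs per parameter per training token for dense transformer models; that heuristic is not part of the Act, and the model sizes are hypothetical.

```python
SYSTEMIC_RISK_FLOPS = 1e25  # threshold cited above

def estimate_training_flops(parameters: float, tokens: float) -> float:
    """Rough heuristic (an assumption): about 6 FLOPs per parameter per token."""
    return 6.0 * parameters * tokens

def exceeds_threshold(parameters: float, tokens: float) -> bool:
    """True when the estimated training compute reaches the threshold."""
    return estimate_training_flops(parameters, tokens) >= SYSTEMIC_RISK_FLOPS

# Hypothetical models, for illustration only.
small = estimate_training_flops(7e9, 2e12)      # 7B parameters, 2T tokens
large = estimate_training_flops(1e12, 1.5e13)   # 1T parameters, 15T tokens
```

Under this heuristic the smaller model lands more than two orders of magnitude below the threshold, while the larger one exceeds it.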

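Penalties for non-compliance are harsh. Beyond the €35 million or 7% ceiling for prohibited practices, breaches of most other obligations carry fines of up to €15 million or 3% of global turnover, plus potential withdrawal from the EU market.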
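
The fine ceilings quoted in this article can be turned into a quick exposure estimate. The sketch below assumes the "whichever is higher" rule that applies to larger undertakings (SMEs are treated more leniently), and the tier names and `max_fine` helper are hypothetical labels introduced for illustration.

```python
FINE_TIERS = {
    # tier: (fixed cap in euros, share of worldwide annual turnover)
    "prohibited_practice": (35_000_000, 0.07),
    "other_obligation": (15_000_000, 0.03),
}

def max_fine(tier: str, annual_turnover_eur: float) -> float:
    """Upper bound of the fine: the higher of the fixed cap and the turnover share."""
    fixed_cap, share = FINE_TIERS[tier]
    return max(fixed_cap, share * annual_turnover_eur)

# Hypothetical companies with EUR 2 billion and EUR 100 million turnover.
large_prohibited = max_fine("prohibited_practice", 2_000_000_000)
large_other = max_fine("other_obligation", 2_000_000_000)
small_prohibited = max_fine("prohibited_practice", 100_000_000)
```

For the €2 billion company the turnover share dominates (roughly €140 million and €60 million), whereas for the €100 million company the fixed €35 million cap is the binding figure.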

The overlap with GDPR

The AI Act builds on GDPR rather than replacing it. Data minimisation, lawful basis, and DPIA requirements still apply, with extra transparency duties for high-risk models. Because the two regimes apply in parallel, a single failure can expose an organisation to penalties under both.

Bottom line: The EU has established the world’s most comprehensive, risk-tiered AI rulebook, robust enough to curb harmful or opaque systems, yet flexible enough to let responsible innovation thrive.

The US AI Legislative Landscape (🧩 Fragmented regulation, laws vary across states)

While the European Union has introduced AI frameworks, the United States is still in the process of developing its own AI regulations.

Despite the lack of a single overarching law, several key legislative measures and principles are in place.

National Artificial Intelligence Initiative Act of 2020

Purpose: This Act directs AI research, development, and assessment across federal science agencies.

Impact: It established the National AI Initiative, which coordinates AI activities across federal institutions.

AI in Government Act & Advancing American AI Act

Purpose: These acts direct federal agencies to advance AI programs and policies.

Impact: They promote AI adoption within government operations.

Blueprint for an AI Bill of Rights

These principles serve as a framework for responsible AI development and use, focusing on:

1. Safe and effective systems: AI systems must be rigorously tested and continuously monitored.

2. Protection against algorithmic discrimination: Safeguards are to be put in place to prevent unjustified bias and discrimination by AI systems.

3. Protection against abusive data practices: Users are empowered with control over their data when AI systems are involved.

4. Transparency: Users should be informed about the use of AI and its potential effects.

5. Opt-out and human review: Individuals should have options to opt out of AI systems and seek human intervention when AI-driven decisions significantly impact them.

National Institute of Standards and Technology AI Risk Management Framework (AI RMF)

The NIST AI Risk Management Framework (AI RMF) is a voluntary, flexible guide that helps organisations “identify, measure, manage, and monitor” AI risks throughout the system lifecycle.

It translates high-level principles, such as explainability, fairness, and security, into practical activities, checklists, and maturity tiers. By embedding AI RMF into governance, you gain a repeatable process for threat modelling, bias testing, and post-deployment oversight, ensuring your AI initiatives remain trustworthy, resilient, and aligned with evolving regulatory expectations.

The Biden Administration’s Executive Order 14110 (Rescinded)

This EO directed every major federal agency to hard-wire “safe, secure and trustworthy” AI practices into policy and procurement.

It ordered the creation of chief AI officers, expanded NIST’s risk-management guidelines, and tasked DHS, Commerce and other departments with watermarking, cybersecurity and critical-infrastructure defences.

The EO also sought to spur competition, protect civil and labour rights, and cement America’s global AI leadership while curbing misuse such as discrimination, IP theft and deepfakes. Widely considered Washington’s most comprehensive AI-governance action to date, it was rescinded hours after President Trump’s 20 Jan 2025 inauguration.

Sector-specific rules, such as HIPAA in healthcare and FCRA in consumer credit, layer additional duties on regulated sectors including healthcare, finance, and education.

Bottom line: The US still runs on a patchwork of agency mandates, sector statutes and voluntary frameworks rather than a single AI law. The rescission of Executive Order 14110 underlines that federal direction can swing with each administration.

Until Congress codifies a comprehensive regime, executives must treat NIST’s AI RMF, emerging agency rules and the Blueprint for an AI Bill of Rights as the de facto standard, building internal governance now so they can pivot swiftly when formal legislation finally lands.

UK AI Regulation (🧩 Fragmented regulation, principles‑based, sector‑led; no single binding AI law yet)

The UK’s AI regulatory strategy combines flexibility and pro-innovation with a clear commitment to public trust and risk management. Rather than a single, prescriptive law, the UK relies on cross-sectoral principles, delivered initially on a non-statutory basis, and enforced by existing regulators in their respective domains.

At its core are five guiding principles (as per AI Regulation White Paper, Aug 2023):

- Safety, Security & Robustness: AI systems must function reliably, resist cyber-threats, and undergo continuous risk assessment in line with strong privacy and security practices.

- Transparency & Explainability: Providers should supply proportionate information on purpose, data sources, decision logic and performance—potentially via product-labelling—to ensure users and regulators understand system behaviours.

- Fairness: Systems must comply with the Equality Act 2010 and UK GDPR, avoiding discrimination and unfair market outcomes through rigorous bias-testing and data governance.

- Accountability & Governance: Organisations need clear oversight structures and expert governance bodies to assign responsibility across the AI lifecycle.

- Contestability & Redress: Individuals and affected parties must be able to challenge AI-driven decisions and access accessible remedies, whether through formal or informal channels.

Instead of creating new AI regulators, the UK empowers sector-specific authorities, including the FCA, ICO, Ofcom and CMA, to interpret these principles under their existing mandates. Initially non-binding, the framework may later become binding once Parliament allocates time.

Central coordination is provided by the AI Policy Directorate within DSIT, which monitors framework efficacy, horizon-scans emerging risks, supports regulatory sandboxes, and promotes international interoperability.

The White Paper’s functional definition of AI, which focuses on ‘adaptivity’ and ‘autonomy’, aims to remain future-proof. However, a March 2025 Private Member’s Bill proposes more granular definitions for “AI” and “generative AI” and would establish a standalone AI Authority.

Less formal signals point the same way. The King’s Speech in July 2024 hinted at binding rules for the most powerful models, and the Labour government’s AI Opportunities Action Plan (Jan 2025) sets out investments in compute, data libraries, and AI Growth Zones, alongside proposals to enshrine an AI Safety Institute as a statutory body. Complementary engagement with the OECD, G7 and Council of Europe helps keep the UK’s approach aligned with global best practice.

Bottom line: By regulating context-specifically rather than by technology class, the UK framework encourages responsible innovation. However, the government’s official statutory framework and comprehensive AI Regulations Act remain in draft form, with “due regard” duties and any binding legislation still awaiting parliamentary time.

AI Governance Across the Middle East

The Gulf Cooperation Council (GCC) has embraced AI as a strategic lever for economic diversification and public-service innovation. Yet rather than a one-size-fits-all law, each member state is charting its own course—blending national strategies, soft-law principles and sectoral directives to balance rapid adoption with ethical guardrails.

UAE: Pioneering the Strategy (🧩 Fragmented, strategy + sector and data‑protection rules, no single AI statute)

- National AI Strategy 2031: the document outlines the plans to embed AI across healthcare, education and transport, supported by high-level AI Ethics Principles and an AI Charter on transparency, accountability and human oversight.

- Legal scaffolding includes the Federal Law on the Project of the Future Nature and Abu Dhabi’s Advanced Technology Council law—granting broad powers to regulate emerging AI projects—and data-protection under the 2021 Personal Data Law and DIFC’s 2023 autonomous-systems rule.

Saudi Arabia (🧩 Fragmented frameworks + PDPL, but no dedicated AI law)

- Saudi Arabia’s AI Adoption Framework offers a roadmap for integrating AI responsibly across sectors, complemented by Generative AI Guidelines for government and private actors.

- Although no dedicated AI statute exists, the Personal Data Protection Law (fully in force) extends robust privacy controls to AI pipelines, with detailed guidance notes clarifying compliance for automated processing.

Qatar (🧩 Fragmented finance‑specific AI rules, general strategy, no umbrella act)

- Qatar’s 2019 National AI Strategy is notable for its legally binding AI Guidelines governing AI use by Qatar Central Bank–licensed financial institutions, imposing oversight and governance duties on trading algorithms, credit-scoring models and robo-advisors.

Bahrain (🚧 In draft: April 2024 AI Regulation Bill not yet enacted)

- Bahrain’s April 2024 draft AI Regulation Law would be the region’s first to define licensing, civil liability and administrative fines for non-compliance.

Oman (🚧 In draft: National AI Policy still in consultation)

- Oman’s National AI Policy (in public consultation) promises binding principles on privacy, transparency, and accountability once finalised.

Outlook for the Region: The GCC’s fragmented model reflects both the urgency of AI-driven growth and caution over potential harms. As generative AI accelerates and cross-border data flows expand, formal legislation becomes a top priority. Businesses must stay agile: implement best practices now under existing guidelines and anticipate a more unified, enforceable regime in the next legislative cycle.

International Standards

- ISO/IEC 42001:2023 defines an AI Management System, the governance analogue to ISO 27001 for information security.

- ISO/IEC 23894:2023 provides guidance on managing risks associated with AI systems, helping organisations identify, assess, and mitigate potential risks related to AI algorithms and models.

- ISO/IEC 22989:2022 outlines terminology and concepts related to AI, providing a common understanding of AI-related terms.

Strategic Challenges on the Road to Compliance

The Tech is Moving Much Faster than Governance Programmes

The mismatch in the pace of tech and rule-making forces companies to hit compliance goals in a field that is constantly changing.

Even the basic concepts, such as “What counts as AI?” vary by jurisdiction, complicating risk classification and due diligence checklists.

Meanwhile, AI obligations also overlap with data privacy, intellectual property, and cybersecurity laws, further complicating assessments for companies.

Complex AI supply chains add another knot of complexity: Who is responsible when an autonomous model built on open-source components goes wrong?

All of these questions and complexities will hopefully be addressed once the regulatory landscape settles down. For now, the only durable defence is robust internal governance, namely continuous horizon-scanning, dynamic risk management, and cross-functional expertise that can pivot as each new statute or regulator redefines the landscape.

AI Systems Need to be Hardened Against Modern Threat Vectors

AI systems operate in a high-threat landscape. They absorb vast, sensitive datasets and make autonomous decisions, traits that magnify existing threats and spawn new attack vectors.

Malicious actors also make use of LLMs. With AI, they can auto-generate flawless phishing campaigns, while poor access controls let them exfiltrate training corpora or tamper with model weights.

Core vulnerabilities include:

- Data poisoning (corrupting training sets)

- Adversarial inputs (tricking models at inference)

- Shadow AI (rogue tools deployed outside corporate security stacks)

Multi-cloud deployments further widen the blast radius.

Regulators are responding:

- The EU AI Act mandates “appropriate cybersecurity” for high-risk systems.

- The UK’s principle of “safety, security and robustness” urges continuous penetration testing and model-risk reviews.

- ISO/IEC 27001, 31700 and the new AI-specific ISO/IEC 42001 provide blueprints for layered defences: encryption of model artefacts, strict entitlements, red-team drills and post-deployment monitoring.

The ball is now in the court of companies and their teams to follow these regulations and safety protocols.

However, it is doubtful that every AI vendor will comply, as it’s virtually impossible to hold all companies accountable. Thousands of small startups are building products around LLMs, and it’s not unusual for mid-sized and large companies to use those products without understanding their security infrastructure or even registering them with the IT department.

In this situation, data leaks and safety issues are highly likely, so it’s essential to verify the AI vendors your teams use, understand what data is sent to them, and confirm that each vendor or tool is on your IT team’s radar.
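
The vendor check described above can start as a simple comparison between observed tool usage and an approved-vendor registry. The following is a sketch under stated assumptions: the registry contents, tool names, data classes, and the `review_usage` helper are all hypothetical, and real usage data would come from network or SaaS-management telemetry.

```python
# Hypothetical registry of tools vetted by IT; entries are illustrative.
APPROVED_VENDORS = {
    "chat-assistant": {"data_allowed": {"public", "internal"}},
    "code-helper": {"data_allowed": {"public"}},
}

def review_usage(tool: str, data_class: str) -> str:
    """Classify one observed usage as ok, a data-policy violation, or shadow AI."""
    entry = APPROVED_VENDORS.get(tool)
    if entry is None:
        return "shadow_ai"          # tool is not on IT's radar at all
    if data_class not in entry["data_allowed"]:
        return "data_violation"     # known tool, but this data class is not permitted
    return "ok"

# Hypothetical observations: (tool, classification of the data sent to it).
observed = [
    ("chat-assistant", "internal"),
    ("code-helper", "confidential"),
    ("notes-bot", "public"),
]
findings = {tool: review_usage(tool, data) for tool, data in observed}
```

Separating "unknown tool" from "known tool, wrong data" matters because the remediation differs: the first calls for vendor due diligence, the second for user training or technical controls.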

The Black Box of Algorithmic Bias and Ethical Risks

AI systems inherit the prejudices of their training sets. If training data is biased towards a certain group of people, objects, or events, models will replicate and amplify those patterns.

We conducted five experiments to see how bad data influences LLMs’ behaviour. Read this article to learn more about the influence of bad data.

That is why CV-screeners have sidelined female candidates and loan engines have penalised borrowers of colour.

The deeper danger is opacity: high-capacity models discover correlations invisible to humans, making it hard to understand causation or assign accountability when decisions go wrong.

Regulators understand the importance of this problem and mandate bias-tested, statistically representative datasets, exhaustive technical documentation, and human-in-the-loop oversight for every high-risk system.

Forward-thinking firms should now treat bias mitigation like cybersecurity, as a continuous, measurable, board-level critical process.
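
Treating bias as measurable starts with a concrete metric. One simple option is the ratio of selection rates between two groups, sketched below. The outcome data is made up, and the 0.8 cut-off is an illustrative threshold borrowed from the "four-fifths" rule of thumb used in US employment contexts, not a requirement stated in this article's sources.

```python
def selection_rate(outcomes: list[int]) -> float:
    """Share of positive decisions (1 = selected, 0 = rejected)."""
    return sum(outcomes) / len(outcomes)

def disparate_impact_ratio(group_a: list[int], group_b: list[int]) -> float:
    """Ratio of the lower selection rate to the higher one (1.0 means parity).

    Assumes at least one group has a non-zero selection rate.
    """
    rate_a, rate_b = selection_rate(group_a), selection_rate(group_b)
    return min(rate_a, rate_b) / max(rate_a, rate_b)

# Made-up screening outcomes for two applicant groups.
group_a = [1, 1, 1, 0, 1, 0, 1, 1, 0, 1]   # 7 of 10 selected
group_b = [1, 0, 0, 1, 0, 0, 1, 0, 0, 0]   # 3 of 10 selected

ratio = disparate_impact_ratio(group_a, group_b)
flagged = ratio < 0.8  # illustrative threshold (an assumption)
```

A single ratio is only a starting point; tracking it per release and per segment is what turns it into the continuous process described above.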

Eleven Best-Practice Pillars for Enterprise AI Compliance

- Embed Governance at Board Level. Establish an AI Ethics & Compliance Committee with clear reporting lines to the audit committee.

- Adopt Continuous Risk Management. Operationalise NIST’s four-phase incident-response loop (Preparation → Detection and Analysis → Containment, Eradication, and Recovery → Post-incident Activity), extending it to adversarial-robustness and data-lineage threats.

- Elevate Data Quality. Use representative, bias-tested datasets; employ synthetic data when real sets pose privacy or copyright risks.

Unsure how to make your data AI-ready? Start with our guide on Six Pillars of Data Readiness.

- Guarantee Transparency. Maintain comprehensive technical documentation and disclose AI involvement to users, especially for video generation and facial-recognition deployments.

- Keep Humans in the Loop. High-risk models must allow override, pause, or rollback by authorised personnel.

- Engineer for Cyber-Resilience. Follow NCSC/CISA “secure-by-design” principles to mitigate prompt injection, model theft, and supply-chain exploits.

- Automate Logging and Traceability. Continuous record-keeping simplifies post-market monitoring and supports liability defence.

- Upskill the Workforce. AI literacy programmes satisfy AI Act Article 4 obligations and close the Gartner-identified trust gap.

Ready to turn your people into AI innovators? Get the Workforce Upskilling Checklist in this article and start the transformation today.

- Use Regulatory Sandboxes. Test novel algorithms under supervisory oversight to accelerate market entry without legal overhang.

- Engage with the AI Office and National Authorities. Early dialogue clarifies expectations on conformity assessment, market surveillance, and systemic-risk reporting.

- Publish Voluntary Codes of Conduct. For non-high-risk use cases, codes on environmental impact, inclusivity, and stakeholder participation future-proof your portfolio.
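
The "Keep Humans in the Loop" pillar above can be enforced in code as a gate that blocks certain decisions until a reviewer signs off. The sketch below is one possible shape, assuming a hypothetical policy in which adverse or low-confidence outcomes require review; the `Decision` fields, the 0.90 confidence floor, and the reviewer identifiers are all illustrative.

```python
from dataclasses import dataclass
from typing import Optional

CONFIDENCE_FLOOR = 0.90  # hypothetical policy value

@dataclass
class Decision:
    subject_id: str
    model_outcome: str
    confidence: float
    final_outcome: Optional[str] = None
    decided_by: str = "model"

def needs_review(decision: Decision) -> bool:
    """Route adverse or low-confidence outcomes to a human reviewer."""
    return decision.model_outcome == "reject" or decision.confidence < CONFIDENCE_FLOOR

def finalise(decision: Decision, reviewer: Optional[str] = None,
             override: Optional[str] = None) -> Decision:
    """Apply the model outcome, unless review is required and no reviewer signed off."""
    if needs_review(decision):
        if reviewer is None:
            raise PermissionError("human review required before this decision takes effect")
        # The reviewer may confirm the model outcome or replace it.
        decision.final_outcome = override or decision.model_outcome
        decision.decided_by = reviewer
    else:
        decision.final_outcome = decision.model_outcome
    return decision

auto = finalise(Decision("a1", "approve", 0.97))
overridden = finalise(Decision("a2", "reject", 0.99), reviewer="analyst-3", override="approve")
```

Recording `decided_by` on every decision also supports the logging and traceability pillar, since it shows who was accountable for each outcome.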

Fast‑Track Your AI Compliance with Vodworks

If AI compliance is slowing your AI adoption roadmap, Vodworks can help. Our AI Readiness Package aligns your data, models, and people with the very rules discussed above, so you can launch with confidence instead of legal uncertainty.

Focused discovery workshop

- Pinpoint high‑risk use cases and quick‑win mitigations.

- Map current data flows against obligations like GDPR, ISO 42001, and the EU AI Act.

- Audit existing AI experiments for red‑flag compliance issues.

Data layer

Our team examines data quality, lineage, security controls, and whether your current platform can scale. Demonstrating strong governance here shows regulators that you collect only what is lawful and necessary, protect it with robust cybersecurity, and can trace every record back to its source.

Team layer

We review the org chart and run a skills‑matrix, role‑by‑role gap analysis. This proves you have qualified people “in the loop”, clearly assigned responsibilities, and the documented accountability regulators expect for high‑risk AI activities.

Governance layer

We scrutinise policies, privacy safeguards, continuous monitoring, and tamper‑proof audit trails. Solid governance satisfies transparency obligations, underpins risk‑management requirements, and supports the post‑market‑surveillance duties embedded in modern AI regulations.

Board‑ready deliverables

You get a maturity scorecard, gap‑by‑gap action plan, and grounded cost & timeline estimates, everything the board needs to green‑light the next phase and prove due diligence to regulators.

Option to execute

If you choose, you can keep the same specialists on hand to:

- Build secure data pipelines and vaults for sensitive or copyrighted assets.

- Stand up ML‑Ops and compliance‑automation workflows that generate tamper‑proof logs.

- Ship your first production model on schedule—already audit‑ready.

Book a 30‑minute compliance strategy call with our AI solution architect. We’ll review your use case, data estate, and team readiness, then outline next steps tailored to your AI maturity level.
