Before You Bet Big on AI: Validating Ideas With A 4-Week AI PoC Sprint

October 9, 2025 - 15 min read

A lot of teams have “done something with AI” lately. Teams organise hackathons, upskilling programs, demos, pilots, and so on. However, very few projects actually reach real users.

While ~75% of businesses have experimented, only ~9% report enterprise-grade deployments, leaving many stranded in production purgatory, stuck between a flashy demo and a reliable system that customers and auditors can trust.

An AI Proof of Concept (PoC) is how you break that stalemate: a time-boxed, low-risk experiment that proves a specific AI approach can hit a measurable business target on representative data.

In this article, we’ll clarify what an AI PoC is, how it differs from PoV/Pilot/MVP, why PoCs fail, how to scope one properly, and a practical four-week sprint plan to validate your idea and escape production purgatory.

What Is an AI PoC?

An AI PoC (Proof of Concept) is a time-boxed, low-risk experiment that validates whether a specific AI approach can meet your measurable business target.

Targets often include:

- Reducing handling time

- Boosting conversion

- Automating compliance checks

You run it on representative data, define acceptance criteria and guardrails up front (accuracy, cost per outcome, security), and decide to pilot, pivot, or pause based on evidence.

💡 A focused AI Proof of Concept lets you test feasibility and business impact fast, before you commit budget, talent, and reputation.

PoCs are more important than ever. The AI landscape has become harsher. A few years ago, companies spent money left and right to get AI going in their organisation; now, that trend has shifted.

Researchers report that 95% of GenAI pilots never reach production. Executives, aware of this, have become more careful with spending money.

At the same time, cost control is biting. 85% of companies miss AI cost forecasts by 10%+, and 25% miss by 50%+. This often happens when PoCs are done incorrectly or skipped altogether.

AI leaders will now feel more pressure to prove feasibility, unit economics, and risk posture before committing to pilots. That’s why AI PoCs will only become more valuable: they show whether an idea is feasible within the given budget and time constraints and, if it isn’t, let you move quickly to testing another one.

PoC vs PoV vs Pilot vs MVP – Choosing the Right Model

Not every innovation needs a proof of concept. Sometimes you should be proving value or going straight to a pilot.

Ask yourself a question: What uncertainty do you need to resolve – technical feasibility or business value? And how quickly do you need real-world feedback?

Let’s take a closer look at every type of early delivery model.

Proof of Concept (PoC)

PoC is used when you’re unsure if the AI technology can meet the requirements.

A PoC is a focused experiment to answer “Will this tech work for our problem?” It typically has a narrow scope (one use case, perhaps even a subsystem), and is done in a sandbox.

PoC case study: Walmart (“My Assistant” for corporate associates). Walmart ran a PoC to accelerate drafting, summarisation, and idea generation for desk-based teams. Success metrics included time-to-first-draft and user satisfaction on everyday workflows. Outcome: progressed from vision to a working solution in ~60 days, then expanded access across 10+ countries in 2024.

As a rule, a PoC comes first when venturing into new tech. It’s low-cost and low-risk by design.

Proof of Value (PoV)

PoV is used when feasibility is known (perhaps the AI is off-the-shelf or already shown to work) but you need to prove business value in your context. A PoV is essentially a pilot with a hypothesis about ROI.

It asks, “If we implement this for real, will it deliver tangible benefits worth the investment?” That means deploying in a real environment with real users/data, but in a limited way.

Crucially, success is measured in business metrics (revenue lift, cost reduction, customer satisfaction), not just technical model metrics.

PoV Case study: National Grid (UK) × Sensat (digital twins for infrastructure). Sensat’s team ran a PoV to speed early-stage planning with 2D/3D site visualisation. Success metrics included avoided site visits, faster decision cycles, and interoperability with existing workflows. Outcome: rolled Sensat out to multiple UK projects after the PoV.

In the GenAI era, PoVs and PoCs should be inseparable, because the tech is usually workable – the real question is value and usability.

Pilot

Pilots are used when you’re ready to test the operationalisation at a small scale. A pilot is like a final rehearsal for production: you run the nearly-final solution in a production-like environment (real users, live data), but to a limited group or region. The goal is to find any scaling or integration issues and verify adoption in practice.

Real-world example: Morgan Stanley × OpenAI (GPT-4 advisor assistant). Morgan Stanley’s team ran a PoV of an internal RAG assistant for wealth advisors to speed research lookup and brief creation. Success metrics included time to retrieve answers, quality/compliance review outcomes, and advisor adoption rates. Strong usage and value signals led to a scale-up as part of the broader OpenAI partnership.

Outcome: You gather operational data: latency, reliability, user feedback, impact on workflow, etc. Pilots often last longer (several months) and involve more resources than a PoC.

Pilots assume both feasibility and value have been predicted; they are about confirming both in the real world and refining before a broad rollout. A pilot is also a chance to work out support, security, and MLOps processes on a small scale.

MVP (Minimum Viable Product)

MVP comes into play when you already have confidence in feasibility and basic value, and want to deliver a usable slice of the product to real users to iterate.

In an AI context, a “thin-slice MVP” might be appropriate if, say, you’ve done AI projects before or you’re using well-understood AI (like a pre-built model) and the value case is strong. The MVP would be a minimal implementation in production, focusing on one core functionality.

Real-world example: Spotify’s song preview queue. Spotify released a feature that lets users listen to 30-second snippets of songs in a TikTok-like format. During testing, Spotify’s team rolled the feature out to selected groups, rotating access across different user groups to measure adoption. Users loved the feature, so Spotify eventually made it available to everyone.

To decide on which approach is best for you, consider uncertainty vs. investment:

- If you have high technical unknowns, do a PoC.

- If tech is known but value is unproven, do a PoV/pilot focusing on metrics.

- If both tech and value are known, you can jump to an MVP or even full implementation.

Also factor in urgency: competitive or regulatory pressure might mean you run a PoC and a value demonstration in parallel within a pilot to accelerate learning.

Finally, when looking for a technical partner, beware of terminology confusion. Vendors sometimes offer “free pilots” that are essentially sales demos. If someone offers a free pilot, it’s likely just a live demo, not a true pilot.

A real pilot or PoV requires commitment from both sides, often a paid engagement, because you’re customising and measuring in your context.

You can book a 30-minute scoping call with our team to determine the right approach for your AI initiative. We’ll help map your uncertainties to the optimal validation method, whether PoC, PoV, or pilot.

Why AI PoCs Fail and How to Avoid It

With researchers publishing alarming ROI figures, leaders have started to blame AI vendors for overpromising and underdelivering. Some even say AI is a bubble that is about to burst. Still, AI is here to stay, as the largest tech companies create trillions of dollars in economic value while building a new era of AI infrastructure.

At Vodworks, we believe that the issue isn’t the AI itself. AI as a technology is fine; the problem lies in the approach, in how the project is scoped and integrated.

With this in mind, let’s review some factors that might contribute to an AI PoC’s failure:

Undefined success metrics

Teams dive in without a clear North Star metric. Success becomes a moving target (“we’ll know it when we see it”), leading to shifting criteria that doom the PoC.

Solution: To prevent this, teams need to define explicit KPIs up front. There needs to be an agreement between key stakeholders on what business outcome will prove the PoC’s value.

Data reality gap

PoCs often use a clean, static sample of data, but production data is messier.

In the pilot stages, teams might discover the model can’t handle live inputs with missing fields or duplicate entries. These infrastructure surprises often derail deployment.

Solution: do a data assessment in advance. Check data quality, representativeness, and accessibility. If the PoC used a curated dataset, plan a phase to feed in raw real-world data and see what breaks. Also, involve IT early to surface integration or latency issues.
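A data assessment like the one described above can start very small. The sketch below is a minimal example, assuming records arrive as Python dictionaries; the field names (`ticket_id`, `body`, `region`) and the `assess_records` helper are hypothetical and only illustrate counting missing fields and duplicate entries before a PoC starts.

```python
from collections import Counter

def assess_records(records, required_fields):
    """Count missing required fields and duplicate records (hypothetical helper)."""
    missing = Counter()
    seen = set()
    duplicates = 0
    for rec in records:
        # A field counts as missing when it is absent, None, or an empty string.
        for field in required_fields:
            value = rec.get(field)
            if value is None or value == "":
                missing[field] += 1
        # A record is a duplicate when all required fields match an earlier record.
        key = tuple(rec.get(field) for field in required_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"total": len(records), "missing": dict(missing), "duplicates": duplicates}

# Illustrative sample, not real data.
sample = [
    {"ticket_id": "T1", "body": "Refund request", "region": "UK"},
    {"ticket_id": "T2", "body": "", "region": "UK"},
    {"ticket_id": "T1", "body": "Refund request", "region": "UK"},
    {"ticket_id": "T3", "body": "Login issue", "region": None},
]
report = assess_records(sample, ["ticket_id", "body", "region"])
print(report)
```

Running the same check on the curated PoC sample and on a raw production extract gives a quick view of how far apart the two really are.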

Shadow stakeholders

It often happens that teams build AI solutions in silos; it technically works, but the frontline users or compliance team were never on board. These unconsulted stakeholders later block adoption or point out requirements the PoC ignored.

Solution: treat PoCs as cross-functional. Identify all stakeholders (business owners, end-users, compliance, IT, security) and get their input and buy-in early. PoCs that engage end users and domain experts from day one avoid the “applause then apathy” trap.

🚧 If those who would use or approve the AI aren’t involved in creation, they won’t trust or adopt it.

Feasibility ≠ Value

The PoC illusion is the belief that if something can work technically, it will also work in practice. It doesn’t always turn out that way.

A demo might show an ML model hitting 85% accuracy in lab tests, yet the project dies because it wasn’t solving an important problem or no one would use it. Many GenAI PoCs impress technically but never translate into ROI.

Solution: focus on the business case from the start. Ask “If this PoC succeeds, who will do what differently? What pain does it solve, and is that pain big enough?” Before you PoC, make sure you can answer key questions about business need and value.

As BDO’s Technology Partner, Jan Vermeersch, succinctly put it:

“No AI project has ever succeeded purely because the technology worked. Successful projects create measurable business value.”

Total-cost blind spots

PoCs rarely show the full cost picture. Ongoing API costs add up quickly as you scale the solution, model retraining comes into play, and you may even need new hires (MLOps engineers, etc.).

The concept “worked,” but scaling it triggers unanticipated costs that kill ROI.

Solution: do a rough total cost of ownership analysis even for the PoC phase and beyond. Factor in not just development time, but cloud compute, data pipeline setup, and compliance overhead.

Also consider operational costs: monitoring, retraining, customer support for the AI, etc. Many AI initiatives fail at CFO approval because hidden costs emerge. Gartner predicts at least 30% of GenAI projects will be abandoned at PoC by the end of 2025 mainly due to escalating costs or unclear value. Mitigate this by projecting costs for a pilot and 1-year scale, and having frank “go/no-go” criteria.

Defining a Scope of AI PoC

A strong AI PoC begins with a tight scope that links technology to business outcomes. Start by defining the main action your AI solution will perform, and the KPI you’ll move to prove it.

State the Problem

Frame the hypothesis plainly:

with your data and constraints, this approach can achieve X within Y time and Z budget.

Then, translate that into success criteria and guardrails. Your KPIs serve as acceptance thresholds you commit to before any build, e.g. a target win rate versus the current baseline, or the resources spent performing a task versus the pre-AI baseline.
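One way to make those thresholds hard to move later is to write them down as data before any build. The sketch below is only an illustration: the `Criterion` class, the metric names, and every number are assumptions chosen for the example, not recommended targets.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Criterion:
    """One acceptance threshold agreed before the build (hypothetical structure)."""
    name: str
    threshold: float
    higher_is_better: bool = True

    def passed(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.threshold
        return observed <= self.threshold

# Illustrative thresholds only; agree on your own with stakeholders.
criteria = [
    Criterion("answer_accuracy", 0.60),
    Criterion("cost_per_outcome_usd", 0.05, higher_is_better=False),
    Criterion("p95_latency_seconds", 2.0, higher_is_better=False),
]

def evaluate(observed: dict) -> dict:
    """Return pass/fail per criterion for a set of observed metrics."""
    return {c.name: c.passed(observed[c.name]) for c in criteria}

results = evaluate({
    "answer_accuracy": 0.65,
    "cost_per_outcome_usd": 0.08,
    "p95_latency_seconds": 1.4,
})
print(results)
```

Because the dataclass is frozen, a threshold cannot be quietly edited in place once the sprint has started; changing it means creating a new criterion, which is easier to spot in review.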

Data Plan

Data scope should also be optimised for a PoC. Use only data that directly relates to your use case and that you’re legally allowed to use. List the exact sources, confirm you have the right to use them, where the data lives, how long you keep it, and who owns it.

Think of Guardrails Beforehand

Guardrails are the must-have safety rules that protect your brand and customers. They ensure that AI doesn’t output offensive content or expose personal data, can’t be tricked into breaking rules, gets facts right, and keeps a clear record of what happened.

To avoid problems in a future pilot, PoCs need to treat security and privacy as if they were a production-level deployment. An AI PoC that only works in the lab won’t pass procurement or risk review.

Narrow the scope

Don’t overengineer your PoC. Pick the simplest approach that can prove or invalidate your idea quickly: it can be a simple n8n workflow, RAG system, or any other solution that doesn’t require extensive engineering efforts.

Make sure to set economic boundaries. Cap costs per successful outcome and develop stop rules if you go over the budget.
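A stop rule can be as simple as a function that is checked after every batch of runs. The following is a minimal sketch under assumed numbers: `should_stop`, the `min_outcomes` grace period, and all dollar figures are hypothetical.

```python
def should_stop(total_spend_usd, successful_outcomes, cap_per_outcome_usd,
                budget_usd, min_outcomes=20):
    """Return a reason string when a stop rule fires, otherwise None."""
    # Rule 1: the overall PoC budget is a hard limit.
    if total_spend_usd >= budget_usd:
        return "budget exhausted"
    # Rule 2: unit cost is only judged once there are enough outcomes to be meaningful.
    if successful_outcomes >= min_outcomes:
        unit_cost = total_spend_usd / successful_outcomes
        if unit_cost > cap_per_outcome_usd:
            return f"unit cost ${unit_cost:.2f} exceeds cap ${cap_per_outcome_usd:.2f}"
    return None

print(should_stop(120, 50, cap_per_outcome_usd=2.0, budget_usd=500))
print(should_stop(30, 50, cap_per_outcome_usd=2.0, budget_usd=500))
```

The grace period is a design choice: with only a handful of successful outcomes, the unit cost swings too much to justify stopping.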

Keep the scope tight: one workflow, one user type, one region. This way you can get a clean signal if your PoC is viable or not.

Write down the thresholds that trigger go/no-go and who has the final say in launching and scaling the project (Product, Security, Finance).

4 Weeks of AI PoC Sprints: Validate Your Idea in a Month

Now let’s talk about the entire timeline of building your AI PoC. Here’s a four-week run that takes you from a sketch to a validated AI PoC.

AI PoC Preparation (2-3 days before the Sprint)

During the preparation phase it’s essential to determine what exactly you want to accomplish with your AI PoC. Before you commit to the project, you need:

- A north star metric that needs to grow

- A problem statement (who hurts, when, how often)

- A feasibility hypothesis (data + technique)

- A value hypothesis (time saved, revenue lift proxy)

If you’re struggling to identify a proper use case, look into what other organisations from your industry are doing with AI.

After you identify the primary use case, ask yourself this list of questions to eliminate any uncertainties during the PoC development phase:

- Who owns the outcome and will sign off on success or failure?

- Which single workflow, user type, and region are we targeting first?

- Which exact data sources will we use? Do we have legal rights to use that data?

- What is our unit cost per successful outcome, and what’s the hard cap?

- What are the hidden costs (tokens, embeddings, storage, egress, eval runs, people time)?

- Who are the pilot users, how will we train them, and how will we capture acceptance/feedback?

- Do we have the talent and time in-house—or do we need a partner?

On the technical side, the team needs to finalise data access arrangements. For example, ensure the database team has delivered the data extract or API keys are obtained. Also, set up the development environment: this might mean provisioning a cloud instance, installing necessary libraries, and testing connectivity to any services (vector DB, APIs).

Your goal: On Day 1 of Week 1, you’re not blocked on credentials or environment issues.

Week 1: Planning Experiments Out and Prepping the Data

Week 1 goal: Stand up a reliable data pipeline, run baselines, and show a rough but working demo to stakeholders.

Translating goals into practical hypotheses

An AI PoC should start with translating the business goal into a set of practical solution hypotheses. Your PoC team needs to check all ideas against data, infrastructure, and budget constraints to filter out the least viable and test the remaining ones during the AI PoC process.

Usually, the more ideas you have – the more testing material you have. However, you need to thoroughly filter them, or else having too many ideas might overrun the defined time limits or involve too many development resources.

Preparing experiments and hypotheses for solutions shouldn't take more than two days, if you manage to have a few productive workshops.

Setting up the data plumbing and attempting first runs

Next comes data aggregation. In the first few days, get the data flowing. Import or load the data into your environment.

If training a model, assemble the training dataset and ensure you can read it correctly. Essentially, get to “data in, data out” functionality early. By the end of the week, you should be able to run a simple query through the pipeline (even if using a trivial model or no model yet) to understand whether the pipeline is operating reliably.

When the plumbing is done, perform baseline model runs. Call a pre-trained model with a basic prompt on your dataset. Repeat several times with different inputs. This is your baseline run. It’s okay if the performance is poor. The goal of this run is to validate that the model runs, has access to your data, and produces output.
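A baseline run like this needs very little code. The sketch below assumes nothing about your provider: `fake_model` is a stand-in that should be replaced with a real model call, and `run_baseline` is a hypothetical harness that records output, errors, and timing for each input.

```python
import time

def fake_model(prompt: str) -> str:
    # Stand-in for a real model call; replace with your provider's client.
    return f"echo: {prompt[:40]}"

def run_baseline(model, inputs):
    """Call the model once per input and record output, error, and duration."""
    rows = []
    for text in inputs:
        start = time.perf_counter()
        try:
            output, error = model(text), None
        except Exception as exc:  # a baseline run should log failures, not crash
            output, error = None, repr(exc)
        rows.append({
            "input": text,
            "output": output,
            "error": error,
            "seconds": time.perf_counter() - start,
        })
    return rows

rows = run_baseline(fake_model, ["How do I reset my password?", "What is the refund policy?"])
failures = [r for r in rows if r["error"] is not None or not r["output"]]
print(f"{len(rows)} runs, {len(failures)} failures")
```

At this stage the quality of the answers matters less than the failure count: the point is to confirm the model runs end to end on your inputs.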

Checkpoint meeting with stakeholders

If your foundation is working properly, this is a good time to present a first raw demo to stakeholders. Demonstrate that the baseline is working with your org’s data. Even if the outputs are wrong, showing progress builds trust.

Week 2: Iteration and Enhancement

Week 2 goal: Polish the prototype from week 1 while adding essential guardrails and cost controls.

Prompt Tuning

With a baseline in hand, iterate to improve results. For LLMs, this could mean refining the prompt (e.g. adding instructions like “cite the handbook in answers” or trying few-shot examples) or experimenting with parameters (temperature, etc.).

Make one change at a time and measure on your eval set. Track these experiments (a simple spreadsheet or using experiment tracking if you have it). You might find e.g. adding a 2-shot example to the prompt raises accuracy from 50% to 65%. Or reducing the model’s temperature cuts down hallucinations.
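The "one change at a time" loop can be kept honest with a fixed eval set and a small log. This is a toy sketch: the eval questions are invented, and `baseline_prompt` and `two_shot_prompt` are stand-in functions that would each wrap a real model call with a different prompt in an actual PoC.

```python
import csv
import io

# Tiny illustrative eval set: (question, expected answer).
EVAL_SET = [
    ("2+2", "4"),
    ("capital of France", "Paris"),
    ("3*3", "9"),
    ("opposite of hot", "cold"),
]

def accuracy(predict, eval_set):
    """Share of eval questions answered exactly right (case-insensitive)."""
    correct = sum(
        1 for question, expected in eval_set
        if predict(question).strip().lower() == expected.lower()
    )
    return correct / len(eval_set)

# Stand-ins for two prompt variants; in a real PoC each would call the model.
def baseline_prompt(question):
    return {"2+2": "4", "capital of France": "Paris"}.get(question, "unknown")

def two_shot_prompt(question):
    known = {"2+2": "4", "capital of France": "Paris", "3*3": "9"}
    return known.get(question, "unknown")

experiments = [
    {"run": 1, "change": "baseline prompt", "accuracy": accuracy(baseline_prompt, EVAL_SET)},
    {"run": 2, "change": "added 2-shot examples", "accuracy": accuracy(two_shot_prompt, EVAL_SET)},
]

# Write the log as CSV text; use open("experiments.csv", "w", newline="") for a file.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["run", "change", "accuracy"])
writer.writeheader()
writer.writerows(experiments)
print(buffer.getvalue())
```

Keeping the eval set fixed across runs is what makes the accuracy column comparable from one change to the next.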

Augment with retrieval or external data

Week 2 is often when you add complexity like RAG or additional inputs. For instance, integrate vector database retrieval into the pipeline and see if answers improve with added context.

Or incorporate an external API (for example, your PoC assistant calls a weather API to answer queries about weather). Ensure these integrations work and note their impact.
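To make the retrieval step concrete, here is a deliberately simplified sketch. It swaps a real vector database and embedding model for plain word-count cosine similarity, so it only illustrates the shape of the step (score documents, pick the best, add it to the prompt); the documents and the `retrieve` helper are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

# Illustrative knowledge-base snippets, not real policy text.
docs = [
    "Refunds are issued within 14 days of the return being received.",
    "Passwords can be reset from the account settings page.",
    "Our offices are closed on public holidays.",
]
question = "How do I reset my password?"
context = retrieve(question, docs, k=1)
prompt = f"Answer using only this context:\n{context[0]}\n\nQuestion: {question}"
print(prompt)
```

Note the weakness of word matching: "password" and "passwords" do not match here, and the right document wins only through the word "reset". Comparing eval-set accuracy with and without the added context is what shows whether retrieval earns its complexity.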

Guardrail Implementation

As you improve core performance, also implement guardrails. This includes content filters, thresholding, and logging of any concerning outputs.

Budget thresholding is another key guardrail that needs to be implemented during AI PoC. Keep an eye on token consumption and abnormal consumption spikes. If costs are higher than expected, now is the time to adjust.
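A budget guardrail does not need a monitoring platform at PoC stage. The sketch below is one possible shape, with assumed values: the `TokenBudget` class, the daily limit, and the "3× the running average" spike rule are all illustrative choices.

```python
class TokenBudget:
    """Track token usage and flag spikes or limit breaches (hypothetical helper)."""

    def __init__(self, daily_limit, spike_factor=3.0):
        self.daily_limit = daily_limit
        self.spike_factor = spike_factor
        self.used = 0
        self.calls = 0

    def record(self, tokens):
        alerts = []
        # Compare this call with the average of all previous calls.
        if self.calls > 0:
            average = self.used / self.calls
            if tokens > self.spike_factor * average:
                alerts.append("spike")
        self.used += tokens
        self.calls += 1
        if self.used > self.daily_limit:
            alerts.append("over_limit")
        return alerts

budget = TokenBudget(daily_limit=10_000)
history = [budget.record(n) for n in (1000, 1200, 5000, 3000)]
print(history)
```

In practice the token counts would come from the usage figures your model provider returns with each response.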

If your PoC struggles with hallucinations, one guardrail pattern is to have the model phrase uncertain answers differently or include a disclaimer. Test these measures with your adversarial prompts and refine. By the end of Week 2, you want the model to hit the primary metric as best as possible, with basic safety nets in place.
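Two of the guardrails mentioned above, redacting personal data and flagging uncertain answers, can be prototyped as a thin wrapper around the model output. This is a sketch under loud assumptions: the `confidence` score is hypothetical (it might come from an evaluator or a retrieval score), the email pattern is simplistic, and a real deployment would need a proper PII detection approach.

```python
import re

# Simplistic email pattern for illustration; not a complete PII detector.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
DISCLAIMER = "Note: I am not fully certain about this answer; please verify it."

def apply_guardrails(answer: str, confidence: float, min_confidence: float = 0.7):
    """Redact emails, add a disclaimer to low-confidence answers, and return flags for logging."""
    flags = []
    safe = EMAIL.sub("[redacted email]", answer)
    if safe != answer:
        flags.append("pii_redacted")
    if confidence < min_confidence:
        safe = f"{safe}\n\n{DISCLAIMER}"
        flags.append("low_confidence")
    return safe, flags

text, flags = apply_guardrails("Contact jane.doe@example.com for access.", confidence=0.5)
print(text)
print(flags)
```

Logging the returned flags alongside each response gives you the record of concerning outputs to review with your adversarial prompts.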

Milestone

By the end of Week 2, aim to have a first fully working version. Your AI PoC system should meet (or nearly meet) the success criteria in a controlled test.

Week 3: Dry-Run, Evaluation, and Hardening

Week 3 goal: Validate with real users, harden reliability and security, and produce a simple ROI/cost model to inform go/no-go decision.

Stakeholder Dry Run

At the start of Week 3, involve a small group of end-users or stakeholders to try the PoC system. This could be a live demo or a hands-on session.

The idea is to get feedback beyond the team working on the PoC. Watch how they use it and note any confusion or additional needs. Often, business users will point out things like “the response is correct but too wordy” or “I really wish it could also do X.”

Manage expectations (it’s still a PoC), but this feedback is gold for both final tweaks and building buy-in.

Also, this is a chance to measure subjective satisfaction: do they trust the AI’s answers? Are they comfortable with the interface? Sometimes the metric goals are hit, but a user might say “I still wouldn’t use it because of reason Y.” Identify those reasons.

Cost & value analysis

By now, you have enough data to extrapolate costs and benefits on a small scale.

This week, do a sanity-check on ROI: If the PoC success criteria were met, what would scaling look like?

For example, if the AI handled 500 queries in the test with 70% success and cost $5 in API calls, scale that to your volume (50k queries would be ~$500 cost; is that affordable relative to savings from agent time?).
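The arithmetic in that example is worth scripting so the numbers can be re-run as volumes change. The sketch below uses only the figures from the example above (500 test queries, $5 in API calls, 70% success, 50k target queries); the `extrapolate` function itself is a hypothetical helper and assumes cost scales linearly with volume.

```python
def extrapolate(test_queries, test_cost_usd, success_rate, target_queries):
    """Scale PoC test costs linearly to a target query volume."""
    cost_per_query = test_cost_usd / test_queries
    projected_cost = test_cost_usd * target_queries / test_queries
    # Only successful answers create value, so spread the cost over successes.
    cost_per_success = cost_per_query / success_rate
    return {
        "cost_per_query_usd": cost_per_query,
        "projected_cost_usd": projected_cost,
        "cost_per_success_usd": cost_per_success,
    }

projection = extrapolate(test_queries=500, test_cost_usd=5.0,
                         success_rate=0.70, target_queries=50_000)
for name, value in projection.items():
    print(f"{name}: {value:.4f}")
```

The cost per successful outcome (about $0.014 here) is the figure to compare against the value of the agent time saved per resolved query.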

Also consider operational costs: how would we retrain or maintain this? How would infrastructure costs change?

This doesn’t have to be super detailed, but be ready to discuss cost per transaction and similar figures in the final report. Many PoCs fail to transition because AI development teams rarely quantify value versus cost clearly, so you’ll stand out if you do. Keep in mind that CFOs are wary of indirect future ROI, so frame value in concrete terms.

Security review

If any security or compliance evaluation was pending, do a final check in Week 3. Run a vulnerability scan on your PoC app if it’s web-facing.

Ensure you followed through on any promises (e.g. you didn’t accidentally store personal data longer than allowed). This is mostly about preparing for a pilot: if the PoC looks good, you want no delays from InfoSec in moving ahead. Ideally, invite a security or compliance officer to see the PoC in action now, which often alleviates their concerns (they see it’s under control).

Pivot or polish

Week 3 is also decision time internally: if by now the core goal isn’t roughly met, consider pivoting or stopping early.

Don’t push on to the Week 4 demo with a failing approach unless you have a strong reason to believe it will suddenly improve. If metrics are way off, this is the time to try a different model or approach.

But if things are on track, use Week 3 to polish: fix any annoying bugs, improve the UI/UX for clarity (even simple labels or instructions for users), and ensure reliability for the final demo (add some error handling, etc.). Also prepare documentation, a brief user guide or technical note, so people can understand the PoC system.

Week 4: User Demo and Next-Step Decision

Week 4 goal: Present results and secure a scale/pilot decision with a lightweight rollout plan and owners.

Final Demo Day

This is the week you present the AI PoC outcomes. Conduct a live demo to the executive sponsors and relevant stakeholders (could be in a meeting or recording a video if async). Show not just the “happy path” but also discuss limitations honestly.

For example, demonstrate a typical use case where it shines, and perhaps also mention an edge case it can’t handle yet (and how you’d address that in a pilot).

Also present the metrics, e.g. “our AI answers ~65% of questions correctly within 2 seconds, meeting the 60% target we set, and flagged no compliance issues in testing.”

Prepare slides with key results to make the demo more visual.

Prior to the demo, gather positive user stories. For example, “Agent John tested it and said it solved his issue in 1 minute instead of 5, he was impressed.” This adds credibility that it’s not just numbers but also practically useful.

Decision review

After showing results, circle back to the decision criteria set at the start. Are the green lights met? This meeting is where you recommend to scale, pivot, or pause.

- If the PoC met success criteria: recommend proceeding to a larger pilot or limited production rollout.

- If partially met: propose what changes or additional work are needed (maybe extend PoC or pivot approach).

- If it failed: be ready to say so and suggest not moving forward (or exploring alternative solutions).

Having pre-agreed criteria makes this easier: it’s not personal or about sunk cost, it’s a planned outcome. Make sure the key decision-makers voice their agreement and next steps are clear (“Yes, let’s plan a pilot for the next quarter,” or “No, we’ll not pursue this further because value wasn’t proven”). Capture that outcome.
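The three outcomes above can be written down as an explicit rule, so the recommendation follows from the results rather than from the mood in the room. This is only a sketch: the `recommend` function and the idea of "must-pass" criteria (for example, security) are assumptions added for illustration.

```python
def recommend(results: dict, must_pass=()) -> str:
    """Map per-criterion pass/fail results to a recommendation."""
    passed = sum(results.values())
    if passed == len(results):
        return "proceed to pilot"
    # Nothing passed, or a non-negotiable criterion failed.
    if passed == 0 or any(not results[name] for name in must_pass):
        return "pause"
    return "extend or pivot"

print(recommend({"accuracy": True, "cost": True}))
print(recommend({"accuracy": True, "cost": False}))
print(recommend({"accuracy": True, "security": False}, must_pass=("security",)))
```

Agreeing on which criteria are must-pass belongs in the scoping stage, alongside the thresholds themselves.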

Scale roadmap

If the decision is positive, outline a high-level roadmap for scaling.

This doesn’t need to be very detailed yet, but should answer: what’s needed to go from PoC to pilot or production? Often this means “develop XYZ feature, integrate with system ABC, address identified risks, and implement MLOps for monitoring.”

Also timeline: “we can launch a pilot to 10% users in 3 months.”

Include a rough budget estimate if known. Essentially give stakeholders a glimpse of the path forward with phases (Pilot -> MVP -> Full launch) and resource needs. This helps them secure budget or approvals.

Throughout these weeks, communication is key. Have short stand-ups or syncs at least twice a week within the team, and weekly updates to stakeholders (even just an email) to keep confidence.

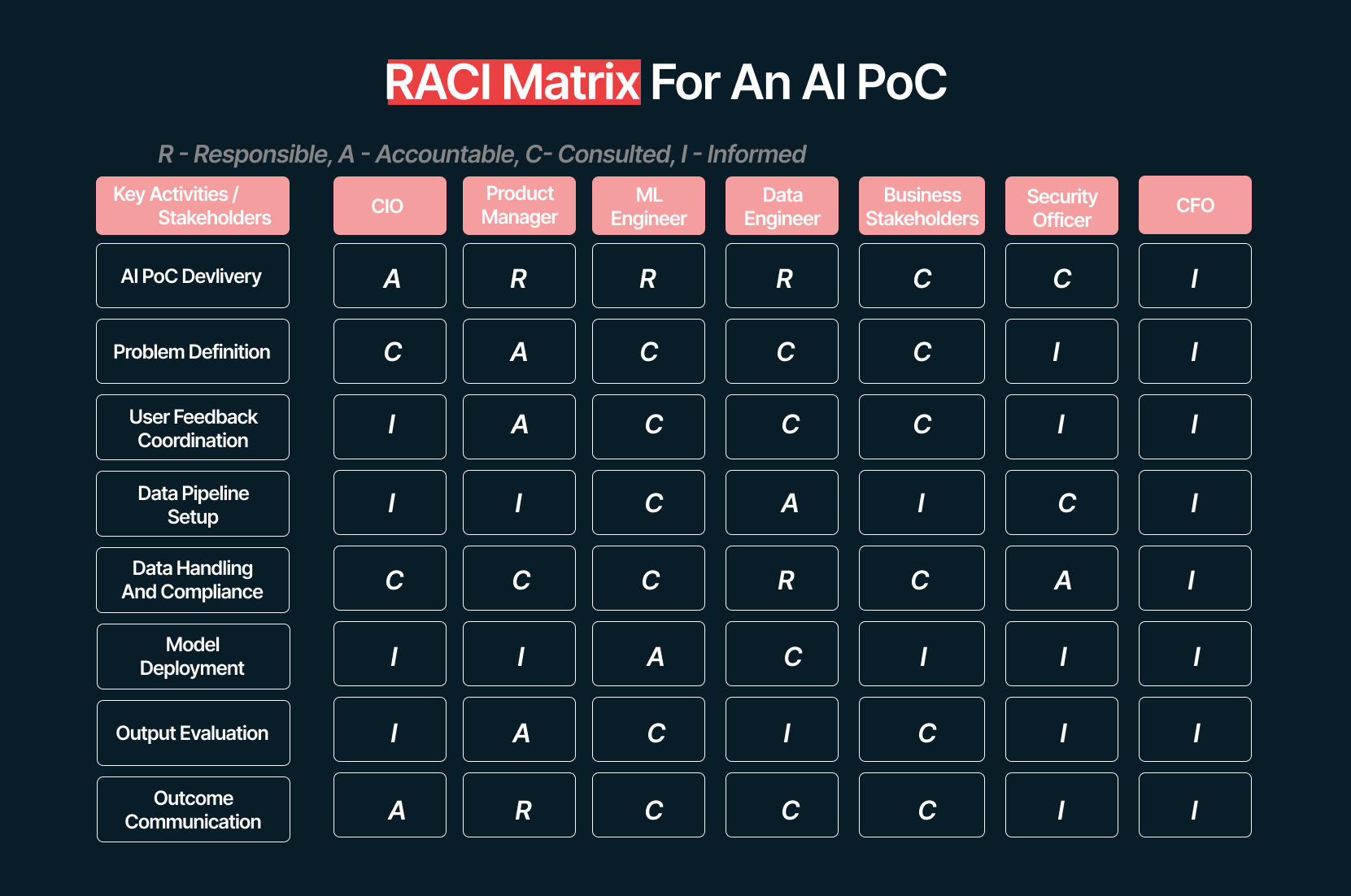

Time-boxing to 4 weeks encourages focusing on the highest-impact tasks first. Many teams also assign a RACI (Responsible, Accountable, Consulted, Informed) matrix for the PoC. A quick RACI table for an AI PoC may look like this:

This ensures everyone knows their role. For instance, the Product person might coordinate user evaluations and keep the user perspective in focus, the Engineer ensures tech delivery, Security signs off on data use, etc.

Choose the Right Use Case for an AI PoC with Vodworks’ AI Readiness Package

Before starting your AI PoC, the smartest move is to make sure the right use case, data, and environment are ready to produce evidence against clear acceptance criteria (accuracy, cost per outcome, security). The Vodworks AI Readiness Package does exactly that without derailing your day-to-day operations.

- We begin with a use case exploration sprint that ranks candidate ideas by business impact, data availability, and expected payback.

- Our architects run an AI readiness assessment across your data estate, infrastructure, and current guardrails governance to surface gaps or risks that could block a PoC or inflate unit economics.

- We translate findings into a step-by-step improvement plan tied to your PoC, plus a board-ready summary, actionable roadmap, and toolchain recommendations that fit your budget cycle.

Prefer hands-on help? We can prepare your environment for a PoC, clean priority datasets, set up data pipelines, and harden access/observability, so your in-house team or chosen partner can execute the PoC and move to pilot with fewer surprises.

Book a 30-minute discovery call with a Vodworks AI solution architect to review your data estate, clarify acceptance criteria, and map the shortest path to a PoC that proves value.

