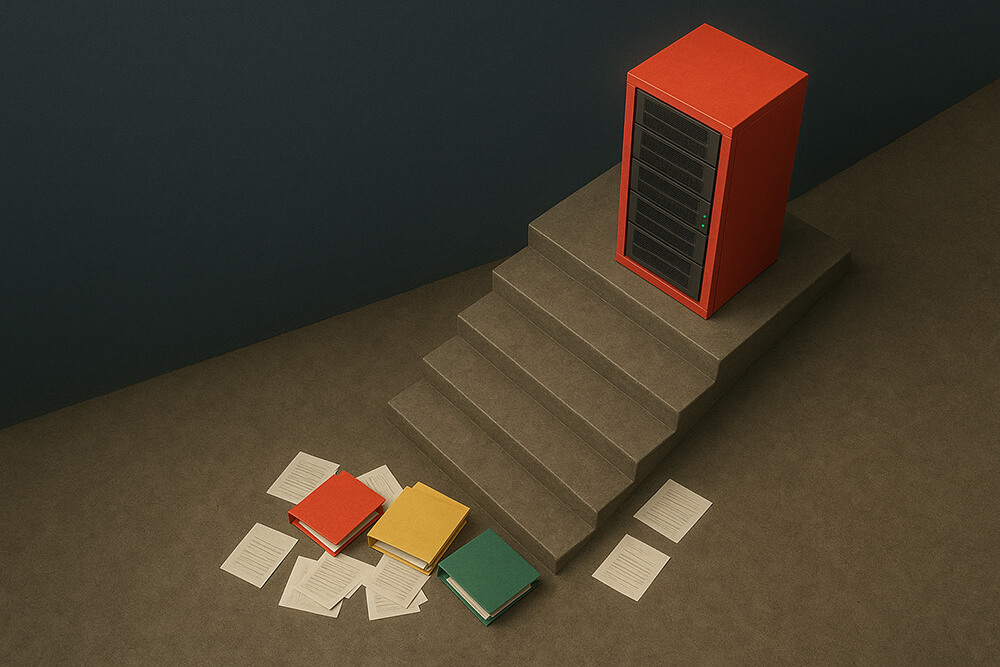

Stairway to Ideal Data: Best AI Readiness Assessment Frameworks + Ultimate Data Scoring Questionnaire

June 4, 2025 - 10 min read

It has never been easier to add AI to your stack. AI models are a click away, ready to deploy via API, on-prem, or inside existing platforms. Yet that very accessibility exposes a tougher challenge: incomplete, outdated, or unreliable data that stalls AI pilots because no one can trust the outputs.

This article helps you take an objective look at your data estate before you green-light the next AI project.

What you’ll learn

- How to self-score your data estate across availability, quality, governance, architecture, scale, and culture, using a checklist that fits straight into your current workflow.

- Where you stand on the industry maturity curve, through a concise comparison of leading AI-readiness frameworks (Gartner, Cisco, Fivetran, and more) and why most still fall short for Gen-AI workloads.

- Which fixes will help you reach AI readiness on your own, and when it’s worth engaging an external partner.

Or, you can go directly to Vodworks AI Data Readiness Questionnaire to see your current position on the AI data maturity curve.

Why Your Data Estate Is the Bedrock of AI Success

Data Readiness is the Strongest Predictor of Enterprise AI Success

Michael Vad, Partner, CEO and Board Services Leader, Deloitte:

“Although enterprise investment in AI is increasing globally, many boards are still getting up to speed and are unsure how to govern its use.”

Leadership today doesn’t hesitate to throw money at AI to see quick results. But money can’t be the defining factor in AI success for large organisations where data is stored in disparate spreadsheets, databases are outdated, and contextually rich information is left to die in silos.

Board-level reality: half of companies feel direct pressure from the CEO to “show AI impact in 12 months”. Yet 48% admit they lack the in-house skill to manage production AI pipelines.

Unfortunately, teams can’t throw money at their data management infrastructure and expect it to magically start working. It’s yet another company-wide infrastructure project that needs a solid strategy, an iterative approach, and a specific set of skills.

According to Cisco’s research, the largest AI readiness gaps are observed in the fields of infrastructure, data, and data governance.

73% of companies report issues with data integration between data sources, AI tools, and analytics platforms. A surprising 29% of companies report that their tools are mostly not integrated.

Even under constant pressure, AI teams need to stay realistic and not overpromise when strategizing AI implementation. The same research shows that 76% of companies believe they have a good AI strategy in place or will have one shortly.

If you are one of those companies, consider taking a step back and ensuring that your strategy includes bringing your data infrastructure up to speed.

POCs ≠ Production Systems: There’s a Drastic Difference in Data Quality

Early pilots often look impressive because they run on carefully groomed sample sets. The collapse comes when the model is exposed to real-world data that is incomplete, duplicated or stale. That is why:

- 87% of data-science projects never reach production.

- Fewer than one-third (32%) of enterprises feel “highly ready” on the data front.

Rob Zelinka, CIO of Jack Henry, captures the point:

“The most important thing is not just collect the data, but cleanse, categorize the data and make sure it’s in a usable format. Otherwise, you’re just paying to store meaningless data”

For CIOs, the message is straightforward: every hour spent on lineage, quality rules and accessible metadata shortens the path to business value.

Jumping Ahead of Your Data Comes with Financial Risks

Boards want headline-grabbing Gen-AI pilots, and they want them fast. But until the issue with messy data is resolved, all future projects come with huge financial risks. Gartner, for example, puts the AI-project failure rate at 85%, driven mainly by poor or missing data.

The overall data management trends are disappointing:

- Bad data already wipes out 15–25% of revenue through re-work, delays and compliance penalties.

- 80% of companies still wrestle with basic cleansing, and 64% admit their data provenance tracking is weak.

With that kind of pressure, AI teams and leaders are left with two options: allocate more resources to data-management efforts, increasing initial costs and time to launch Gen-AI pilots; or build projects on the data foundation they already have, which might eventually prove unreliable and require even more spend on data infrastructure when they move from POCs to production deployment.

Vodworks’ Data-First AI Readiness Questionnaire

The Vodworks questionnaire distills our data-and-AI project expertise and the top AI readiness frameworks into one focused diagnostic. You can explore concise overviews of other great frameworks that served as a basis for this questionnaire later in this article.

Instead of quizzing you on organisational culture or broad change-management themes, it concentrates on the six data pillars that decide whether an AI proof-of-concept ever reaches production:

- Inventory & Catalog – can everyone find and trust the data?

- Accessibility & Integration – do pipelines land data quickly and reliably?

- Data Quality – are accuracy and timeliness measured—and enforced?

- Lineage & Observability – can you prove where every feature came from?

- Governance & Compliance – is privacy-by-design baked into the platform?

- Infrastructure & Performance – will compute, storage and latency scale with demand?

Each question presents four anchor statements (0 to 3 points), so teams can score themselves in under two hours and see where they succeed and where they have room for improvement. Tally the points and you’ll see instantly whether you’re a Data Initiate, Builder, Driver or Master, plus which fixes will unlock the quickest ROI from Gen-AI.
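
The tally itself is simple arithmetic. Here is a minimal sketch in Python, assuming one 0 to 3 score per question, grouped by pillar. The tier cut-offs are hypothetical placeholders, because this article does not state the questionnaire’s real thresholds, and the sample answers are invented for illustration.

```python
# Hypothetical tier cut-offs, expressed as a share of the maximum possible score.
# The real questionnaire thresholds are not given in this article.
TIER_CUTOFFS = [(0.75, "Master"), (0.50, "Driver"), (0.25, "Builder"), (0.0, "Initiate")]
MAX_POINTS_PER_QUESTION = 3  # each question is scored 0-3


def pillar_totals(answers: dict[str, list[int]]) -> dict[str, int]:
    """Sum the 0-3 scores for each pillar, rejecting out-of-range values."""
    for pillar, scores in answers.items():
        if any(s < 0 or s > MAX_POINTS_PER_QUESTION for s in scores):
            raise ValueError(f"scores for {pillar!r} must be between 0 and 3")
    return {pillar: sum(scores) for pillar, scores in answers.items()}


def maturity_tier(answers: dict[str, list[int]]) -> str:
    """Map the overall share of points earned to a (hypothetical) tier."""
    totals = pillar_totals(answers)
    maximum = MAX_POINTS_PER_QUESTION * sum(len(s) for s in answers.values())
    if maximum == 0:
        raise ValueError("no answers to score")
    share = sum(totals.values()) / maximum
    for cutoff, tier in TIER_CUTOFFS:
        if share >= cutoff:
            return tier
    return "Initiate"


# Illustrative answers for three of the six pillars.
answers = {
    "Inventory & Catalog": [2, 1],
    "Data Quality": [3, 2],
    "Lineage & Observability": [0, 1],
}
totals = pillar_totals(answers)
weakest_pillar = min(totals, key=totals.get)
tier = maturity_tier(answers)
```

Keeping per-pillar totals alongside the overall tier matters: the weakest pillar is usually where the first fix should go, even when the headline tier looks respectable.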

Ready to find out if your data is AI-ready? Take our questionnaire and see where you stand on the AI readiness curve.

Comparative Landscape: Popular AI Readiness Assessment Frameworks on the Market

Before you score your own data estate, it helps to know how the market is already measuring “AI readiness.”

Consultancies, cloud vendors, and independent researchers have published their own AI maturity models. Some of them are narrowly focused on infrastructure, others also include culture and ethics.

Taken together, they form a useful benchmarking lens, but they are far from interchangeable. For example, Cisco’s global AI Readiness Index finds that only 32% of companies rate themselves “highly ready” on data fundamentals, yet when Gartner asked people in charge of AI data governance, only 4% said their data was ready.

To stay unbiased, we’ll take a look at several of the most cited AI readiness frameworks and only focus on how each one treats data quality, lineage, and governance.

Deloitte’s AI Readiness Maturity Curve

Deloitte’s guide frames data readiness as a three-step progression: from Tier 3 “Readiness Deficit” to Tier 1 “AI-Ready”. It anchors every step to the FAIR principles of Findability, Accessibility, Interoperability and Reusability. CIOs can mine the rubric for four practical levers that map cleanly to quality, lineage, governance and infrastructure.

What Deloitte adds to your assessment lens

How to apply Deloitte’s model inside your organisation

Baseline against the tiers.

- Tier 3 (Deficit): No complete inventory; ad-hoc extracts.

- Tier 2 (Maturity): Inventory exists but is manual; quality rules are patchy.

- Tier 1 (AI-Ready): Inventory auto-updates, lineage graph traceable in <5 clicks, privacy controls codified.

Run a FAIR gap workshop.

Ask domain owners to score each FAIR attribute 0-4 for their critical datasets. Use evidence (catalogue entries, quality dashboards) rather than opinion.
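
One way to turn the workshop output into a ranked backlog is sketched below. It assumes each critical dataset has a 0 to 4 score per FAIR attribute; the dataset names and scores are invented for illustration and are not part of Deloitte’s guide.

```python
FAIR_ATTRIBUTES = ("findable", "accessible", "interoperable", "reusable")
MAX_SCORE = 4  # workshop scores run from 0 to 4


def fair_gaps(scores: dict[str, dict[str, int]]) -> list[tuple[str, str, int]]:
    """Return (dataset, attribute, gap) tuples, largest gap first."""
    gaps = []
    for dataset, attrs in scores.items():
        for attr in FAIR_ATTRIBUTES:
            gap = MAX_SCORE - attrs[attr]
            if gap > 0:
                gaps.append((dataset, attr, gap))
    # sorted() is stable, so ties keep the order in which datasets were listed
    return sorted(gaps, key=lambda g: g[2], reverse=True)


# Hypothetical workshop results for two datasets.
workshop_scores = {
    "customer_orders": {"findable": 3, "accessible": 4, "interoperable": 2, "reusable": 1},
    "web_clickstream": {"findable": 1, "accessible": 2, "interoperable": 2, "reusable": 2},
}
ranked_gaps = fair_gaps(workshop_scores)
```

The ranked list gives the workshop a concrete output: the top entries are the dataset and attribute pairs to fix first.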

Prioritise findability → lineage → quality in that order.

Deloitte stresses that stakeholders must find and understand data before they can cleanse it. Automate catalogue + lineage capture first; quality rules follow.

Codify transformation & security together.

Build cleansing, anonymisation and access policies into the same pipeline stage. That moves you simultaneously up the Quality and Governance ladders.
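
As an illustration of what “the same pipeline stage” can mean, the sketch below cleanses, pseudonymises and access-checks rows in one function. The field names, roles and salt are hypothetical, and salted hashing is pseudonymisation rather than full anonymisation; treat it as a starting point, not a compliance control.

```python
import hashlib

# Hypothetical policy values; in practice these would come from your catalogue and IAM.
PII_FIELDS = {"email"}
ALLOWED_ROLES = {"analyst", "ml_engineer"}


def pseudonymise(value: str, salt: str) -> str:
    """Replace a PII value with a salted SHA-256 digest (truncated for readability)."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:16]


def clean_and_protect(rows: list[dict], role: str, salt: str) -> list[dict]:
    """Cleanse, pseudonymise and access-check in a single pipeline stage."""
    if role not in ALLOWED_ROLES:
        raise PermissionError(f"role {role!r} may not read this dataset")

    seen_ids = set()
    result = []
    for row in rows:
        customer_id = row.get("customer_id")
        if customer_id is None or customer_id in seen_ids:
            continue  # cleansing: drop rows with a missing or duplicate key
        seen_ids.add(customer_id)

        cleaned = dict(row)  # copy so the raw input is left untouched
        for field in PII_FIELDS:
            if cleaned.get(field) is not None:
                normalised = str(cleaned[field]).strip().lower()
                cleaned[field] = pseudonymise(normalised, salt)
        result.append(cleaned)
    return result


raw_rows = [
    {"customer_id": 1, "email": " Ana@Example.com "},
    {"customer_id": 1, "email": "ana@example.com"},       # duplicate key
    {"customer_id": None, "email": "ghost@example.com"},  # missing key
    {"customer_id": 2, "email": "bo@example.com"},
]
protected = clean_and_protect(raw_rows, role="analyst", salt="demo-salt")
```

Because the access check, the cleansing rules and the PII handling live in one place, a change to any of them is reviewed and versioned together.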

Re-score after every major AI release.

The rubric is designed for iteration; set a quarterly cadence so the board sees measurable progress toward Tier 1.

Cisco’s AI Readiness Questionnaire

Cisco’s questionnaire walks respondents through six domains, but three of them, namely Infrastructure, Data, and Governance, contain the hard questions every CIO should answer before funding the next AI sprint. Each question offers four tick-box statements that effectively form a 0-to-3 scale; by transcribing those answers into a spreadsheet you can build a simple heat map of where data risk will spike first.

What Cisco adds to your assessment lens

How to apply Cisco’s questionnaire to quality, lineage, governance & infrastructure

Run the survey verbatim, but capture evidence.

For each answer, attach artefacts—latency dashboards, lineage graphs, policy documents—so scores are defensible.

Translate options into numeric scores.

Cisco’s four answer choices map cleanly to 0 (“Not scalable at all”) through 3 (“Fully scalable; can handle any AI workload”). A quick pivot table highlights red zones.

Slice results by pillar.

- Quality: answers to pre-processing and accuracy-verification questions.

- Lineage: origin-tracking and provenance prompts.

- Governance: bias detection, anonymisation, breach readiness.

- Infrastructure: scalability, GPU integration, latency, security.
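
The scoring and slicing steps above can be sketched without a spreadsheet. The example assumes each response is recorded as the position of the ticked option (0 for the weakest statement, 3 for the strongest); the question labels, sample answers and the “red” threshold are illustrative assumptions, not Cisco’s wording.

```python
from collections import defaultdict
from statistics import mean

# (pillar, question label, index of the ticked option: 0 = weakest, 3 = strongest)
responses = [
    ("Infrastructure", "scalability", 1),
    ("Infrastructure", "latency", 1),
    ("Governance", "bias detection", 2),
    ("Quality", "pre-processing", 3),
    ("Lineage", "origin tracking", 0),
]


def pillar_heat_map(responses, red_below=1.5):
    """Average the 0-3 scores per pillar and flag pillars under the threshold."""
    by_pillar = defaultdict(list)
    for pillar, _question, score in responses:
        if score not in (0, 1, 2, 3):
            raise ValueError(f"score must be 0-3, got {score!r}")
        by_pillar[pillar].append(score)
    heat = {}
    for pillar, scores in by_pillar.items():
        average = mean(scores)
        heat[pillar] = (average, "red" if average < red_below else "ok")
    return heat


heat = pillar_heat_map(responses)
red_zones = sorted(p for p, (_avg, flag) in heat.items() if flag == "red")
```

The threshold of 1.5 is arbitrary; pick whatever cut-off your steering group agrees counts as a red zone.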

Prioritise fixes where risk intersects cost.

If Infrastructure Latency scores 1 while Governance Bias scores 2, upgrade network fabric first, and you’ll unlock every downstream model iteration and reduce cloud overruns.

Fivetran’s “Primer” for Data Readiness for GenAI

Fivetran’s guide is blunt: “Generative AI is only as good as its data.” It narrows readiness to two non-negotiables:

- Automated, reliable data movement from every source into a single cloud warehouse, lake, or lakehouse.

- Rigorous governance: knowing, protecting and granting access to that data.

Fivetran treats pipelines and policies as the primary workload, while the model is secondary.

What the primer adds to your assessment lens

How to apply Fivetran’s playbook inside your organisation

Audit ingestion automation.

Count manual scripts or exports versus connector-based loads; anything manual becomes a bottleneck when data volumes or schema changes spike.
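
A rough version of that count can be scripted against whatever job inventory you already keep. The inventory format and the method labels below are hypothetical; this is not tied to any Fivetran API.

```python
from collections import Counter

# Hypothetical inventory of ingestion jobs; the 'method' labels are illustrative.
jobs = [
    {"source": "crm", "method": "connector"},
    {"source": "billing", "method": "manual_script"},
    {"source": "finance_sheets", "method": "manual_export"},
    {"source": "web_events", "method": "connector"},
]
MANUAL_METHODS = {"manual_script", "manual_export"}


def ingestion_audit(jobs: list[dict]) -> dict:
    """Count manual vs automated loads and list the manual sources."""
    counts = Counter(
        "manual" if job["method"] in MANUAL_METHODS else "automated" for job in jobs
    )
    manual_sources = sorted(j["source"] for j in jobs if j["method"] in MANUAL_METHODS)
    total = len(jobs)
    return {
        "manual": counts["manual"],
        "automated": counts["automated"],
        "manual_share": counts["manual"] / total if total else 0.0,
        "manual_sources": manual_sources,
    }


audit = ingestion_audit(jobs)
```

The list of manual sources is the actionable part: each entry is a candidate for replacement with a managed connector.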

Instrument quality at the destination.

Add transformation tests that fail the pipeline if null rates or duplication breach SLAs. Surface those metrics on the dashboard.
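
A quality gate of this kind can be very small. The sketch below assumes rows arrive as dictionaries and that the SLA is expressed as a maximum null rate and a maximum duplicate rate; the thresholds, column names and sample batch are placeholders.

```python
class QualityGateError(Exception):
    """Raised to fail the pipeline when a quality SLA is breached."""


def null_rate(rows: list[dict], column: str) -> float:
    """Share of rows where the column is missing or None."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(column) is None) / len(rows)


def duplicate_rate(rows: list[dict], key: str) -> float:
    """Share of rows that repeat an already-seen key value."""
    if not rows:
        return 0.0
    keys = [r.get(key) for r in rows]
    return 1 - len(set(keys)) / len(keys)


def enforce_quality(rows, column, key, max_null_rate, max_duplicate_rate) -> dict:
    """Return the metrics, or raise QualityGateError if any SLA is breached."""
    metrics = {
        "null_rate": null_rate(rows, column),
        "duplicate_rate": duplicate_rate(rows, key),
    }
    limits = {"null_rate": max_null_rate, "duplicate_rate": max_duplicate_rate}
    breaches = [name for name, value in metrics.items() if value > limits[name]]
    if breaches:
        raise QualityGateError(f"SLA breached for {breaches}: {metrics}")
    return metrics


batch = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": 5.0},
    {"order_id": 3, "amount": 7.0},
]
metrics = enforce_quality(batch, "amount", "order_id",
                          max_null_rate=0.3, max_duplicate_rate=0.3)
```

Returning the metrics on success means the same function can feed the dashboard, so the numbers people see are the ones the gate enforces.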

Capture lineage once, reuse everywhere.

Deploy an open-metadata collector that writes to both your catalog and the vector database index: one lineage graph, multiple consumers.

Extend your core data team with domain experts.

Fivetran flags the need for a “scaled analytics organisation”. Embed domain data leads in business units to own quality rules and prompt libraries for GenAI use cases.

Actian GenAI Data Readiness Checklist

Actian’s two-page checklist is less a maturity model than a guided interrogation. It lists the questions every stakeholder must be able to answer before a Gen-AI pilot moves forward.

What the checklist adds to your data-estate assessment

How to apply Actian’s checklist inside your organisation

Map questions to owners.

Use the stakeholder matrix to assign each prompt to a named business user, data scientist, data engineer, or data steward. Record answers and evidence in a shared workspace.

Score evidence, not opinions.

For every prompt, grade readiness 0-4 based on artefacts (schemas, lineage graphs, SLAs). A “4” on data quality requires live dashboards showing error rates, not just a verbal assurance.

Surface immediate gaps.

- Missing lineage evidence → deploy open-metadata collectors.

- No clear governance workflow → institute a data-product approval gate that checks PII tagging and access controls.

Tie fixes to risk and speed.

The checklist invites you to weigh “moving too quickly vs too slowly.” Quantify the cost of a hallucination-driven decision versus the cost of waiting, then prioritise controls that both shrink risk and accelerate deployment (e.g., automated quality gates in the ingestion layer).

Treat the checklist as a living artifact.

Use the checklist as a pre-flight inspection that must be passed every time the model, data source, or regulation changes.

After scoring readiness, compare top data engineering companies to plan next steps with a delivery partner.

Common Data Pitfalls and Quick Wins

Even in well-funded AI programmes, it’s the small, hidden issues that derail momentum. The four pitfalls below crop up in almost every readiness assessment we run; the good news is each has a half-day fix that delivers outsized confidence and clears the runway for bigger data-estate upgrades.

Pitfall #1 – No Freshness Indicator

Teams pull data they think is “live” only to learn it hasn’t been updated in days. That delay quietly skews model outputs and erodes trust.

Quick win – Add a “last-loaded” timestamp column (or badge) to the ten most-used tables and surface it in your BI tool. In half a day you give every analyst and data scientist an instant freshness check, with no pipeline rebuild required.
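
The badge logic is a single comparison. A minimal sketch, assuming you can read a timezone-aware last-loaded timestamp per table; the table names, timestamps and the 24-hour limit are illustrative.

```python
from datetime import datetime, timedelta, timezone


def freshness_badge(last_loaded: datetime, now: datetime, max_age: timedelta) -> str:
    """Return 'fresh' or 'stale' plus the age in whole hours."""
    age = now - last_loaded
    status = "fresh" if age <= max_age else "stale"
    hours = int(age.total_seconds() // 3600)
    return f"{status} (loaded {hours}h ago)"


# Fixed 'now' so the example is reproducible; in production use datetime.now(timezone.utc).
now = datetime(2025, 6, 4, 12, 0, tzinfo=timezone.utc)
last_loaded_at = {
    "orders": datetime(2025, 6, 4, 9, 0, tzinfo=timezone.utc),
    "customers": datetime(2025, 6, 1, 12, 0, tzinfo=timezone.utc),
}
badges = {
    table: freshness_badge(ts, now, max_age=timedelta(hours=24))
    for table, ts in last_loaded_at.items()
}
```

Storing timestamps in UTC avoids false “stale” flags when analysts and pipelines sit in different time zones.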

Pitfall #2 – Orphan Tables Without an Owner

When no one is named on a dataset, quality issues linger and lineage stops cold. The result is endless Slack pings asking, “Who owns this table?”

Quick win – Run a catalogue query to list tables with a blank owner field, then spend one meeting assigning a single contact per table. It’s low-effort, and from tomorrow any data question has a human routing path.
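
If your catalogue can export its entries, the orphan list is a one-line filter. The export format below (a list of records with `table` and `owner` keys) is an assumption; adapt the field names to your catalogue.

```python
def orphan_tables(catalogue: list[dict]) -> list[str]:
    """Return tables whose owner field is missing, None, or blank."""
    return sorted(
        entry["table"]
        for entry in catalogue
        if not (entry.get("owner") or "").strip()
    )


# Hypothetical catalogue export.
catalogue = [
    {"table": "sales.orders", "owner": "ana@example.com"},
    {"table": "sales.returns", "owner": ""},
    {"table": "marketing.leads", "owner": None},
    {"table": "ops.shipments", "owner": "   "},
    {"table": "finance.ledger"},
]
orphans = orphan_tables(catalogue)
```

Treating whitespace-only values as blank catches owner fields that were “filled in” with a stray space.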

Pitfall #3 – Ad-Hoc Access Requests

Engineers still grant data access by manual ticket, creating slowdowns and audit headaches. Every new AI use case means another approval queue.

Quick win – Convert your three most-requested datasets to role-based access in IAM and document the roles in the catalogue. Overnight, repeat requests disappear and auditors see a clear, codified permission trail.

Pitfall #4 – Silent Schema Changes

A new column or data-type tweak slips into a source system, silently breaking downstream joins and model features—the team only notices when a dashboard goes blank.

Quick win – Enable a lightweight schema-change alert (e.g., a dbt or Fivetran notification to Slack) on your top data sources; it takes under an hour and gives everyone real-time heads-up before errors cascade.
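
Where a built-in notification is not available, the underlying idea is a comparison of two schema snapshots. The sketch below is a generic illustration, not dbt’s or Fivetran’s mechanism; the snapshot format (column name mapped to type name) and the message wording are assumptions, and delivering the message to Slack is left out.

```python
def schema_diff(previous: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare two {column: type} snapshots of the same source table."""
    return {
        "added": sorted(current.keys() - previous.keys()),
        "removed": sorted(previous.keys() - current.keys()),
        "retyped": sorted(
            col for col in previous.keys() & current.keys()
            if previous[col] != current[col]
        ),
    }


def alert_message(source: str, diff: dict[str, list[str]]):
    """Build a short alert text, or return None when nothing changed."""
    changes = [f"{kind}: {', '.join(cols)}" for kind, cols in diff.items() if cols]
    if not changes:
        return None
    return f"Schema change in {source} | " + " | ".join(changes)


yesterday = {"id": "int", "amount": "float", "region": "str"}
today = {"id": "int", "amount": "str", "channel": "str"}
diff = schema_diff(yesterday, today)
message = alert_message("billing.invoices", diff)
```

Reporting retyped columns separately is deliberate: a type change keeps the column name intact, so it is the change most likely to slip past a casual check.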

What’s the next move: From Self-Assessment to Action

Now you know how to benchmark your data estate; the question is how to turn today’s scores into a month-by-month execution plan without stalling day-to-day operations.

The answer is a detailed roadmap that ladders incremental fixes directly to the AI use cases the board is funding. We’ve broken that journey down step-by-step in a separate, in-depth guide; if you need a practical template that slots straight into your next budgeting cycle, see the full roadmap.

Struggling with patchy data pipelines or a lean AI bench? Vodworks’ AI Readiness program bridges the gap. We kick off with a targeted workshop to nail the AI use cases that promise near-term ROI, then run a readiness audit covering data quality, lineage, governance, and infrastructure.

You’ll walk away with:

- A maturity scorecard tied to the checklist in this article.

- A gap-by-gap action plan with cost and timeline.

- Board-ready numbers that justify investment.

Prefer hands-on help? The same experts can stay to clean data, modernise architecture, and operationalise models, so your first production use case ships on time and within guardrails.

Book a 30-minute discovery call with a Vodworks AI solution architect to review your data estate, prioritise use-cases, and map the next steps that match your current maturity level.
